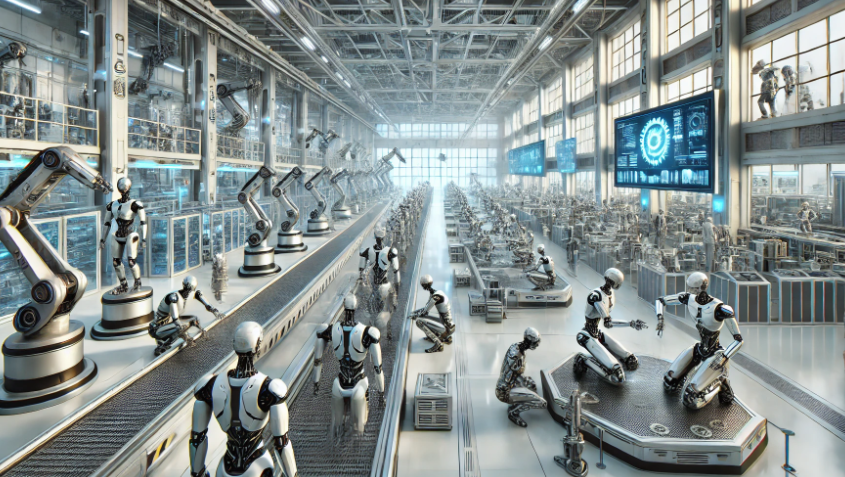

As artificial intelligence (AI) continues its relentless march, a heated debate rages over its potential impact on employment. On one side are the techno-optimists who view AI as a tool to augment human capabilities and create new opportunities. On the other are the doomsayers, sounding alarms over imminent job displacement on a massive scale.

The concerns are certainly understandable. From translation to transcription, content creation to coding, AI systems are rapidly encroaching on professional domains once thought impervious to automation. A court reporter laments that AI-powered speech recognition could render their trade obsolete, while a copywriter is bluntly told their services are no longer needed thanks to AI text generation tools.

Such anecdotes stoke fears that AI will be the ultimate job killer, rendering human labor superfluous across multiple industries. After all, if an AI can outperform humans at an ever-expanding array of cognitive tasks, what’s to stop it from steadily subsuming more roles? The notion of unchecked AI eating away at employment prospects is an understandably chilling scenario.

However, those dismissing such worries as another bout of “tech panic” have a point. Throughout history, new technologies have consistently catalyzed workforce shifts without causing permanent, widespread joblessness. From agricultural machinery to automated manufacturing, innovation has continually disrupted some roles while birthing new ones — a creative destruction of sorts.

One historical instance where technology significantly impacted employment was during the Industrial Revolution. The introduction of mechanized manufacturing, particularly in textile production, led to widespread job losses among traditional craftsmen and manual laborers. This shift towards factories equipped with advanced machinery displaced many workers, creating economic and social upheaval as people struggled to adapt to new forms of employment that required different skills.

The underlying assumption is that AI, like its predecessors, will simply reshuffle the employment deck rather than overturn it entirely.

Yet this view discounts a crucial distinction: while past innovations like tractors were tools to amplify human labor, AI is fundamentally different in that it has the potential to fully replicate and surpass human cognitive capabilities across domains. A tractor might eliminate the need for 100 hours of manual plowing; AI could supplant 10,000 hours of professional training and work.

This dichotomy hints at the heart of the debate. If AI becomes advanced enough to comprehensively automate roles requiring extensive training and expertise, from medicine to law to engineering, then the employment fallout could be seismic in a way not seen with previous technological transitions.

Of course, AI evangelists counter that such displacement will be offset by a proliferation of new, as-yet-unforeseen opportunities. Humans may be pushed out of roles, but smart policy and economic adaptation could funnel them into fields that capitalize on our uniquely human strengths while AI handles the more routine, procedural workloads.

Proponents also point out that even if mass job disruption does occur initially, an AI-powered economy would theoretically be so productive that it could sustain a model of abundant wealth and optional employment. Why toil away if hyper-intelligent AI can do our bidding while showering us with abundance?

This rosy scenario has obvious appeal, but skeptics rightly question how such an AI-created bounty would be governed and distributed. Without mechanisms to ensure the dividends aren’t monopolized, a schism between AI haves and have-nots could lead to unprecedented wealth stratification.

Furthermore, without the economic empowerment afforded by traditional employment, how much autonomy would individuals truly have in an AI-centric world? Might people receive payments that come with rules about what they can and cannot be spent on, raising the specter of an all-powerful technocratic class dictating every aspect of society?

Clearly, there are no easy answers when it comes to squaring AI’s economic disruption with preserving human agency, dignity and socioeconomic mobility. What’s evident, however, is that simply hand-waving away legitimate concerns about AI and jobs is inadvisable. The transformative potential of this technology appears so profound that prudent governance will be required to harness its benefits while mitigating its social shockwaves.

Whether society succumbs to AI-induced unemployment Armageddon or charts a path towards an optimized human-machine symbiosis remains to be seen. But pretending this transition will be akin to tech paradigm shifts of old could prove to be a costly case of institutional myopia. The time to have a serious reckoning with AI’s societal ramifications is already upon us.