Insider Brief

- A new analysis shows that the gap between AI infrastructure investment and actual AI revenue has widened to $600 billion.

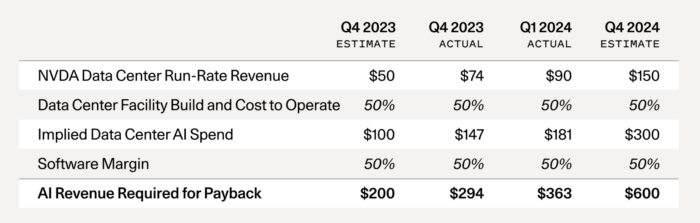

- In September 2023, Sequoia partner David Cahn estimated this gap at approximately $125 billion.

- Capital expenditure (CapEx) this high may signal a bubble in AI spending.

A Sequoia partner has published an analysis showing that the gap between AI infrastructure investment and actual revenue has widened to $600 billion.

This revelation comes as NVIDIA ascends to become one of the most valuable companies in the world, highlighting significant discrepancies in the AI ecosystem.

David Cahn, a partner at Sequoia, initially identified a “$125B hole” in the annual capital expenditure (CapEx) for AI back in September 2023. His latest analysis shows the gap has nearly quintupled.
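As a quick check on the "nearly quintupled" claim, the growth multiple can be computed directly from the two figures reported in this article. This is a minimal sketch; the function and variable names are illustrative and do not come from Cahn's analysis.

```python
# Figures reported in the article, in billions of US dollars.
gap_2023 = 125  # Cahn's September 2023 estimate
gap_2024 = 600  # Cahn's updated estimate


def growth_multiple(old: float, new: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


multiple = growth_multiple(gap_2023, gap_2024)
print(f"The gap grew {multiple:.1f}x")  # prints "The gap grew 4.8x"
```

A multiple of 4.8 is just short of a fivefold increase, which is why "nearly quintupled" is a fair description.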

Annual capital expenditure (CapEx) refers to the funds a company spends on acquiring, maintaining, or upgrading its physical assets such as buildings, technology, or equipment. This investment is essential for sustaining and expanding the company’s operations and capabilities.

A high CapEx typically suggests that a company is focused on growth, modernization, or large-scale projects. But when CapEx grows too high, it can create financial challenges such as cash-flow constraints and higher debt.

Cahn’s updated calculations show that this gap has ballooned to $600 billion, raising critical questions about the true end-user value of AI technologies.

To give some idea of how large $600 billion is, consider this: the total budget for the US Department of Defense in 2024 is approximately $842 billion, so $600 billion is about 71% of that figure. Leading tech giants like Amazon and Alphabet (Google’s parent company) have annual CapEx in the range of $40 billion to $60 billion, which makes $600 billion 10 to 15 times the annual capital expenditure of these major corporations.
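The comparisons above are simple ratios, and the sketch below reproduces them from the figures quoted in this article. The variable names are illustrative, and the inputs are the article's approximate numbers rather than audited financial data.

```python
# All values in billions of US dollars, as quoted in the article.
gap = 600
dod_budget_2024 = 842
big_tech_capex_range = (40, 60)  # approximate annual CapEx, low and high end

# Share of the 2024 US Department of Defense budget.
share_of_dod = gap / dod_budget_2024

# How many times larger the gap is than a single company's annual CapEx.
# Dividing by the high end gives the smaller multiple, and vice versa.
low_multiple = gap / big_tech_capex_range[1]
high_multiple = gap / big_tech_capex_range[0]

print(f"Share of DoD budget: {share_of_dod:.1%}")
print(f"Multiple of big-tech CapEx: {low_multiple:.0f}x to {high_multiple:.0f}x")
```

With these inputs the share comes out at roughly 71%, and the multiple spans 10x to 15x.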

AI’s Explosive Growth

Since the initial analysis, the AI landscape has undergone substantial changes.

The severe GPU supply shortage that peaked in late 2023 has largely been resolved. Startups, which once struggled to secure GPUs, now find it relatively easy to acquire them with reasonable lead times. This shift has led to an increase in GPU stockpiles, with NVIDIA reporting that about half of its data center revenue in Q4 came from large cloud providers. Microsoft alone accounted for approximately 22% of NVIDIA’s Q4 revenue.

Hyperscale CapEx has reached historic levels, as Big Tech continues to invest heavily in GPUs, indicating sustained demand for AI infrastructure.

OpenAI remains the dominant force in the AI revenue landscape, with its revenue rising to $3.4 billion from $1.6 billion in late 2023. A handful of startups have scaled their revenues, but none has passed $100 million, so the revenue gap between OpenAI and other AI companies remains significant.

This disparity raises questions about the true end-user value of AI products outside of widely used applications like ChatGPT. For AI companies to secure long-term consumer investment, they must deliver substantial value akin to popular services like Netflix and Spotify.

The Growing Revenue Gap: From $125B to $600B

Cahn’s latest analysis indicates that the gap has grown nearly fivefold even under optimistic revenue projections: tech giants like Google, Microsoft, Apple and Meta each generating $10 billion annually from AI-related ventures, plus additional contributions from companies like Oracle, ByteDance, Alibaba, Tencent, X, and Tesla.

This highlights the immense challenge in aligning AI infrastructure investments with actual revenue generation, according to Cahn.

NVIDIA’s announcement of the B100 chip, which offers 2.5 times better performance at only 25% more cost, is expected to drive a final surge in demand for NVIDIA chips. This chip represents a significant cost-performance improvement over the H100, potentially leading to another supply shortage as companies scramble to acquire B100s.
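To see what "2.5 times better performance at only 25% more cost" implies for price-performance, the two ratios can be combined. This is a rough sketch that treats performance as a single number and cost as purchase price only. Both are simplifying assumptions that ignore power, cooling, and workload differences.

```python
# B100 relative to the H100 (H100 = 1.0), using the figures in the article.
relative_performance = 2.5  # 2.5 times the performance
relative_cost = 1.25        # 25% more cost

# Performance obtained per dollar spent, relative to the H100.
perf_per_dollar = relative_performance / relative_cost

# Cost of one unit of performance, relative to the H100.
cost_per_unit_perf = relative_cost / relative_performance

print(f"Performance per dollar: {perf_per_dollar:.1f}x the H100")
print(f"Cost per unit of performance: {cost_per_unit_perf:.0%} of the H100")
```

Under these assumptions, a B100 delivers twice the performance per dollar of an H100, which is the same as halving the cost of each unit of compute.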

Cahn draws parallels between AI infrastructure build-outs and historical investments in physical infrastructure like railroads.

Unlike physical infrastructure, which often holds intrinsic value and monopolistic pricing power, GPU data centers face intense competition, turning GPU computing into a commoditized service. This competitive landscape, coupled with the rapid depreciation of semiconductor technology, poses a number of risks, including capital incineration similar to that seen in past speculative investment frenzies.

He writes: “Even in the case of railroads, and in the case of many new technologies, speculative investment frenzies often lead to high rates of capital incineration. Engines That Move Markets is one of the best textbooks on technology investing, and the major takeaway (indeed, focused on railroads) is that a lot of people lose a lot of money during speculative technology waves. It’s hard to pick winners, but much easier to pick losers (canals, in the case of railroads).”

Where Cahn’s Assumptions May Be Wrong

Cahn has built his argument on solid ground, but it still rests on several assumptions and on predictions that are anything but certain in this dynamic AI-powered era.

First, new AI products and services appear nearly every day, and market demand for them could accelerate more rapidly than anticipated, potentially closing the projected $600 billion gap faster than expected. Rapid technological advances and new, unforeseen revenue streams from innovations in AI applications could also narrow the gap. In addition, improvements in AI infrastructure efficiency, the emergence of new business models and favorable regulatory changes could all drive higher-than-expected revenue growth.

The competitive landscape in the AI sector is dynamic and could shift, impacting revenue forecasts. Broader economic factors and variations in consumer adoption rates of AI technologies might further influence the revenue gap. Cahn’s emphasis on GPU costs may overlook other critical aspects of AI infrastructure and operational expenses, such as software and talent.

Ultimately, Cahn’s analysis provides valuable insights, but it may not fully account for the complexities and potential future developments in the AI ecosystem. Still, to Cahn’s point, it would take dramatic shifts in current patterns to close this gap in a reasonable time frame.

Winners and Losers in the AI Era

Although some of its assumptions may not hold, Cahn’s piece isn’t meant to be a doomer manifesto. In fact, he suggests this rapid growth might be normal, if not healthy, in the long run.

Cahn writes: “Speculative frenzies are part of technology, and so they are not something to be afraid of. Those who remain level-headed through this moment have the chance to build extremely important companies. But we need to make sure not to believe in the delusion that has now spread from Silicon Valley to the rest of the country, and indeed the world. That delusion says that we’re all going to get rich quick, because AGI is coming tomorrow, and we all need to stockpile the only valuable resource, which is GPUs.”

He concludes: “In reality, the road ahead is going to be a long one. It will have ups and downs. But almost certainly it will be worthwhile.”