Insider Brief

- A team of researchers published an AI risk categorization study that highlights significant global disparities in AI regulation, varying by region and technology.

- Researchers developed a four-tiered taxonomy to standardize AI risk assessments across governmental and corporate policies, identifying 314 unique risk categories.

- The findings emphasize the need for a more harmonized global approach to AI regulation to ensure consistent safety and ethical standards.

Artificial Intelligence (AI) developers and policymakers face a hard slog determining a global approach to AI regulation because the current frameworks vary widely by region and technology, according to a new study.

The researchers, who unveiled a comprehensive framework to categorize and evaluate risks associated with AI systems, suggest the incongruities across nations and technologies could lead to inconsistent safety and ethical standards.

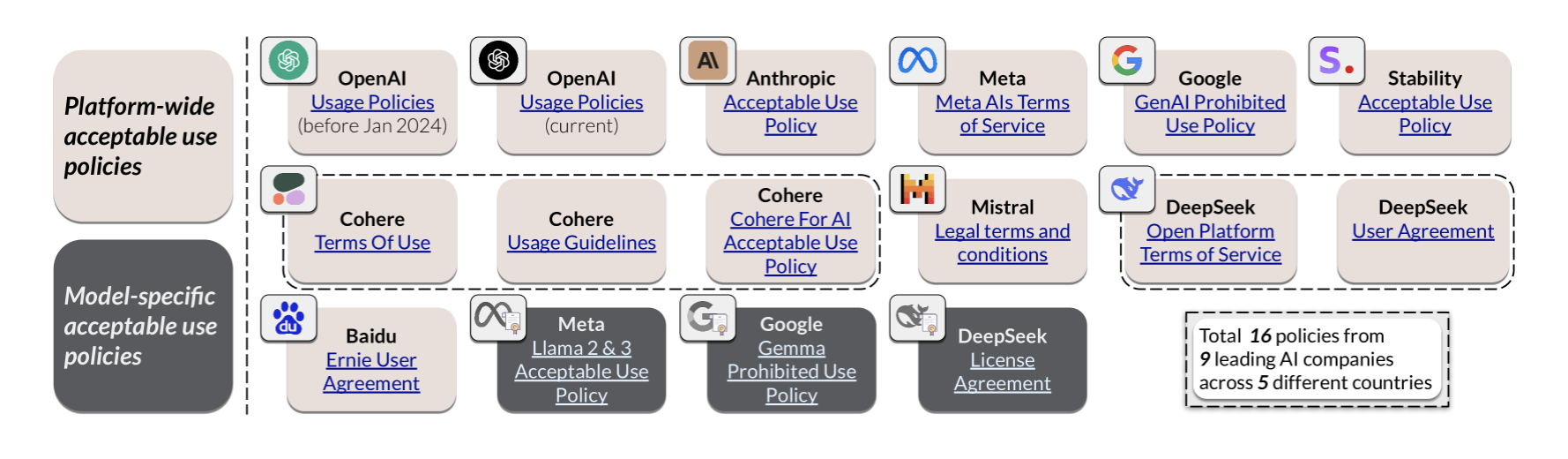

The study, published on the pre-print server arXiv, analyzes AI-related risks as defined by governmental regulations in the European Union (EU), United States (US), and China, alongside policies from 16 major corporations. The findings reveal a fragmented landscape where AI regulation varies significantly depending on geographical location and the specific AI technologies in question.

The research provides a four-tiered taxonomy that consolidates 314 unique risk categories. These categories are grouped under four top-level classifications: System & Operational Risks, Content Safety Risks, Societal Risks, and Legal & Rights Risks. The goal is to establish a unified framework that can standardize AI safety evaluations across both governmental and corporate sectors, addressing a growing need for consistency in an era where AI technologies are rapidly advancing.

A Fragmented Regulatory Landscape

One of the most striking revelations of the study is the disparity in AI risk assessment and regulation across different countries. The EU, known for its stringent regulatory stance on digital technologies, emphasizes a broad spectrum of risks, particularly those related to privacy, data protection, and societal impact. The EU’s approach is characterized by a precautionary principle, aiming to mitigate potential harms before they occur. This contrasts sharply with the US, where AI regulation is less centralized and more industry-driven. The US framework tends to focus more on operational risks and the immediate functional safety of AI systems, often leaving broader societal concerns to be addressed through corporate self-regulation.

China, on the other hand, presents a unique approach, combining stringent government oversight with a focus on maintaining social stability. The Chinese regulatory framework heavily emphasizes risks related to content safety and information control, reflecting the country’s broader governance strategy. This includes strict regulations on AI-generated content, with particular attention to the potential for such content to disrupt social harmony or challenge governmental authority.

Corporate Policies: Diverse and Inconsistent

The study also sheds light on the diversity of corporate AI policies, which further complicates the regulatory landscape. The 16 corporate policies analyzed range from tech giants like Google and Microsoft to smaller, specialized AI firms. These policies vary widely in their coverage of AI risks, with some companies adopting comprehensive frameworks that align closely with governmental regulations, while others focus narrowly on operational risks or specific AI applications.

For instance, tech behemoths like Google have established detailed policies that address a wide array of risks, including ethical concerns, bias in AI algorithms, and potential societal impacts. In contrast, smaller firms, particularly those specializing in niche AI applications, often focus their policies on technical and operational risks, leaving broader societal implications largely unaddressed.

This inconsistency in corporate approaches underscores the need for a more standardized framework, one that can bridge the gap between differing governmental regulations and the varied risk assessments seen in the private sector.

A Unified Framework for AI Risk Assessment

The researchers behind the study propose their four-tiered taxonomy as a solution to this fragmented landscape. By categorizing AI risks into System & Operational Risks, Content Safety Risks, Societal Risks, and Legal & Rights Risks, the taxonomy provides a comprehensive structure that can be applied across different regulatory and corporate environments.

System & Operational Risks encompass technical issues that could lead to AI system failures, such as software bugs or hardware malfunctions. Content Safety Risks pertain to the generation and dissemination of harmful or misleading content by AI systems, a category of particular concern in China. Societal Risks address the broader impact of AI on society, including issues of bias, inequality, and the potential for AI to exacerbate existing social divides. Lastly, Legal & Rights Risks cover potential violations of laws and regulations, including those related to privacy, data protection, and intellectual property.
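For readers who think in code, the idea of a tiered taxonomy can be sketched as a small tree. The example below is only an illustration under stated assumptions: the four top-level names come from the study as described above, but the leaf entries are examples lifted from this article's descriptions rather than the study's actual 314 categories, the tree has two tiers instead of four, and the `RiskNode` class and `coverage` function are hypothetical names invented for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class RiskNode:
    """One node in a risk taxonomy tree; a node with no children is a leaf category."""
    name: str
    children: list["RiskNode"] = field(default_factory=list)

    def leaves(self) -> list[str]:
        """Return the names of all leaf categories under this node."""
        if not self.children:
            return [self.name]
        return [leaf for child in self.children for leaf in child.leaves()]


# Top-level names come from the article. The leaves are illustrative examples
# taken from its descriptions, NOT the study's actual 314 categories.
TAXONOMY = RiskNode("AI risks", [
    RiskNode("System & Operational Risks", [
        RiskNode("software bugs"),
        RiskNode("hardware malfunctions"),
    ]),
    RiskNode("Content Safety Risks", [
        RiskNode("harmful content"),
        RiskNode("misleading content"),
    ]),
    RiskNode("Societal Risks", [
        RiskNode("bias"),
        RiskNode("inequality"),
    ]),
    RiskNode("Legal & Rights Risks", [
        RiskNode("privacy"),
        RiskNode("data protection"),
        RiskNode("intellectual property"),
    ]),
])


def coverage(policy_risks: set[str]) -> dict[str, float]:
    """Fraction of each top-level category's leaves that a policy mentions."""
    result = {}
    for top in TAXONOMY.children:
        leaves = top.leaves()
        covered = sum(1 for leaf in leaves if leaf in policy_risks)
        result[top.name] = covered / len(leaves)
    return result
```

Under these assumptions, a hypothetical policy that mentions only "software bugs" and "privacy" would cover half of the toy System & Operational leaves, a third of the Legal & Rights leaves, and none of the other two categories. That is the kind of uneven coverage the study reports when it maps real policies onto a shared set of categories.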

Implications for the Future of AI Regulation

The study’s findings have significant implications for the future of AI regulation. As AI technologies continue to evolve, the need for a standardized, globally recognized framework for assessing and mitigating risks becomes increasingly urgent. The disparities highlighted in the study suggest that without such a framework, the global AI landscape will remain fragmented, with varying levels of safety and oversight depending on geographical location and the specific AI technologies in use.

The researchers also call into question the effectiveness of current regulatory approaches, particularly in the US, where the reliance on industry-driven standards may leave significant risks unaddressed. The EU’s comprehensive but precautionary approach may serve as a model for other regions, although its implementation on a global scale presents its own challenges, particularly in countries with differing governance philosophies, such as China.

As the debate over AI regulation continues, the researchers hope the taxonomy proposed in this study offers a potential pathway toward greater consistency and safety in AI development and deployment. However, achieving global consensus on AI risk management will require ongoing dialogue and collaboration between governments, corporations, and other stakeholders.

The researchers involved in the study include: Yi Zeng from Virginia Tech, Kevin Klyman from Stanford University and Harvard University, Andy Zhou from Lapis Labs and the University of Illinois Urbana-Champaign, Yu Yang from the University of California, Los Angeles, Minzhou Pan from Northeastern University, Ruoxi Jia from Virginia Tech, Dawn Song from the University of California, Berkeley, Percy Liang from Stanford University, and Bo Li from the University of Chicago.