- A report by Epoch AI projects that AI training compute could grow 10,000-fold by 2030, provided key challenges are addressed.

- Power availability, chip manufacturing capacity, data scarcity, and the “latency wall” are identified as major constraints to achieving this growth.

- Realizing this scaling will require hundreds of billions of dollars in investment, with substantial uncertainty about whether such levels will be pursued.

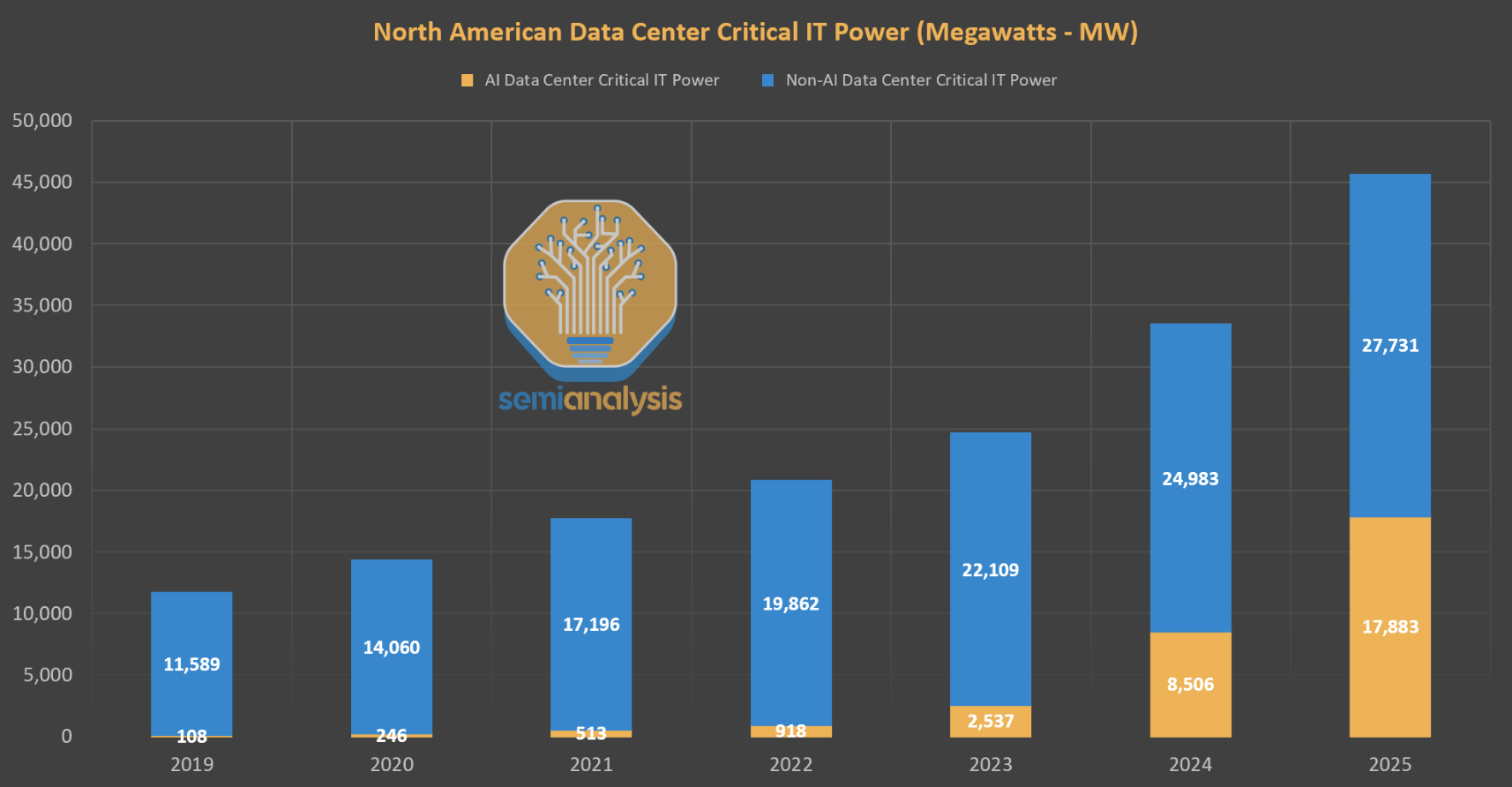

- Image: Reported and planned total installed IT capacity of North America data centers via SemiAnalysis’ data center industry model.

Artificial intelligence (AI) could see a staggering 10,000-fold increase in the compute used to train its largest models by the end of the decade, according to a new report by Epoch AI. This ambitious projection extends the current trajectory of AI model training, where training compute has been growing at roughly 4x per year. However, sustaining that growth will require overcoming significant challenges in power availability, chip manufacturing, data scarcity, and training latency.
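To see how a 4x annual growth rate relates to a 10,000-fold increase, the short sketch below solves for the number of years of steady compounding needed. It assumes growth stays constant at exactly 4x per year, which is a simplification; the function name `years_to_reach` is hypothetical and not from the report.

```python
import math


def years_to_reach(factor: float, annual_growth: float) -> float:
    """Years of steady compounding growth needed to multiply a quantity by `factor`."""
    # Solve annual_growth ** t == factor for t by taking logarithms.
    return math.log(factor) / math.log(annual_growth)


if __name__ == "__main__":
    t = years_to_reach(10_000, 4.0)
    print(f"{t:.2f} years of 4x/year growth gives 10,000x")
    print(f"4x/year for 6 years gives {4.0 ** 6:,.0f}x")
```

At 4x per year, a 10,000-fold increase takes a little over six and a half years, so the projection amounts to the existing trend continuing uninterrupted for most of the decade.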

Epoch AI’s report, titled “Can AI Scaling Continue Through 2030?”, explores whether the rapid expansion of AI training can continue at its current pace. The analysis considers four primary bottlenecks: power constraints, chip manufacturing capacity, data availability, and the so-called “latency wall,” which refers to the unavoidable delays in the sequence of computations that training requires.

Power Constraints

The report highlights power availability as one of the most pressing concerns. AI model training is energy-intensive, and scaling up will require substantial increases in power capacity. According to the report, data center campuses drawing 1 to 5 gigawatts (GW) of power could support training runs ranging from 1e28 to 3e29 floating-point operations (FLOP). By comparison, GPT-4, one of the most advanced AI models today, was likely trained using around 2e25 FLOP.

“Geographically distributed training could tap into multiple regions’ energy infrastructure to scale further,” the report notes. “Given current projections of US data center expansion, a US distributed network could likely accommodate 2 to 45 GW, supporting training runs from 2e28 to 2e30 FLOP.”
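To put the power-constrained figures in perspective, the sketch below expresses the endpoints of each quoted FLOP range as a multiple of GPT-4's estimated 2e25 FLOP. The numbers come from the article; the dictionary layout and the helper name `multiple_of_gpt4` are illustrative assumptions.

```python
GPT4_FLOP = 2e25  # the report's estimate of GPT-4's training compute

# (low, high) training-run sizes in FLOP, as quoted in the article.
RANGES = {
    "single campus (1-5 GW)": (1e28, 3e29),
    "US distributed network (2-45 GW)": (2e28, 2e30),
}


def multiple_of_gpt4(flop: float) -> float:
    """Express a training-run size as a multiple of GPT-4's estimated compute."""
    return flop / GPT4_FLOP


if __name__ == "__main__":
    for name, (low, high) in RANGES.items():
        print(f"{name}: {multiple_of_gpt4(low):,.0f}x to {multiple_of_gpt4(high):,.0f}x GPT-4")
```

On these figures, a single large campus spans roughly 500x to 15,000x GPT-4's compute, while the upper end of the distributed scenario reaches about 100,000x.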

The authors emphasize that achieving such levels of power would likely require building new power stations and expanding the electricity grid to meet the enormous demand.

“Beyond this, an actor willing to pay the costs of new power stations could access significantly more power, if planning three to five years in advance,” the researchers add.

Chip Manufacturing Capacity

The production of AI chips, particularly GPUs, is another critical factor. Current projections indicate that the chip manufacturing industry could produce enough GPUs to support a 9e29 FLOP training run by 2030. This estimate assumes that the industry can continue expanding its production capacity by 30% to 100% per year, in line with recent trends.
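The gap between 30% and 100% annual growth compounds quickly. The sketch below computes the cumulative capacity multiplier over a hypothetical six-year horizon (for example, 2024 to 2030); the article does not state a base year, so both the horizon and the helper name `capacity_multiplier` are assumptions.

```python
def capacity_multiplier(annual_growth_rate: float, years: int) -> float:
    """Cumulative growth factor after `years` of constant annual growth."""
    return (1.0 + annual_growth_rate) ** years


# Hypothetical horizon; the article does not say which year growth is measured from.
YEARS = 6

if __name__ == "__main__":
    for rate in (0.30, 1.00):
        print(f"{rate:.0%}/year for {YEARS} years -> {capacity_multiplier(rate, YEARS):.1f}x capacity")
```

Over six years, the low end of the range yields under 5x more capacity while the high end yields 64x, which helps explain why the report's chip estimates carry such wide uncertainty.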

From the report: “AI chips provide the compute necessary for training large AI models. Currently, expansion is constrained by advanced packaging and high-bandwidth memory production capacity. However, given the scale-ups planned by manufacturers, there is likely to be enough capacity for 100 million H100-equivalent GPUs to be dedicated to training.”

Despite these optimistic projections, the report cautions that these estimates are highly uncertain, particularly because future advanced packaging and memory production capacity is hard to predict. The potential range of outcomes is broad: from 20 million to 400 million H100-equivalent GPUs, corresponding to training runs of 1e29 to 5e30 FLOP.
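Converting a GPU count into a training-run size follows a standard back-of-the-envelope formula: number of chips × peak throughput per chip × utilization × training time. The sketch below applies it with hypothetical inputs (roughly 1e15 FLOP/s peak per H100, 40% utilization, a 100-day run). These are not the report's assumptions, so the outputs illustrate the structure of the calculation rather than reproduce the report's figures.

```python
SECONDS_PER_DAY = 86_400


def training_flop(num_gpus: float, peak_flop_per_s: float, utilization: float, days: float) -> float:
    """Total FLOP delivered: chips x peak throughput x utilization x wall-clock seconds."""
    return num_gpus * peak_flop_per_s * utilization * days * SECONDS_PER_DAY


# Hypothetical inputs, not taken from the report:
H100_PEAK = 1e15    # rough dense BF16 peak of one H100, in FLOP/s
UTILIZATION = 0.4   # assumed fraction of peak actually achieved during training
DAYS = 100          # assumed length of the training run

if __name__ == "__main__":
    for gpus in (20e6, 100e6, 400e6):
        flop = training_flop(gpus, H100_PEAK, UTILIZATION, DAYS)
        print(f"{gpus:.0e} H100-equivalents -> {flop:.1e} FLOP")
```

Because every factor multiplies the result, a longer training run or higher utilization scales the total FLOP proportionally, which is one reason published estimates built on different assumptions can differ by a factor of a few.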

Data Scarcity and the Latency Wall

Data availability also poses a challenge. Training large AI models requires vast datasets, and the report projects that the indexed web could increase by 50% by 2030, with multimodal learning from image, video and audio data tripling the data available for training. However, even with these increases, data scarcity could still constrain AI scaling.
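One way to see why data can become a bottleneck is a widely used rule of thumb from DeepMind's Chinchilla work (not from the Epoch report): training compute is roughly C ≈ 6·N·D for N parameters and D tokens, and a compute-optimal run uses about 20 tokens per parameter. The sketch below solves those two relations for D. Treat it as an illustrative heuristic; the report's own data estimates may use different assumptions, including how multimodal data is counted.

```python
import math

TOKENS_PER_PARAM = 20  # Chinchilla-style rule of thumb (assumption, not from the report)


def compute_optimal_tokens(flop: float) -> float:
    """Training tokens D for a compute-optimal run, using C = 6*N*D and D = 20*N."""
    # Substituting N = D / 20 into C = 6 * N * D gives C = 0.3 * D**2.
    return math.sqrt(flop * TOKENS_PER_PARAM / 6)


if __name__ == "__main__":
    # Compute budgets quoted in the article (GPT-4 estimate, power and chip scenarios).
    for flop in (2e25, 3e29, 9e29):
        tokens = compute_optimal_tokens(flop)
        print(f"{flop:.0e} FLOP -> ~{tokens:.1e} tokens, ~{tokens / TOKENS_PER_PARAM:.1e} parameters")
```

Because D grows with the square root of compute, a 10,000-fold increase in compute calls for roughly 100 times more training data under this heuristic.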

The “latency wall,” a fundamental speed limit imposed by unavoidable delays in AI training computations, is another potential bottleneck. The report estimates that cumulative latency on modern GPU setups would cap training runs at 3e30 to 1e32 FLOP, depending on the extent to which AI developers can overcome these limitations.
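The intuition behind a latency wall can be sketched with two limits: each optimizer step takes some minimum wall-clock time, so only a bounded number of sequential steps fit into a fixed training window, and each step can only usefully process so much compute before larger batches stop helping. Multiplying the two gives a ceiling on total training FLOP. All inputs and function names below are hypothetical, chosen only to show the shape of the calculation; they are not the report's model.

```python
SECONDS_PER_DAY = 86_400


def max_sequential_steps(training_days: float, min_step_latency_s: float) -> int:
    """Upper bound on optimizer steps when every step takes at least `min_step_latency_s`."""
    return int(training_days * SECONDS_PER_DAY // min_step_latency_s)


def latency_flop_cap(training_days: float, min_step_latency_s: float,
                     max_useful_flop_per_step: float) -> float:
    """Ceiling on total FLOP: number of steps x the most compute one step can usefully absorb."""
    return max_sequential_steps(training_days, min_step_latency_s) * max_useful_flop_per_step


if __name__ == "__main__":
    # Hypothetical values: a 90-day run, at least 1 second per step,
    # and at most 1e23 FLOP of useful work per step.
    steps = max_sequential_steps(90, 1.0)
    print(f"{steps:,} steps -> cap of {latency_flop_cap(90, 1.0, 1e23):.1e} FLOP")
```

Under this framing, lowering per-step latency or raising the amount of work each step can usefully absorb pushes the ceiling higher, which mirrors the report's point that the cap depends on how far developers can work around these limits.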

Economic Considerations

While the report focuses primarily on the technical feasibility of AI scaling, it also touches on the economic implications. Achieving the projected growth will require substantial investment, potentially amounting to hundreds of billions of dollars over the coming years.

“Whether AI developers will actually pursue this level of scaling depends on their willingness to invest heavily in AI expansion,” the report states. “While we briefly discuss the economics of AI investment later, a thorough analysis of investment decisions is beyond the scope of this report.”

Epoch AI is a research institute that investigates the trends shaping the trajectory of AI. The full report is available on Epoch AI’s website.