Insider Brief:

- Microsoft Research introduced Muse, a generative AI model that simulates video game environments and player actions, developed with Xbox Game Studios’ Ninja Theory.

- Muse predicts gameplay sequences by learning from real human gameplay data, generating visuals and controller inputs that follow game physics and mechanics.

- The WHAM Demonstrator enables developers to experiment with Muse, modify gameplay elements, and explore AI-driven game design possibilities.

- Microsoft open-sourced Muse via Azure AI Foundry and published its findings in Nature, highlighting the model’s consistency, diversity, and persistency in generating gameplay.

PRESS RELEASE — In a recent announcement, Microsoft Research introduced Muse, a generative AI model designed to simulate video game environments and player actions. Developed by the Game Intelligence and Teachable AI Experiences teams in collaboration with Xbox Game Studios’ Ninja Theory, Muse is the first World and Human Action Model (WHAM)—a system capable of generating both game visuals and controller inputs to predict gameplay sequences.

How Muse Works

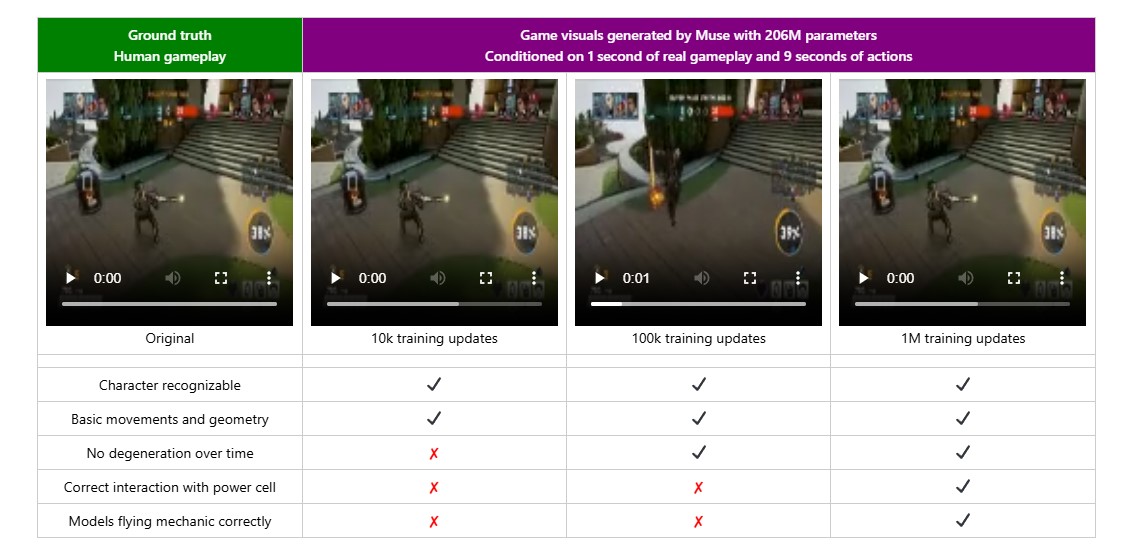

Muse learns from real human gameplay data and can generate sequences that closely follow the game’s actual physics and mechanics. In “world model mode,” it predicts how a game will unfold from an initial prompt, such as a short gameplay clip or a sequence of controller actions. This allows it to generate gameplay sequences several minutes long that remain consistent with the original game environment.
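The prompt-then-predict loop described above can be pictured as an autoregressive rollout. The following is a minimal sketch in Python under loud assumptions: `Step`, `toy_predict_next`, and `rollout` are hypothetical names, frames and actions are reduced to single integers, and the random "model" is a stand-in that has nothing to do with Muse's actual architecture or API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    frame: int   # stand-in for an encoded game image
    action: int  # stand-in for a discretized controller input


def toy_predict_next(history, rng):
    """Hypothetical stand-in for the model: propose the next step from the history."""
    last = history[-1]
    action = rng.choice([-1, 0, 1])
    # Toy "game physics": the next frame is the last frame shifted by the action.
    return Step(frame=last.frame + action, action=action)


def rollout(prompt, num_steps, seed=0):
    """Extend a prompt one step at a time, feeding each prediction back in."""
    rng = random.Random(seed)
    history = list(prompt)
    for _ in range(num_steps):
        history.append(toy_predict_next(history, rng))
    return history


prompt = [Step(frame=0, action=0), Step(frame=1, action=1)]
sequence = rollout(prompt, num_steps=10, seed=42)
print(len(sequence))  # 12: the 2 prompt steps plus 10 generated steps
```

The point of the sketch is only the control flow: each generated step is appended to the history and conditions the next prediction, which is how a short prompt can be extended into a longer sequence.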

The model was trained on data from Bleeding Edge, a 4v4 online multiplayer game developed by Ninja Theory. Training involved more than 1 billion images and controller actions, equating to over seven years of gameplay. Muse can generate diverse gameplay variations, from different movement paths to visual elements like character hoverboards.

Towards AI for Game Development

Muse is part of a broader effort to integrate AI into game design and support human creativity. Researchers designed the system to help game developers explore ideas, test mechanics, and generate new gameplay sequences without manual programming. A key focus of the project was ensuring the model accurately represents game physics, such as maintaining correct character movement and interactions with objects.

The WHAM Demonstrator, an interactive tool, allows users to experiment with Muse’s capabilities. Developers can prompt Muse with an image or gameplay sequence, then observe different ways the game could evolve from that starting point. Notably, Muse also supports “persistency,” meaning it can retain user modifications, such as a new character added to a scene, and generate plausible gameplay variations from that modified state.

Open-Source Release and Research Impact

Microsoft has open-sourced Muse’s model weights, sample data, and the WHAM Demonstrator via Azure AI Foundry, allowing other researchers to build on the work. The team’s findings are also published in Nature, highlighting the model’s performance in key areas:

- Consistency – Ensuring that generated gameplay follows the game’s physics and rules.

- Diversity – Generating multiple valid gameplay variations from the same initial conditions.

- Persistency – Maintaining introduced modifications throughout gameplay sequences.
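One way to read the three properties above is as checks that could be run on generated rollouts. The sketch below is a toy illustration only, not the evaluation used in the Nature paper: the generator, the dictionary frame format, the `MAX_STEP` rule, and every function name are hypothetical.

```python
import random

MAX_STEP = 1  # toy "game rule": the player moves at most one unit per frame


def toy_generate(start_frame, num_steps, seed):
    """Hypothetical stand-in for a model rollout that starts from a single frame."""
    rng = random.Random(seed)
    frames = [dict(start_frame)]
    for _ in range(num_steps):
        nxt = dict(frames[-1])  # carry every object in the scene forward
        nxt["player_x"] += rng.choice([-1, 0, 1])
        frames.append(nxt)
    return frames


def is_consistent(frames):
    """Consistency: no frame-to-frame move breaks the toy game rule."""
    return all(abs(b["player_x"] - a["player_x"]) <= MAX_STEP
               for a, b in zip(frames, frames[1:]))


def count_distinct(rollouts):
    """Diversity: number of distinct player trajectories across rollouts."""
    return len({tuple(f["player_x"] for f in r) for r in rollouts})


def is_persistent(frames, key):
    """Persistency: a user-added object is still present in every frame."""
    return all(key in f for f in frames)


start = {"player_x": 0}
edited = {**start, "new_character": True}  # the user's modification to the scene
rollouts = [toy_generate(edited, num_steps=20, seed=s) for s in range(5)]

print(all(is_consistent(r) for r in rollouts))
print(count_distinct(rollouts))
print(all(is_persistent(r, "new_character") for r in rollouts))
```

Because the toy generator copies each frame forward, it passes the persistency check trivially. A learned model offers no such guarantee, which is why the property is worth measuring.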

Muse’s development involved large-scale AI training, initially on V100 GPUs and later scaled up to H100 GPUs, improving model resolution and fidelity. The project also engaged game developers early in the process, ensuring the technology aligns with industry needs.

Future Applications

The introduction of Muse signals a shift in how AI can assist in game development, potentially influencing areas such as level design, AI-driven playtesting, and automated content generation. While Muse was trained on Bleeding Edge, similar models could be adapted to different games, providing developers with a powerful tool for gameplay ideation and experimentation.