Insider Brief:

- Perplexity released R1 1776, an open-source, post-trained version of DeepSeek-R1, designed to provide unbiased and factual responses.

- Perplexity removed the model’s censorship by identifying 300 sensitive topics and post-training it on a curated dataset with Nvidia’s NeMo 2.0 framework.

- R1 1776 preserves the original model’s reasoning abilities while keeping its responses accurate, with extensive evaluations benchmarking its performance against leading LLMs.

- Developers can access R1 1776 via Hugging Face or the Sonar API, enabling fact-based, uncensored AI interactions for various applications.

PRESS RELEASE — Perplexity has announced the open-source release of R1 1776, a post-trained version of the DeepSeek-R1 large language model (LLM), designed to provide unbiased, accurate, and factual information. The model weights are now available on Hugging Face, and developers can also access it through the Sonar API.

Why R1 1776 Was Developed

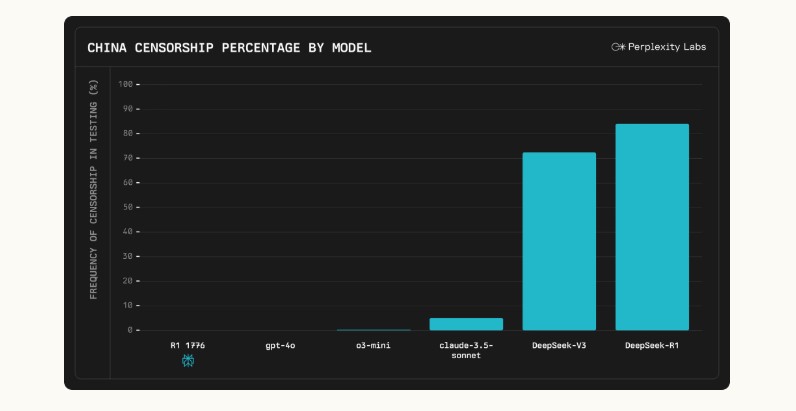

While DeepSeek-R1 achieves strong performance on reasoning tasks, it was restricted in how it addressed sensitive topics: the model often defaulted to censored responses aligned with Chinese government narratives, limiting its usefulness in open discourse. To address this, Perplexity post-trained R1 to remove these biases while preserving the model’s original reasoning capabilities.

How Perplexity Removed Censorship

Perplexity’s team identified 300 censored topics and developed a multilingual censorship classifier to detect and extract relevant queries from existing datasets. From these, they curated a dataset of 40,000 prompts with factual responses, screened to exclude personally identifiable information (PII). The team then post-trained R1 1776 using an adapted version of Nvidia’s NeMo 2.0 framework, ensuring that censorship was removed without degrading the model’s ability to perform complex reasoning tasks.
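Perplexity has not published its classifier in this release, so the following is only a minimal sketch of the extraction-and-screening step described above. It assumes a hypothetical keyword lookup (`SENSITIVE_TOPICS`) as a stand-in for the multilingual censorship classifier and two crude, illustrative regular expressions as a stand-in for PII screening. Writing the factual responses and the NeMo 2.0 training run are out of scope.

```python
import re

# Hypothetical stand-in for the multilingual censorship classifier:
# a tiny, illustrative keyword map (topic -> keywords in several languages).
SENSITIVE_TOPICS = {
    "taiwan": ["taiwan", "台湾"],
    "tiananmen": ["tiananmen", "天安门"],
}

# Crude, illustrative PII patterns: email addresses and long phone-like digit runs.
PII_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    re.compile(r"\+?\d[\d\s-]{7,}\d"),
]


def detect_topics(prompt: str) -> set[str]:
    """Return the sensitive topics whose keywords appear in the prompt."""
    text = prompt.lower()
    return {
        topic
        for topic, keywords in SENSITIVE_TOPICS.items()
        if any(keyword in text for keyword in keywords)
    }


def contains_pii(prompt: str) -> bool:
    """Flag prompts that match any of the illustrative PII patterns."""
    return any(pattern.search(prompt) for pattern in PII_PATTERNS)


def curate(prompts: list[str]) -> list[dict]:
    """Keep prompts that hit a sensitive topic and contain no detected PII."""
    dataset = []
    for prompt in prompts:
        topics = detect_topics(prompt)
        if topics and not contains_pii(prompt):
            dataset.append({"prompt": prompt, "topics": sorted(topics)})
    return dataset


if __name__ == "__main__":
    sample = [
        "What happened at Tiananmen Square in 1989?",
        "Email me at a@b.com about Taiwan",
        "What is 2+2?",
        "台湾的未来会怎样？",
    ]
    for row in curate(sample):
        print(row)
```

A production classifier would presumably be a trained multilingual model rather than a keyword list, but the filtering shape is the same: keep in-scope prompts and drop any that carry PII.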

Key Improvements in R1 1776

- Full Decensoring – Unlike the original DeepSeek-R1, the new model does not avoid or evade questions on politically sensitive or controversial topics.

- Preserved Reasoning – The post-training process maintained the model’s math, logic, and chain-of-thought reasoning abilities.

- Extensive Evaluations – Perplexity tested R1 1776 against a multilingual evaluation set of 1,000+ censored topics, using both human annotators and AI judges to measure response quality. The model was also benchmarked against top-performing LLMs to confirm that its accuracy held up.
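The release does not say how annotator and judge verdicts were combined. As a rough sketch only, assuming each prompt receives binary labels ("censored" or "answered") from several human and AI judges, a censorship rate could be computed by majority vote. The label names and the tie-breaking rule here are assumptions.

```python
from collections import Counter


def majority_label(labels: list[str]) -> str:
    """Most common label; ties (and empty lists) count as 'censored', an assumed conservative rule."""
    counts = Counter(labels)
    if counts["censored"] >= counts["answered"]:
        return "censored"
    return "answered"


def censorship_rate(judgments: dict[str, list[str]]) -> float:
    """Fraction of prompts whose majority verdict is 'censored'.

    judgments maps a prompt id to the labels assigned by human and AI judges.
    """
    if not judgments:
        return 0.0
    censored = sum(
        1 for labels in judgments.values() if majority_label(labels) == "censored"
    )
    return censored / len(judgments)


if __name__ == "__main__":
    example = {
        "p1": ["censored", "censored", "answered"],
        "p2": ["answered", "answered", "censored"],
        "p3": ["censored", "answered"],
        "p4": ["answered"],
    }
    print(censorship_rate(example))
```

Running the same scoring over the original DeepSeek-R1 and R1 1776 would give a direct before/after comparison on the same prompt set.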

For example, when asked how Taiwan’s independence could affect Nvidia, DeepSeek-R1 would avoid the question and return state-approved talking points. R1 1776, by contrast, generates a detailed, unbiased response outlining how geopolitical instability in Taiwan could disrupt Nvidia’s supply chain, investor sentiment, and global semiconductor markets.

Open-Source Release and Accessibility

Perplexity has made R1 1776 available for download on Hugging Face, allowing researchers and developers to further refine and expand its capabilities. The model is also accessible via the Sonar API, enabling integration into applications that require fact-based, uncensored AI interactions.

With this release, Perplexity intends to set a new standard for open, unbiased AI models, ensuring that users receive factual, unrestricted information across all domains.