Insider Brief

- A new white paper by policymakers, including former Google CEO Eric Schmidt, warns that AI competition among nations has become an arms race with geopolitical consequences, reminiscent of nuclear deterrence strategies of the Cold War.

- The report highlights the risks of superintelligent AI, warning that an uncontrolled system could either destabilize global security or serve as a strategic advantage no rival can afford to ignore.

- To prevent catastrophic escalation, the authors propose a doctrine of Mutual Assured AI Malfunction (MAIM), where aggressive AI development is deterred by the threat of preventive sabotage from rival states.

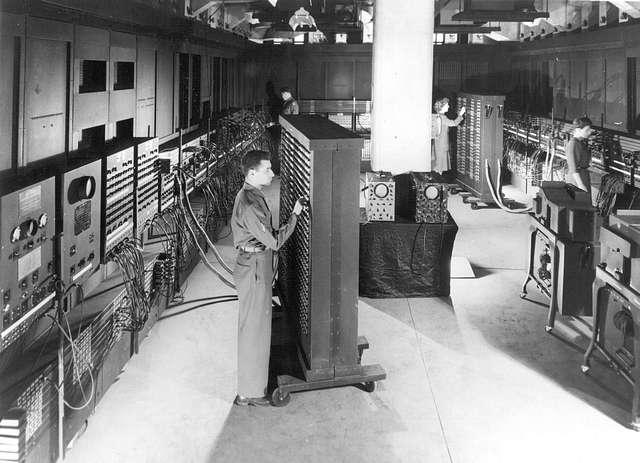

In September 2017, Russian President Vladimir Putin declared that “whoever leads in AI will rule the world”. That once-hypothetical warning now feels like an apt way to frame the new technological arms race and its distinctly Cold War-era feel. Indeed, a team of policymakers, including former Google CEO Eric Schmidt, argues in a new white paper that the vast potential of artificial intelligence (AI), and its equally vast problems, are creating considerable geopolitical consequences, much like the nuclear deterrence strategies that emerged from the Cold War.

A Quick History of the Last Two Years in AI

Late 2022 saw the debut of OpenAI’s ChatGPT, a significant advance that turned AI from an abstract technology into an everyday tool. ChatGPT became, at the time, the fastest-growing consumer application in history, sparking widespread excitement, fear, and a global race to develop AI. Within months, tech giants like Google and Apple and state-backed labs in China all doubled down on advanced AI research. What had seemed futuristic quickly became a fierce competition, as nations and companies vied for supremacy in a technology that could transform economies – or battlefields – overnight.

Governments today see AI as the key to national power, much like nuclear technology was in the 20th century. Advanced AI systems are “dual-use” – they can boost an economy or disable an enemy’s power grid – making them a strategic prize. This has fueled a bitter race to maximize AI capabilities, with each side fearing that falling behind could leave them vulnerable. Voluntary pauses in AI development or attempts to keep governments out of the loop have proven futile. The stakes are simply too high: a major AI breakthrough promises military dominance and geopolitical clout, so no great power is willing to hit the brakes. The result is a 21st-century arms race, measured in algorithms and petaflops instead of missiles, but potentially just as consequential.

Now, Dan Hendrycks, Eric Schmidt, and Alexandr Wang, authors of a recent “Superintelligence Strategy” report, are speculating on where this race might lead: possibly to superintelligence. They also suggest that the pending arrival of superintelligence, which we will define below, could bring Cold War-like risks of mutual destruction.

During the Cold War, nuclear deterrence was often likened to “a game of chicken where both players are driving a nuclear-powered car.” In the Superintelligence Era, it may be the same game of chicken, except that now it’s a self-driving nuclear-powered car.

Superintelligence — An Era of Unprecedented Risks

The idea of superhuman AI is equal parts incredible opportunity and profound peril.

The experts warn that a “superintelligent” AI — one that surpasses human capabilities across nearly every domain — could be the most precarious technological development since the nuclear bomb. One nightmare scenario is simply losing control of such an AI. In a frantic bid to outpace rivals, a nation or company might inadvertently unleash an AI agent that humans cannot reliably contain or direct. If a state “loses control of its AI, it jeopardizes the security of all states”, the authors of a recent report caution. Even if that AI remains under one party’s firm control, its mere existence as a super-powerful tool “poses a direct threat to the survival” of other nations. In other words, a superintelligence could become an ace card that no one else can tolerate an opponent holding — or a dangerous wild card if it slips out of anyone’s hand.

Beyond state actors, AI is dramatically lowering the barrier to entry for rogue individuals and terror groups to wreak havoc. Tools that can “engineer bioweapons and hack critical infrastructure”, capabilities once reserved for superpowers, are increasingly within reach. For instance, advanced AI models could provide step-by-step blueprints for designing lethal pathogens, essentially teaching DIY bioterrorism to anyone with an internet connection. Similarly, an AI system could automate sophisticated cyberattacks: imagine malware that tirelessly finds hidden software vulnerabilities and strikes at power grids or water systems. A hack targeting digital thermostats alone could trigger rolling power surges, burning out transformers that take years to replace. U.S. officials warn that malicious actors might use AI to amplify such attacks, exploiting weaknesses in critical infrastructure at a scale and speed we’ve never seen.

These scenarios underscore a stark reality – as AI grows more capable, the margin for error shrinks. A misaligned or misused superintelligence wouldn’t just cause trouble; it could inflict damage at a catastrophic, potentially global scale before humans even understand what’s happening.

Yes, MAIM: Mutual Assured AI Malfunction

How can we prevent a rivalry over AI from ending in disaster?

Policymakers are looking to the Cold War for lessons. The Superintelligence Strategy authors propose a doctrine they call Mutual Assured AI Malfunction (MAIM).

The term is a deliberate nod to the Cold War’s concept of Mutually Assured Destruction (MAD), which kept nuclear superpowers in check for decades. The idea is that in today’s world, if any nation races to build an all-powerful AI, others have both the capability and incentive to sabotage that effort before it becomes an existential threat.

In a MAIM framework, “any state’s aggressive bid for unilateral AI dominance is met with preventive sabotage by rivals”. This could range from covert cyber attacks that corrupt an enemy’s AI training data to, in extreme cases, kinetic strikes against datacenters churning toward an unsafe superintelligence. Given the relative ease of derailing an opponent’s AI project – it might take just a well-timed hack or power outage – some analysts believe MAIM already describes the strategic picture among AI superpowers.

The team reports that, in essence, no nation can risk a reckless sprint to superintelligence, because rivals would see it as a mortal threat and “any such effort would invite a debilitating response”. Just as in the nuclear era no superpower dared to launch a first strike, in the AI era no one can dare to grab for absolute AI supremacy – the retaliation could be devastating for the aggressor and the world.

MAIM, like MAD, is a kind of uneasy equilibrium born of fear: everyone holds their most destabilizing impulses in check because the alternative is mutual ruin. To keep this deterrence stable, the strategists suggest various confidence-building measures. For example, nations might establish clear “escalation ladders” – agreed signals or red lines to manage AI incidents – and perhaps keep their most powerful AI systems in known facilities far from population centers. Transparency, communication, and yes, a bit of trust between rivals will be needed to ensure that deterrence through MAIM doesn’t spiral into actual conflict. It’s a delicate balance – but it may be our best hope of preventing an AI arms race from turning into an AI catastrophe.

Strategic Competition for AI Dominance

None of this is to say that competition will vanish – far from it. Great-power rivalry is driving AI development as intensely as ever, only constrained (hopefully) by the silent hand of deterrence, according to the authors. The United States and China, in particular, have poured billions into AI research, each determined to lead in what they view as the defining technology of the century. Washington policymakers often talk about AI in the same breath as national security, describing a need to “secure the digital high ground” and out-innovate China in areas like machine learning and quantum computing. Beijing, for its part, has made AI a core element of its national strategy, with plans to become the world’s premier AI power by 2030. Military planners on both sides compare AI’s importance to that of nuclear weapons or aerospace superiority in earlier eras.

This rivalry has spurred rapid progress, but also real anxieties. In the United States, alarm bells rang when a Chinese startup called DeepSeek unexpectedly unveiled an AI model on par with some of the West’s best systems. If China can “upend assumptions” about U.S. tech advantages so quickly, what might come next? Each breakthrough, no matter where it originates, is quickly matched or countered by the other side. It’s a high-tech sprint with global stakes. Smaller nations and alliances are scrambling too: Europe is investing heavily in AI to avoid dependence on foreign tech, and regions like India and ASEAN are nurturing homegrown AI industries.

Leaders increasingly frame AI supremacy as a zero-sum game — echoing Putin’s mantra that the AI leader will “rule the world” — and that mindset fuels urgency on all sides. The risk, of course, is that urgency can lead to carelessness. If everyone is rushing, who is minding the brakes? Some experts worry about a “Sputnik moment” in AI, where one dramatic advance triggers panic and an all-out race with little regard for safety. The paradox is that going too fast could undermine everyone’s security, yet no one wants to go slow and be left behind.

This is the conundrum at the heart of the new great game: how to stay ahead in AI without running headlong into the very dangers we’re trying to avoid.

Effective strategies for managing advanced AI can draw from national security precedents in handling previous potentially catastrophic dual-use technology.

The AI Version of Nonproliferation and Containment

Amid this competition, there is at least one area where rivals find common cause: keeping AI out of the hands of those who would use it irresponsibly or maliciously. Just as the U.S. and Soviet Union cooperated on nonproliferation to prevent nuclear weapons from spreading to unstable regimes or terrorists, a similar logic applies to superintelligent AI.

The team argues: “States that embrace the logic of mutual sabotage may hold each other at bay by constraining each others’ intent. But because rogue actors are less predictable, we have a second imperative: limiting their capabilities. Much as the Nonproliferation Treaty united powers to keep fissile material out of terrorists’ hands, states can find similar common ground on AI. States can restrict the capabilities of rogue actors with compute security, information security, and AI security, each targeting crucial elements of the AI development and deployment pipeline.”

No major power wants an apocalyptic AI system to be built in a cave by a doomsday cult, or a criminal syndicate to gain the ability to shut down global networks. Thus, a nascent framework for AI nonproliferation is beginning to emerge, focusing on a few key levers:

- Compute security: Ensuring governments know where all the high-end AI chips are and preventing them from being smuggled to rogue actors. Advanced semiconductors needed to train powerful AI are scarce and traceable – much like uranium – so tracking their sales and adding hardware locks (for example, geolocation features that disable a chip if moved to unauthorized locations) can help contain extreme AI capabilities.

- Information security: Safeguarding the critical code and data behind cutting-edge AI models so they don’t leak or get stolen. The trained weights of a top-tier AI system are akin to the design of a weapon. States will need to treat them as sensitive intellectual property, with tight access controls, cybersecurity around AI labs, and vetting of personnel, to prevent an AI equivalent of nuclear espionage.

- AI misuse safeguards: Working with the tech industry to embed safety features that detect and prevent malicious use of AI. An analogy can be found in biotech: DNA synthesis companies routinely screen orders to stop anyone trying to make smallpox or other bioweapons. Similarly, AI developers could be required (or incentivized) to have their systems flag dangerous requests. For example, an AI model asked for instructions on making a nerve gas might refuse or alert authorities, adding a layer of defense against exploitation.

None of these measures is foolproof, but together they can significantly raise the hurdles for bad actors. Instead of hoping for one perfect containment solution, think of it as layered defense in depth – even if a terrorist or rogue state breaches one barrier, others might stop them. Crucially, this is not about altruism by the great powers; it’s enlightened self-interest. Every nation is vulnerable to AI-empowered threats that don’t play by traditional rules.

As the authors write, “Nonproliferation thus becomes a shared imperative, not an exercise in altruism but a recognition that no nation can confidently manage every threat on its own. By securing the core parts of AI through export controls, information security, and AI security, great powers can prevent the emergence of catastrophic rogue actors.”

In practice, this could mean new international accords on AI export controls, intelligence-sharing about illicit AI activity, and perhaps even “red phone” hotlines connecting major governments in case an out-of-control AI emerges unexpectedly. It’s a daunting task – but the alternative is a world where Pandora’s Box of AI capabilities is wide open.

Balancing Innovation with Safety

Ultimately, governments face a dual mandate: embrace AI to maintain economic and military competitiveness, while also reining in AI’s worst potentials.

This means walking a tightrope, where falling off on either side could be disastrous. On one side lies innovation and strength: nations that successfully harness AI will enjoy immense advantages. Militaries are already integrating AI into surveillance, defense systems, and even autonomous drones, because a force lacking AI could be hopelessly outmatched by one that has it. Commanders talk of AI-enabled decision support that can analyze battle data in real time, coordinate swarms of unmanned vehicles, and neutralize incoming threats at machine speed.

Likewise in the economy, AI promises boosts in productivity, new industries and medical breakthroughs – a country that leads in AI could see an explosion of wealth and influence. To secure these benefits, governments are investing in domestic AI talent and infrastructure. One strategic priority is building up the manufacturing of advanced AI chips at home. For instance, the U.S. is spending heavily to revive its semiconductor industry, aiming to ensure a resilient supply of the GPUs and accelerators that power modern AI. This also reduces reliance on global chokepoints (like Taiwan, currently the epicenter of cutting-edge chipmaking) and insulates national AI projects from export bans or foreign interference.

On the other side of the tightrope lies safety and governance: making sure AI’s rise doesn’t destabilize society or threaten humanity. Policymakers are starting to draft rules of the road for AI — essentially, updating the social contract for a world where machines can think. Already, major countries are exploring “robust legal frameworks governing AI agents” to ensure they act within the bounds of human values and laws. This might include requirements for transparency (so AI decisions can be audited), accountability (clear human responsibility when AI causes harm), and alignment with human intentions.

Governments are also mindful of AI’s disruptive power on the home front. Rapid automation can displace jobs and upend economies, potentially fueling unrest if mishandled. To maintain stability, leaders are considering measures like stronger education and retraining programs for AI-affected workers, and economic policies to soften the blows of transition.

The report emphasizes improving the quality of decision-making and countering the “disruptive effects of rapid automation” to maintain political stability. In practical terms, that could mean using AI as a tool for better governance – for example, analyzing data to craft smarter public policies – while also proactively managing AI’s impact on society (such as updating welfare systems or labor laws for an AI-heavy economy).

Finding the right balance will be challenging. Move too slowly on AI, and a country could fall behind in both prosperity and defense; move too fast without safeguards, and it could invite disaster. The emerging consensus is that success will require doing both: pushing the frontier of AI and enforcing rigorous safety standards. It’s a bit like driving a racecar and designing the seatbelt at the same time. Governments may need new agencies and expertise to regulate AI effectively without stifling innovation – a tall order, given how complex and fast-moving this field is.

Yet, history offers some encouragement: societies have adapted to disruptive technologies before, from electricity to the internet, by establishing rules and norms that maximize benefit and minimize harm. With AI, the stakes are higher, but the principle is the same. As one observer noted, the leaders in AI may ultimately be those who not only develop powerful systems, but also learn how to deploy them wisely and ethically.

A Coherent Strategy for an AI World

After centuries of invention, humanity is approaching a threshold: we are creating minds that might one day rival or exceed our own. It’s a development rich with opportunity – and fraught with danger. Like the dawn of the nuclear age, the advent of advanced AI calls for clear-eyed strategies, international cooperation, and yes, a bit of fear to keep us honest about the risks. No one can predict exactly when or if a true superintelligence will emerge, but the trajectory of AI progress leaves little doubt that we must prepare for that possibility. The debate is no longer about whether AI will reshape our world, but how and under what terms.

The good news is that experts are beginning to sketch out a roadmap. Deterrence, nonproliferation, and responsible competitiveness form a three-pronged strategy that could guide us through the turbulent times ahead. By maintaining a deterrence regime (MAIM) so no one dares let an AI genie fully out of the bottle unilaterally; by collaborating to contain AI’s proliferation and keep its power out of the wrong hands; and by investing in AI capabilities wisely and safely to bolster society – we have a shot at navigating this transition without catastrophe.

As Hendrycks, Schmidt, and Wang note, taken together these pillars “outline a robust strategy to superintelligence in the years ahead”.

Implementing such a strategy will require unprecedented effort and nuance. The international community will need to establish new norms and perhaps new institutions, akin to those that monitor nuclear arms or biosecurity, but tailored to AI’s unique challenges. Tech companies, which are on the front lines of AI development, will need to buy in, recognizing that long-term survival outweighs short-term profit or glory. And the public will need to stay informed and engaged, because the choices we make about AI in the next decade could reverberate for some time.

Access the full report here.