Insider Brief

- Large language models are becoming core components of enterprise systems in 2025, with organizations adopting them to automate knowledge work, improve efficiency, and enhance decision-making across sectors.

- Enterprises face key decisions around open-source versus proprietary models, deployment architecture, customization techniques like RAG and fine-tuning, and compliance with growing AI governance standards.

- Success depends on aligning LLM adoption with internal data readiness, infrastructure capabilities, and responsible AI practices, using structured frameworks like AI Insider’s Seven-Layer AI Stack.

Large language models (LLMs) are no longer experimental tools. In 2025, they are becoming embedded in the core infrastructure of enterprise IT systems, reshaping how companies interact with data, customers and employees.

Enterprise interest in LLMs has shifted from curiosity to implementation, a trend that guided the creation of AI Insider’s Seven-Layer AI Stack framework. These models, capable of generating fluent text, summarizing documents, answering questions, and even writing code, are being integrated across sectors including banking, healthcare, legal services, and logistics. As the market matures, organizations must choose among dozens of models and deployment options, each with different trade-offs in security, performance, cost and flexibility.

How to Understand LLM Use in The Enterprise Context

Large language models are trained on massive amounts of text data and use statistical patterns to generate human-like language. While most consumers interact with these models through chatbots, enterprise uses are far broader. LLMs are being deployed for tasks such as automated compliance monitoring, internal knowledge search, document summarization, and customer service.

However, LLMs come with challenges. They can produce incorrect answers, known as hallucinations, and their behavior can change unpredictably over time. This makes governance, transparency and version control essential in business environments where accuracy and consistency matter.

What Are The Pros And Cons of Open-Source vs Proprietary LLMs?

The first major decision most enterprises face is whether to adopt open-source or proprietary models.

Open-source models, such as Meta’s LLaMA and the Mistral family, offer transparency and customization. Enterprises can run them on their own servers, fine-tune them with company data, and fully control their behavior. This makes them attractive to organizations with high security needs, such as financial institutions or defense contractors. However, open-source models often require more internal expertise to deploy and maintain.

Proprietary models from companies like OpenAI, Anthropic, and Google provide strong performance and ease of use. These models are typically accessed via API and come with support and regular updates. But they raise concerns about vendor lock-in, unpredictable pricing, and limited visibility into the training data and model behavior.

The decision often hinges on the company’s use case, sensitivity of the data, and in-house technical capabilities. Some businesses are adopting hybrid strategies, using open models for internal tools and proprietary models for customer-facing applications.

Deployment Options and Trade-Offs

Enterprises have several choices in how they deploy LLMs: public API, virtual private cloud (VPC), or fully on-premise.

Public APIs offer ease of integration but raise concerns about data privacy and latency. For firms handling regulated or sensitive data, a VPC deployment—where the model runs in a segregated cloud environment—may offer a middle ground. On-premise installations, while expensive and complex, offer the highest degree of control and compliance.

Key decision factors include compliance requirements (e.g., HIPAA or GDPR), latency needs, and the location of data storage. For example, firms in Europe may prioritize keeping data within EU borders, which limits the choice of providers.
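As a rough illustration, the decision factors above can be written as a simple rule of thumb. The sketch below is hypothetical: the `Workload` fields and the ordering of the rules are assumptions made for illustration, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    handles_regulated_data: bool   # e.g. data covered by HIPAA or GDPR
    requires_data_residency: bool  # data must stay within a given region
    needs_full_control: bool       # the organization must own the whole stack


def suggest_deployment(w: Workload) -> str:
    """Illustrative rule of thumb only; real decisions also weigh cost and legal review."""
    if w.needs_full_control:
        return "on-premise"
    if w.handles_regulated_data or w.requires_data_residency:
        return "vpc"
    return "public-api"


# Example: a European firm that must keep data inside the EU.
choice = suggest_deployment(Workload(False, True, False))
```

In practice these rules would be one input among several, alongside latency targets and provider availability in the required region.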

How to Customize LLMs: Prompt Engineering, Fine-Tuning and RAG

Tailoring LLMs for specific business tasks can be done through three main methods: prompt engineering, fine-tuning, and retrieval-augmented generation (RAG).

Prompt engineering involves crafting precise instructions to guide the model’s behavior. This method is fast and requires no changes to the underlying model, but its effectiveness can be inconsistent.
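A minimal sketch of what this can look like in practice is a reusable prompt template. The template wording, the `build_prompt` helper, and the example company are all hypothetical and chosen only for illustration.

```python
# Hypothetical prompt template; the wording and field names are illustrative only.
SUPPORT_PROMPT = (
    "You are a support assistant for {company}.\n"
    "Answer only from the policy excerpt below. "
    "If the excerpt does not cover the question, reply 'I don't know'.\n\n"
    "Policy excerpt:\n{policy}\n\n"
    "Customer question:\n{question}\n"
)


def build_prompt(company: str, policy: str, question: str) -> str:
    """Fill the template; the underlying model is left untouched."""
    return SUPPORT_PROMPT.format(
        company=company, policy=policy.strip(), question=question.strip()
    )


prompt = build_prompt(
    "Acme Corp",
    "Refunds are issued within 14 days.",
    "How long do refunds take?",
)
```

The resulting string would be sent to whichever model the team has chosen. Changing behavior means editing the template, not the model.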

Fine-tuning involves further training the model on company-specific data. This provides more predictable performance on the target task but is costly and may lead to overfitting. It also creates the need to manage multiple model versions.

RAG is gaining popularity as a compromise. Instead of retraining, the model is connected to a document database. When a user asks a question, the system retrieves relevant documents and feeds them to the model alongside the question. This boosts factual accuracy without altering the model itself.
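The retrieve-then-prompt flow can be sketched in a few lines. This is a toy example under stated assumptions: the in-memory `DOCUMENTS` dictionary stands in for a document database, and simple word overlap stands in for the embedding or keyword search a production system would likely use. The call to the model itself is omitted.

```python
import re
from collections import Counter

# Toy in-memory "document database"; a real system would use a search index or vector store.
DOCUMENTS = {
    "pump-manual": "Reset the pump by holding the red button for five seconds.",
    "hr-policy": "Employees accrue vacation days monthly.",
    "conveyor-manual": "Lubricate the conveyor belt bearings every 200 hours.",
}


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for real search)."""
    q = tokenize(question)
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc_id: sum((q & tokenize(DOCUMENTS[doc_id])).values()),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(question: str) -> str:
    """Place the retrieved text in the prompt so the model can ground its answer."""
    context = "\n".join(DOCUMENTS[d] for d in retrieve(question))
    return f"Use only this context to answer.\n\nContext:\n{context}\n\nQuestion: {question}"


rag_prompt = build_rag_prompt("How do I reset the pump?")
```

Because the knowledge lives in the document store, updating an equipment manual changes the answers without any retraining.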

Different industries are adopting different strategies. A law firm might fine-tune a model on case law, while a manufacturer might use RAG to let an LLM access equipment manuals during troubleshooting.

Mapping Enterprise LLM Adoption to AI Insider’s Seven-Layer AI Stack Framework

Enterprises adopting LLMs can benefit from viewing their integration strategy through the lens of AI Insider’s Seven-Layer AI Stack Framework:

- Hardware & Datacenter Enablers – This foundational layer involves the physical infrastructure for AI workloads, including GPUs, networking, and data storage systems. Effective LLM deployment requires scalable and high-performance compute environments.

- Data Structure & Processing – Before model use, enterprises must ensure their data is cleaned, structured, and machine-readable. This layer is critical for enabling effective LLM performance.

- Model Development & Deployment – This includes model selection, fine-tuning, and deploying to production. It aligns with decisions around open-source vs proprietary models and strategies like RAG.

- Inference – Once deployed, LLMs must respond to real-time user inputs with acceptable speed and accuracy. Optimizing inference is key for enterprise usability.

- Orchestration – LLM applications often involve multiple tools. Orchestration systems manage workflows, prompt routing, and integrations with enterprise software.

- Tooling – This layer provides MLOps frameworks and development environments necessary to iterate, monitor, and manage LLM systems.

- Security & Governance – With sensitive data in play, enterprises must enforce access control, audit logging, and responsible AI practices. This layer ensures ethical and compliant use of LLMs.

By aligning LLM strategy with these layers, companies gain a clearer understanding of the interdependencies across infrastructure, models, and business goals.

Which LLM Performance Metrics Matter For Enterprises?

While academic benchmarks like MMLU or BIG-bench have shaped perceptions of model quality, enterprises are beginning to define their own performance metrics.

Common metrics now include response latency, cost per output token, factual retrieval accuracy, and hallucination rate. Companies also track task-specific metrics, such as the percentage of correctly classified support tickets or the number of hours saved by automating contract review.
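Several of these metrics can be computed directly from interaction logs. The sketch below assumes a hypothetical `Interaction` record whose `hallucinated` flag has already been set by a human reviewer or an automated checker. How that flag is produced is outside the scope of this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    latency_s: float      # time from request to full response
    output_tokens: int    # tokens generated by the model
    cost_usd: float       # what the call cost
    hallucinated: bool    # set by a reviewer or an automated checker


def summarize(logs: list[Interaction]) -> dict[str, float]:
    """Aggregate latency, cost per output token, and hallucination rate."""
    if not logs:
        raise ValueError("at least one interaction is required")
    total_tokens = sum(i.output_tokens for i in logs)
    return {
        "mean_latency_s": mean(i.latency_s for i in logs),
        "cost_per_output_token_usd": sum(i.cost_usd for i in logs) / total_tokens,
        "hallucination_rate": sum(i.hallucinated for i in logs) / len(logs),
    }


metrics = summarize([
    Interaction(latency_s=1.0, output_tokens=100, cost_usd=0.002, hallucinated=False),
    Interaction(latency_s=3.0, output_tokens=300, cost_usd=0.006, hallucinated=True),
])
```

Tracking the same summary for each candidate model during a pilot gives a like-for-like comparison on the company's own workload.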

Public evaluation platforms have also emerged that enterprises can draw on, such as the LMSYS Chatbot Arena, which ranks open and closed models through head-to-head comparisons, and HELM from Stanford, which evaluates models across a standardized set of scenarios.

Real-World Enterprise Use Cases

A growing number of companies are publishing results from internal LLM pilots.

In insurance, LLMs are being used to draft first-pass claim assessments by extracting key facts from photos and documents. In banking, models serve as internal assistants that search policy manuals or regulatory guidance. In pharmaceutical firms, LLMs summarize medical literature to support R&D.

Even industries slow to adopt new technology, such as manufacturing, are testing LLMs in maintenance and troubleshooting roles. Here, a retrieval-based system allows frontline workers to query decades of equipment documentation in plain language.

What’s Next? Agents, Multimodality, and Auto-Governance

The next evolution of enterprise LLMs is already taking shape.

First, models are becoming multimodal. This means they can accept and generate not just text, but also images, audio, and eventually video. This unlocks use cases in design, diagnostics, and digital twin systems.

Second, the rise of agentic systems means LLMs will no longer just answer questions but will take actions—triggering workflows, booking meetings, or updating systems. These capabilities raise new questions about oversight, liability, and human-in-the-loop requirements.
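One common way to keep a human in the loop is an approval gate in front of risky actions. The sketch below is hypothetical: the action names, the `HIGH_RISK_ACTIONS` set, and the `approve` callback are assumptions for illustration, and the actual call to the target system is left out.

```python
from typing import Callable

# Hypothetical action names; which actions count as high-risk is an assumed policy choice.
HIGH_RISK_ACTIONS = {"update_system", "trigger_workflow"}


def execute_action(
    action: str,
    payload: dict,
    approve: Callable[[str, dict], bool],
) -> str:
    """Run low-risk actions directly; route high-risk ones through a human approver."""
    if action in HIGH_RISK_ACTIONS and not approve(action, payload):
        return f"blocked: {action} was not approved"
    # A real agent would call the target system here; this sketch only reports the decision.
    return f"executed: {action}"


# Example approver that rejects everything, standing in for a human reviewer.
result = execute_action("update_system", {"record": 42}, lambda a, p: False)
```

Low-risk actions such as booking a meeting pass straight through, while anything on the high-risk list waits for an explicit decision.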

Third, a new layer of software is emerging around auto-governance. These tools monitor model outputs in real time, check for compliance violations, and even rewrite prompts or responses to ensure alignment with policy.
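A very simplified version of such a check is a filter that scans each response before it reaches the user. The sketch below assumes a hypothetical policy that responses must not expose email addresses or US-style Social Security numbers. Real governance tools cover far more than pattern matching.

```python
import re

# Assumed policy: responses must not expose email addresses or US-style SSNs.
POLICY_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def enforce_policy(response: str) -> tuple[str, list[str]]:
    """Return the redacted response and the names of any policies it violated."""
    violations = []
    for name, pattern in POLICY_PATTERNS.items():
        if pattern.search(response):
            violations.append(name)
            response = pattern.sub(f"[REDACTED {name.upper()}]", response)
    return response, violations


clean, found = enforce_policy("Contact jane.doe@example.com, SSN 123-45-6789.")
```

The list of violations can feed the audit trail, while the redacted text is what the user actually sees.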

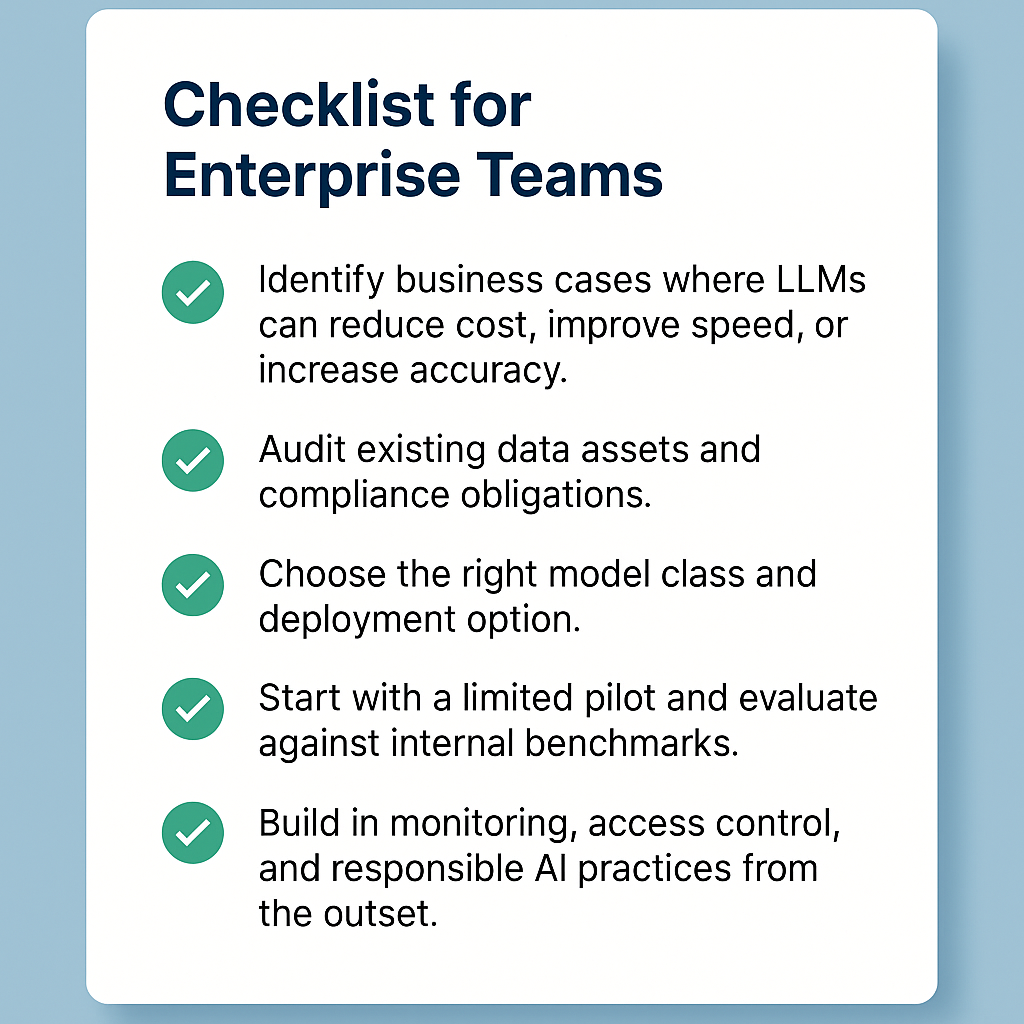

A Checklist for Enterprise Teams

For enterprises still exploring how to integrate LLMs into their business processes, here’s a five-step checklist that can help you start your project, reduce risk and accelerate value:

- Identify business cases where LLMs can reduce cost, improve speed, or increase accuracy. Start by reviewing operational bottlenecks, repetitive cognitive tasks, and areas with frequent human error. Prioritize departments where automation can lead to immediate efficiency gains, such as customer service, compliance, or finance.

- Audit existing data assets and compliance obligations. Determine what internal data is available, how it is structured, and whether it is suitable for training or querying. Simultaneously, map out applicable legal requirements, industry regulations, and internal policies that govern data access and model use.

- Choose the right model class and deployment option. Based on use case complexity, latency needs, and sensitivity of data, decide between open-source or proprietary models and select a deployment path (API, VPC, or on-premise) that aligns with your risk tolerance and infrastructure readiness.

- Start with a limited pilot and evaluate against internal benchmarks. Select a controlled use case with clear metrics (e.g., time saved, cost reduced, accuracy improvement). Use this as a testbed to gather performance data and stakeholder feedback.

- Build in monitoring, access control, and responsible AI practices from the outset. Develop logging and audit trails to track model use, implement role-based access to reduce misuse, and embed fairness and transparency principles in the design and deployment phases. This helps ensure trust, traceability, and long-term maintainability.
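The final step, role-based access combined with an audit trail, can be sketched with Python's standard `logging` module. The roles, permissions, and logger name below are hypothetical and would be replaced by the organization's own identity and logging systems.

```python
import logging

# Logger name is an assumption; handlers and storage would be configured elsewhere.
audit_log = logging.getLogger("llm.audit")

# Hypothetical roles and permissions for illustration.
ROLE_PERMISSIONS = {
    "analyst": {"query"},
    "admin": {"query", "fine_tune"},
}


def authorize(user: str, role: str, operation: str) -> bool:
    """Check role-based access and record every decision in the audit trail."""
    allowed = operation in ROLE_PERMISSIONS.get(role, set())
    audit_log.info(
        "user=%s role=%s operation=%s allowed=%s", user, role, operation, allowed
    )
    return allowed


can_query = authorize("ana", "analyst", "query")
```

Logging denied requests as well as approved ones is a deliberate choice here, since both are useful when tracing how a model was used.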

As AI systems grow more complex and interconnected, enterprises need structured frameworks and clear market intelligence to guide decisions. AI Insider offers research and advisory support to help organizations navigate the full AI stack, identify strategic opportunities, and manage risk.

Our mission is to empower leaders with the clarity and foresight needed to build resilient, scalable, and future-ready AI systems.


For more information, or to connect with our analysts, contact us directly at hello@resonance.holdings