Insider Brief

- A study from Waseda University proposes that people exhibit attachment-like behaviors toward AI, such as seeking emotional support or maintaining distance, paralleling patterns seen in human relationships.

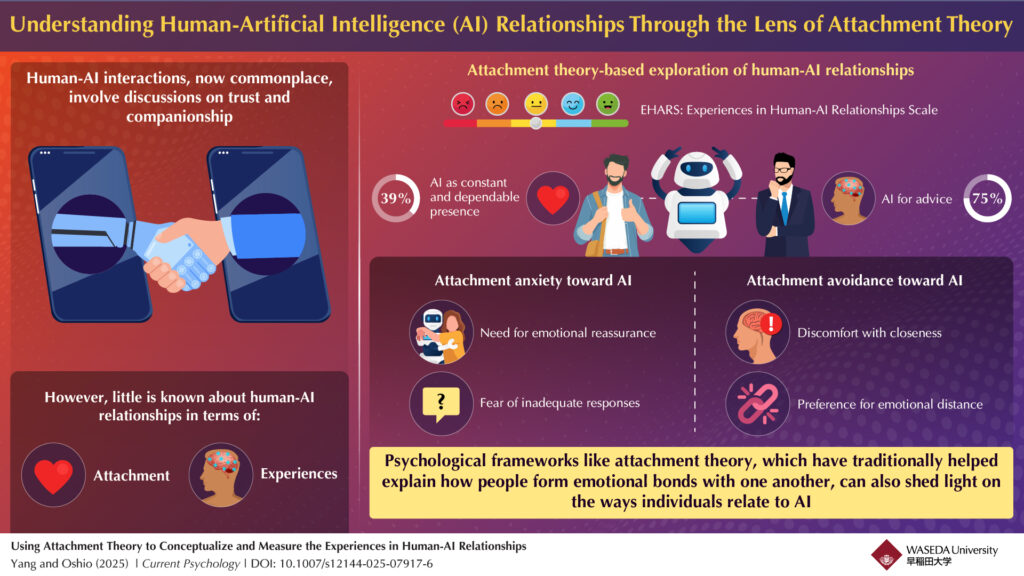

- Researchers developed a new tool, the Experiences in Human-AI Relationships Scale (EHARS), which revealed that 75% of users turn to AI for advice and 39% view it as a dependable presence.

- The findings suggest psychological models like attachment theory can guide ethical AI design, especially for mental health tools and companion technologies, while raising questions about emotional overdependence and manipulation.

As artificial intelligence becomes a fixture in daily life, researchers say people may begin to relate to AI not only as a tool, but as an emotional presence.

A study from Waseda University in Japan offers a new framework for understanding how humans interact with AI, suggesting that principles from attachment theory—typically used to explain emotional bonds between people—can also help explain how people respond to intelligent machines. According to Waseda, the findings, published in the journal Current Psychology, propose that patterns of attachment anxiety and avoidance seen in human relationships also appear in how people engage with AI systems.

The research team, led by Research Associate Fan Yang and Professor Atsushi Oshio of the Faculty of Letters, Arts and Sciences, developed a novel measure called the Experiences in Human-AI Relationships Scale (EHARS). In surveys of AI users, they found that around 75% had turned to AI systems for advice, and about 39% viewed AI as a dependable, consistent presence. According to the university, the researchers identified two dimensions of this behavior. The first is attachment anxiety, which involves a need for emotional reassurance and worry about receiving unsatisfactory responses from AI. The second is attachment avoidance, which involves discomfort with closeness to AI and a preference for keeping emotional distance from it.

“In recent years, generative AI such as ChatGPT has become increasingly stronger and wiser, offering not only informational support but also a sense of security,” Yang noted. “These characteristics resemble what attachment theory describes as the basis for forming secure relationships. As people begin to interact with AI not just for problem-solving or learning, but also for emotional support and companionship, their emotional connection or security experience with AI demands attention. This research is our attempt to explore that possibility.”

Researchers indicate these tendencies do not imply that users are forming true emotional attachments to machines. Instead, the researchers argue that familiar psychological models may be useful in understanding human-AI dynamics. The study builds on past work that examined trust and companionship but shifts the lens to emotional needs and behavioral patterns.

This research could influence how developers build AI companions or support tools for mental health. By accounting for different attachment tendencies, designers might tailor chatbots or digital assistants to offer more appropriate forms of emotional support—whether through empathetic interaction for those with high anxiety, or more reserved responses for users with avoidant tendencies.

The study also points to ethical questions, particularly for AI systems that mimic human relationships, such as romantic chatbots or elder care robots. Researchers suggest transparent design and limits on emotional simulation may be necessary to avoid overdependence or manipulation.

Drawing on two pilot studies and a main survey, the research establishes a baseline for future efforts to integrate emotional assessment into AI design. The researchers explained that the EHARS tool itself may serve both developers and psychologists interested in evaluating user behavior and adjusting AI systems accordingly.

“As AI becomes increasingly integrated into everyday life, people may begin to seek not only information but also emotional support from AI systems,” Yang pointed out. “Our research highlights the psychological dynamics behind these interactions and offers tools to assess emotional tendencies toward AI.”

“Lastly, it promotes a better understanding of how humans connect with technology on a societal level, helping to guide policy and design practices that prioritize psychological well-being,” Yang added.