Insider Brief

- Backed by the U.S. National Science Foundation and other academic funders, researchers from MIT and Stanford developed SketchAgent, an AI system that generates human-like sketches from text prompts using a stroke-by-stroke process.

- Unlike previous systems, SketchAgent uses a custom drawing language rather than human-drawn datasets, enabling it to sketch abstract concepts and collaborate with humans in real time.

- The system, powered by Claude 3.5 Sonnet, outperformed versions built on other models such as GPT-4o in drawing clarity. It still struggles with complex shapes and precision, and the researchers plan interface improvements and synthetic data training to boost performance.

A new AI system draws like a human, opening new possibilities for sketch-based collaboration between people and machines.

Backed by the U.S. National Science Foundation, the U.S. Army Research Laboratory, and Stanford University’s Hoffman-Yee Grant, researchers from MIT and Stanford have created “SketchAgent,” a system that can turn natural language into sketches using a step-by-step drawing process that mimics how humans doodle. According to MIT, the tool is part of a broader research effort to make AI more intuitive and multimodal, allowing it to interact not just with text but with visuals in real time.

“Not everyone is aware of how much they draw in their daily life,” noted CSAIL postdoc Yael Vinker, who is the lead author of a paper introducing SketchAgent. “We may draw our thoughts or workshop ideas with sketches. Our tool aims to emulate that process, making multimodal language models more useful in helping us visually express ideas.”

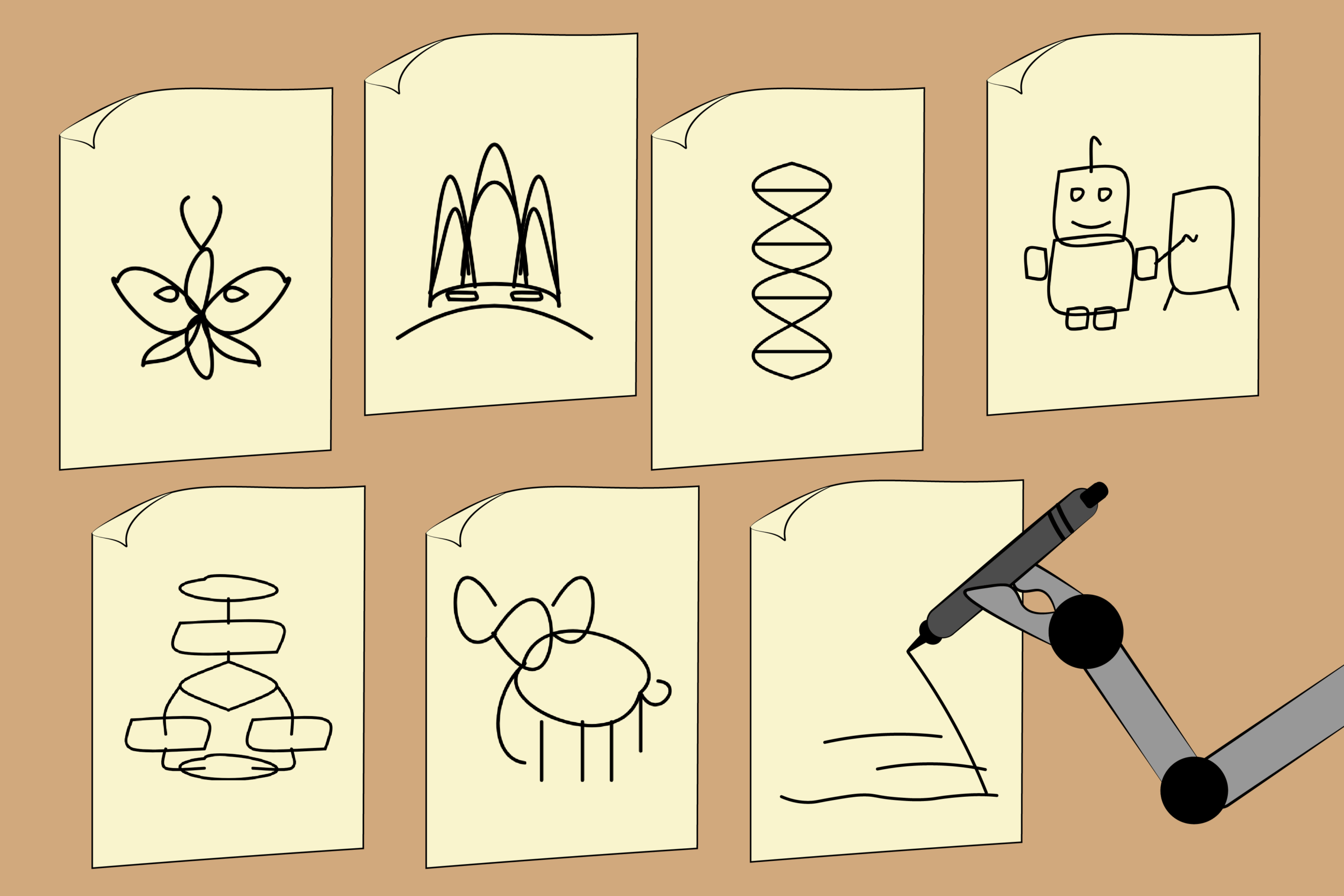

SketchAgent was designed without relying on human-drawn datasets. Instead, the team developed a custom sketching language that breaks down each concept into numbered strokes on a grid. These labeled strokes were used to teach the model how to draw items such as houses, robots, and abstract symbols like DNA helices. Using this framework, the researchers adapted large language models like Claude 3.5 Sonnet to generate line-by-line drawings from simple text descriptions.
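The idea of numbered, labeled strokes on a grid can be illustrated with a minimal Python sketch. This is not SketchAgent's actual sketching language, whose syntax is not given here. The `Stroke` class, the 50-cell grid, the pixel scale, and the house example are all assumptions made for illustration.

```python
from dataclasses import dataclass
from html import escape

GRID_SIZE = 50   # assumed grid resolution (hypothetical, not from the paper)
CELL_PX = 10     # assumed pixels per grid cell


@dataclass
class Stroke:
    number: int                      # drawing order
    label: str                       # what the stroke depicts
    points: list[tuple[int, int]]    # (column, row) cells, 1-indexed from bottom-left


def cell_to_pixel(col: int, row: int) -> tuple[float, float]:
    """Map a grid cell to the pixel at its center (SVG y axis points down)."""
    x = (col - 0.5) * CELL_PX
    y = (GRID_SIZE - row + 0.5) * CELL_PX
    return x, y


def strokes_to_svg(strokes: list[Stroke]) -> str:
    """Render strokes in numbered order as SVG polylines."""
    size = GRID_SIZE * CELL_PX
    lines = [f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}">']
    for s in sorted(strokes, key=lambda s: s.number):
        pixels = (cell_to_pixel(c, r) for c, r in s.points)
        pts = " ".join(f"{x:g},{y:g}" for x, y in pixels)
        lines.append(
            f'  <polyline points="{pts}" fill="none" stroke="black">'
            f"<title>{s.number}: {escape(s.label)}</title></polyline>"
        )
    lines.append("</svg>")
    return "\n".join(lines)


# Hypothetical example: a house described stroke by stroke.
HOUSE = [
    Stroke(1, "walls", [(15, 10), (35, 10), (35, 30), (15, 30), (15, 10)]),
    Stroke(2, "roof", [(15, 30), (25, 42), (35, 30)]),
    Stroke(3, "door", [(23, 10), (23, 20), (27, 20), (27, 10)]),
]

svg = strokes_to_svg(HOUSE)
```

Because each stroke is plain text (a number, a label, and a list of grid cells), a language model can emit a sketch the same way it emits any other text, and the result converts directly into vector graphics.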

In tests, SketchAgent successfully generated sketches of concepts it hadn’t encountered before. The system also demonstrated collaborative capability, drawing alongside humans in tandem sessions. When SketchAgent’s contributions were removed from finished sketches, the resulting images were often incomplete, highlighting the model’s active role in the final design.
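The removal test described above can be sketched in a few lines of Python, assuming each stroke records who drew it. The `AuthoredStroke` class, the strict turn-taking order, and the sailboat example are hypothetical. The sketch shows only the removal step, not how the researchers judged whether the remaining image was complete.

```python
from dataclasses import dataclass
from itertools import zip_longest


@dataclass(frozen=True)
class AuthoredStroke:
    author: str   # "human" or "agent"
    label: str    # what the stroke depicts


def take_turns(human_labels: list[str], agent_labels: list[str]) -> list[AuthoredStroke]:
    """Interleave strokes, human first, as in an alternating drawing session."""
    sketch = []
    for human, agent in zip_longest(human_labels, agent_labels):
        if human is not None:
            sketch.append(AuthoredStroke("human", human))
        if agent is not None:
            sketch.append(AuthoredStroke("agent", agent))
    return sketch


def without_author(sketch: list[AuthoredStroke], author: str) -> list[AuthoredStroke]:
    """Return the sketch with one contributor's strokes removed."""
    return [s for s in sketch if s.author != author]


# Hypothetical collaborative sailboat: removing the agent's strokes drops the sail.
sailboat = take_turns(["hull", "mast"], ["sail"])
human_only = without_author(sailboat, "agent")
```

If the human-only version is missing parts a viewer needs to recognize the object, the agent's strokes were doing real work in the drawing.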

In comparisons across large multimodal models, the version of SketchAgent built on Claude 3.5 Sonnet outperformed versions built on GPT-4o and other Claude models in generating clear, recognizable vector graphics. The results suggest Claude 3.5 Sonnet is especially adept at translating textual prompts into structured visual forms.

“The fact that Claude 3.5 Sonnet outperformed other models like GPT-4o and Claude 3 Opus suggests that this model processes and generates visual-related information differently,” noted co-author Tamar Rott Shaham.

Still, the system remains limited in its ability to render complex or stylistic images. SketchAgent struggles with realistic figures, logos, and detailed drawings, and sometimes misinterprets human contributions during collaborative sketches. In one test, for instance, the model mistakenly drew a bunny with two heads, a glitch the researchers attribute to how the model plans and sequences drawing tasks.

The team is exploring ways to improve the model’s performance using synthetic data from other AI image-generation systems and aims to enhance the user interface for more seamless interaction. The long-term vision is to create a drawing-based interface that allows users to communicate with AI through visuals, not just words.

Lead author Yael Vinker and co-authors from MIT and Stanford plan to present the system at the 2025 Conference on Computer Vision and Pattern Recognition (CVPR). The work was also supported by the Zuckerman STEM Leadership Program, Hyundai Motor Company, and a Viterbi Fellowship.

“As models advance in understanding and generating other modalities, like sketches, they open up new ways for users to express ideas and receive responses that feel more intuitive and human-like,” Rott Shaham pointed out. “This could significantly enrich interactions, making AI more accessible and versatile.”