Insider Brief

- UCLA researchers created MEME, an AI system that transforms electronic health record tables into text-based pseudonotes to improve emergency room decision-making.

- Tested on more than 1.3 million ER visits, MEME outperformed traditional machine learning models and specialized healthcare AI tools.

- By processing patient data in modular narrative blocks, MEME improves accuracy, cross-hospital adaptability, and compatibility with advanced language models.

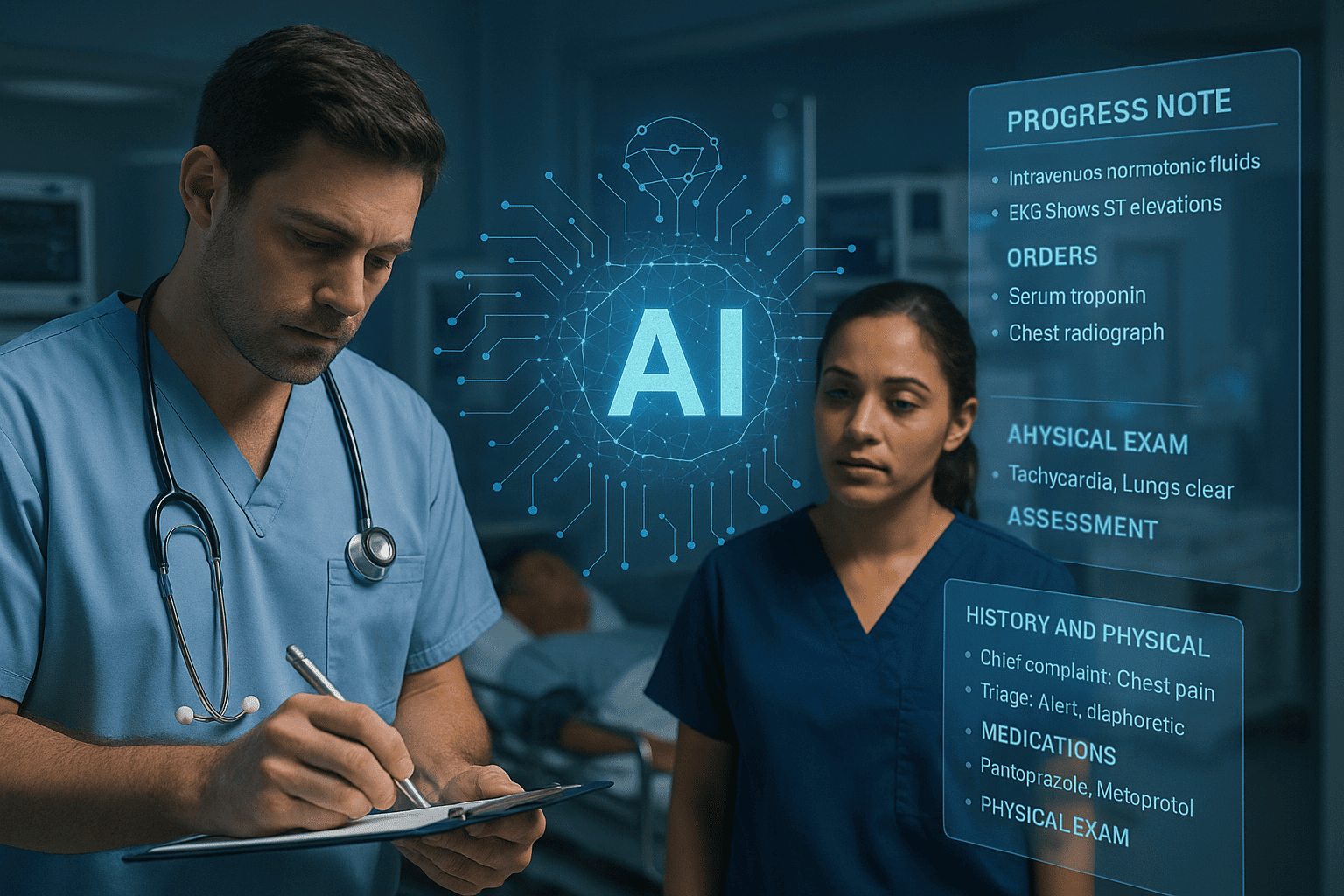

UCLA researchers have developed a new artificial intelligence system that converts fragmented patient data into readable narratives, enabling faster and more accurate clinical decision-making in emergency rooms. The project introduces a system called MEME, or Multimodal Embedding Model for EHR, which bridges a key gap between modern AI and hospital record systems.

Most AI models excel at processing written language, while electronic health records (EHRs) are stored in tables filled with codes, numbers, and categories that are difficult for these models to interpret. According to the researchers at UCLA, this mismatch has limited the usefulness of AI in clinical settings, especially in fast-paced environments like emergency departments. UCLA’s system addresses this problem by turning tabular data into text-based “pseudonotes” that resemble the summaries doctors typically write in patient files.

Researchers say the system works by organizing patient data into specific categories such as medications, lab results, diagnoses, and vitals. It then converts each category into short narrative blocks using clinical shorthand and simple sentence structures, effectively simulating how a doctor might summarize information. Each block is then fed separately into a language model, allowing the system to process multiple data streams while preserving their context.
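To make the idea concrete, here is a minimal Python sketch of how a tabular record might be turned into per-category pseudonotes. It is an illustration only, not the researchers' implementation: the record layout, the field names, the sample values, and the helpers `render_block` and `to_pseudonotes` are all hypothetical.

```python
from typing import Dict, List, Union

# Hypothetical patient record. The category names follow the article;
# the fields and values are invented for illustration.
Values = Union[List[str], Dict[str, str]]

record: Dict[str, Values] = {
    "medications": ["lisinopril 10 mg", "metformin 500 mg"],
    "labs": {"troponin": "0.02 ng/mL", "lactate": "1.1 mmol/L"},
    "diagnoses": ["type 2 diabetes", "hypertension"],
    "vitals": {"HR": "96 bpm", "BP": "142/88 mmHg", "SpO2": "97%"},
}


def render_block(category: str, values: Values) -> str:
    """Render one category of tabular data as a short narrative line."""
    if isinstance(values, dict):
        # Name/value pairs become "name value" phrases.
        items = [f"{name} {value}" for name, value in values.items()]
    else:
        items = list(values)
    if not items:
        return f"{category.capitalize()}: none recorded."
    return f"{category.capitalize()}: " + ", ".join(items) + "."


def to_pseudonotes(rec: Dict[str, Values]) -> Dict[str, str]:
    """Build one text block per category, kept separate rather than merged."""
    return {category: render_block(category, values) for category, values in rec.items()}


if __name__ == "__main__":
    for block in to_pseudonotes(record).values():
        print(block)
```

Keeping the blocks in a dictionary rather than joining them into one string mirrors the article's point that each category is passed to the language model separately.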

Researchers tested MEME on more than 1.3 million patient visits using data from both UCLA hospitals and the widely used MIMIC database. The model was evaluated across several tasks common in emergency settings, such as predicting hospital admissions, identifying severe conditions, and recommending next steps. In all cases, MEME outperformed existing systems, including traditional machine learning models and healthcare-specific AI tools like CLMBR and Clinical Longformer.

One reason for MEME’s success is its modular structure, according to UCLA. Rather than trying to compress all patient data into a single stream, the system treats each category of information independently, improving clarity and accuracy. This approach also proved more adaptable across different hospital systems, showing promise for use in settings with varying data standards.
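The modular idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not MEME itself: `toy_embed` is a hashed bag-of-words stand-in for a real language model encoder, and joining the per-block vectors by concatenation is an assumed design, since the article does not say how the blocks are combined. The sample text blocks are invented.

```python
import hashlib
from typing import Dict, List

DIM = 8  # tiny vector size, chosen only to keep the example readable


def toy_embed(text: str, dim: int = DIM) -> List[float]:
    """Stand-in encoder: counts tokens in hashed buckets (not a real language model)."""
    vec = [0.0] * dim
    for token in text.lower().split():
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    return vec


def embed_blocks(blocks: Dict[str, str], order: List[str]) -> List[float]:
    """Encode each category on its own, then concatenate in a fixed order.

    A missing category contributes a zero segment, so every patient
    yields a vector of the same length.
    """
    combined: List[float] = []
    for name in order:
        combined.extend(toy_embed(blocks.get(name, "")))
    return combined


if __name__ == "__main__":
    order = ["medications", "labs", "vitals"]
    blocks = {
        "medications": "Medications: lisinopril 10 mg.",
        "vitals": "Vitals: HR 96 bpm.",
    }
    vector = embed_blocks(blocks, order)
    print(len(vector))  # DIM * len(order)
```

Because each category owns its own segment of the final vector, a change in one block (say, a new medication) leaves the segments for the other categories untouched, which is one plausible reading of why independent processing helps clarity.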

“This bridges a critical gap between the most powerful AI models available today and the complex reality of healthcare data,” said Simon Lee, PhD student at UCLA Computational Medicine. “By converting hospital records into a format that advanced language models can understand, we’re unlocking capabilities that were previously inaccessible to healthcare providers.”

Despite these gains, the study notes limitations. While MEME performed well across multiple sites, some drop-off in accuracy occurred when models were used in hospitals with different record-keeping systems.

UCLA indicated future work will focus on expanding the system beyond emergency care to other clinical domains, such as inpatient monitoring and outpatient diagnosis. Researchers also aim to refine how the AI handles unfamiliar medical concepts and integrate new types of data as they become available.

The full study can be accessed here.