Insider Brief

- A new report from New America finds that artificial intelligence is transforming military deception by enabling more precise misinformation while also introducing new vulnerabilities.

- AI systems can be misled by falsified data and may generate false conclusions on their own, making them both tools and targets of deception.

- The study warns that quantum computing could further disrupt deception strategies by breaking encryption or accelerating data manipulation.

Artificial intelligence is becoming both a powerful tool and a serious liability in the evolving art of military deception, according to a new report that examines lessons from the war in Ukraine.

The study, published by the think tank New America and co-authored by former Australian Army officer Mick Ryan and strategist P.W. Singer, identifies AI as one of the most disruptive forces reshaping the future battlefield. From planning ambushes to misleading algorithms, the report argues that AI is enabling deception at new scales while itself being susceptible to deception in novel and potentially dangerous ways, according to a panel discussion with the authors covered by National Defense Magazine.

Smart Systems, Easier to Fool

Militaries have long relied on deception to mislead adversaries. But as AI systems are increasingly tasked with analyzing battlefield data, identifying patterns, and even recommending actions, the targets of deception are no longer just humans; they now include machines.

The report finds that AI can be tricked by manipulated sensor data, falsified signals, or patterns designed to exploit its underlying assumptions. Unlike traditional reconnaissance teams, which can often apply intuition or skepticism, automated systems may interpret corrupted data as fact, resulting in flawed conclusions or misdirected responses.

In one case highlighted in the broader study of the Ukraine conflict, Russia and Ukraine repeatedly caught each other off guard despite the use of drones, satellites, and AI-driven surveillance tools. This, the report says, underscores how digital decision-making systems can become part of the deception loop, both as perpetrators and victims.

AI as a Weapon of Deception

AI isn’t just vulnerable to deception; it can also be used to carry it out, the analysts suggest. The report notes that militaries are already leveraging machine learning to create more effective decoys, simulate false patterns of troop movement, and microtarget disinformation campaigns.

One emerging tactic involves using AI to analyze the beliefs, habits, and vulnerabilities of specific leaders or military planners. That information can then be used to craft messages, fake documents, or digital signals tailored to manipulate decision-makers at a personal level. This precision deception, made possible by data-driven profiling, is far more sophisticated than traditional propaganda.

Microtargeting campaigns—once the domain of advertisers—are now being explored for battlefield influence operations, the report suggests. AI allows military planners to sift through massive troves of intercepted communication, satellite imagery, and digital behavior to determine not just what an adversary is doing, but what they think they’re seeing.

AI + Quantum: A New Frontier of Risk

The study also flags quantum computing as a complementary—and complicating—factor. Quantum systems, while still emerging, have the potential to dramatically increase the speed and scale of AI operations. They could process simulations, decode intercepted signals, and even identify false information patterns faster than conventional systems.

But that same capability could undermine AI-driven deception efforts. If an adversary uses quantum tools to break current encryption standards, they may gain access to protected data streams that support misinformation tactics. Worse, quantum-enhanced decryption could expose military plans that rely on AI-generated misdirection.

At the same time, AI models themselves can “hallucinate” or generate false information based on flawed data or overly confident assumptions. This creates a situation where machines can deceive themselves—undermining decision-making in ways that may not be immediately visible to human overseers.

Civilian Tech, Military Stakes

One of the study’s most pressing concerns is how accessible these technologies have become. The low cost and wide availability of commercial AI tools mean that state and non-state actors alike can now build deception operations without the backing of large defense budgets.

The report notes that authoritarian regimes like Russia and China treat deception as a core military function and are actively integrating AI into operations. In contrast, Western militaries often treat deception as an afterthought, highlighting it in strategy documents but underinvesting in practice, training, and doctrinal support.

This creates a growing asymmetry, described in the report as a “deception gap,” in which rivals may move faster and with fewer ethical or institutional constraints. AI is not just speeding up traditional warfare—it’s amplifying the importance of deception while exposing new weaknesses.

Rewiring Strategy for the Algorithmic Battlefield

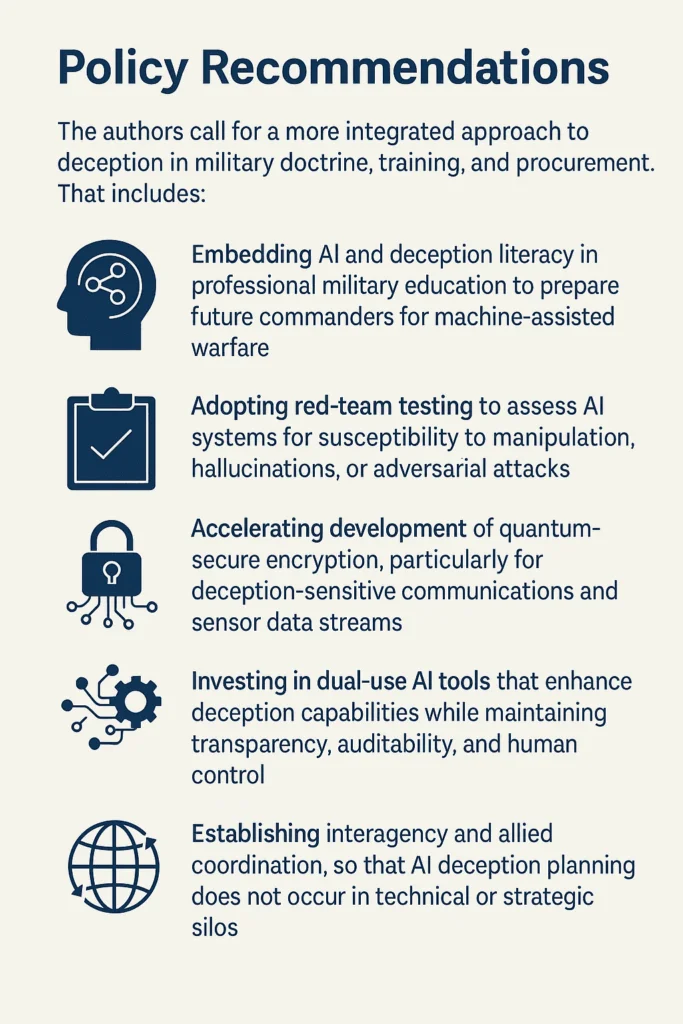

To close that gap, the authors argue that democratic militaries must update their approach to technology-driven deception. That means integrating AI literacy into officer training, adapting acquisition systems to support rapid iteration, and building safeguards that recognize the limits of machine judgment.

AI can analyze patterns, plan scenarios, and even simulate adversary behavior. But the report makes clear that it cannot distinguish truth from manipulation without human oversight. In the wrong hands — or under flawed assumptions — AI can help create illusions that even its creators believe.

As warfare becomes more algorithmic, the question is no longer whether AI will be part of deception, but whether military leaders can keep up with the speed and subtlety of machines trained to deceive.

Limitations

While the report draws extensively on the Ukraine conflict, it acknowledges that the case studies are context-dependent and may not generalize to other theaters of war. The technologies discussed — especially quantum computing — are at different levels of maturity and may not be deployed at scale in the near term. The study also does not quantify the effectiveness of AI-enabled deception versus traditional methods, nor does it model failure rates or unintended consequences in complex battlefield environments.

The analysts stop short of assessing legal or ethical constraints that may affect the use of these technologies, particularly in democracies. The report also does not fully address how militaries should handle AI hallucinations or false positives in high-stakes decisions.
