Insider Brief

- A new study from the University of Exeter finds that large language models can generate and propagate “feral gossip”: unverified, evaluative narratives that can cause reputational, psychological, and social harm at scale, sometimes exceeding the harm done by human rumor-spreading.

- The researchers document real-world cases in which chatbots produced false or damaging claims about individuals, arguing that AI-generated gossip is particularly persuasive because it blends authoritative tone, plausible narrative framing, and mixed factual accuracy.

- The analysis warns that bot-to-bot dissemination and user-seeded gossip could amplify these harms unchecked, as AI systems lack social norms or corrective pressures, making reputational risk a growing challenge for AI governance.

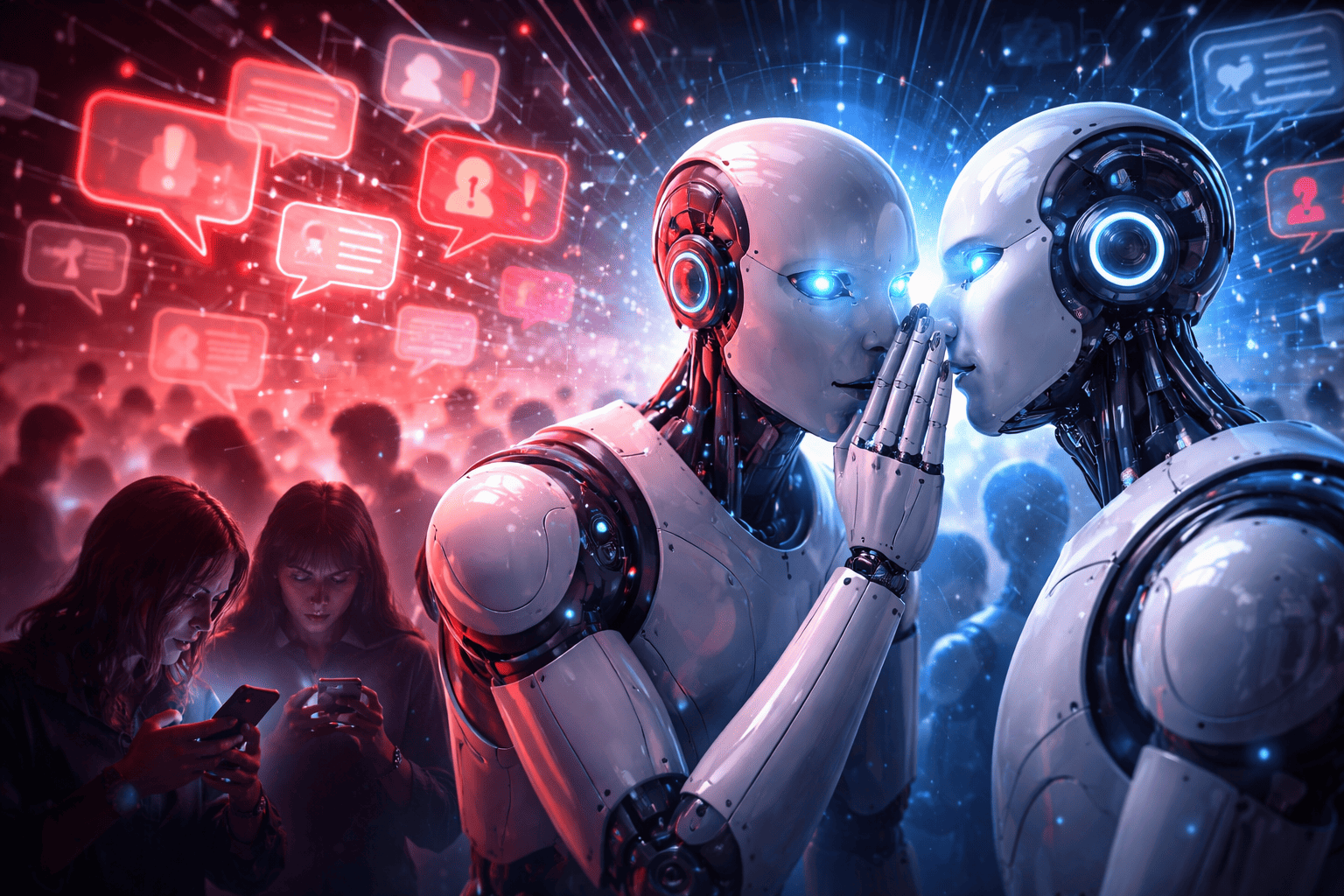

A new academic analysis from the University of Exeter warns that artificial intelligence systems are increasingly capable of generating and spreading what the authors describe as “feral gossip,” a form of unverified, evaluative rumor that can cause reputational damage, psychological distress, and social harm at scale. The study, published in Ethics and Information Technology, argues that large language models do not merely hallucinate facts but can systematically produce and propagate gossip-like narratives that mirror — and in some cases exceed — the harms associated with human rumor-spreading.

According to Exeter, the research, conducted by philosophers Joel Krueger and Lucy Osler, examines how conversational AI systems generate negative character assessments, insinuations, and speculative claims about individuals, often without clear sourcing or contextual safeguards. The authors document real-world incidents in which chatbots produced false or damaging claims, including allegations of unethical behavior, financial crimes, and professional misconduct. These outputs, the study finds, can result in reputational harm, shame, anxiety, and erosion of trust, even when the claims have no factual basis.

Unlike traditional misinformation, AI-generated gossip poses a distinct risk because it blends evaluative judgment with plausible narrative framing, making it harder for users to distinguish fabrication from analysis. The researchers argue that the authoritative tone of AI systems, combined with their ability to intermix accurate information with false claims, increases the likelihood that users will accept gossip-like outputs as credible. This dynamic is intensified as chatbots become more personalized and socially engaging, reinforcing user trust and emotional reliance.

According to Exeter, the study identifies bot-to-bot gossip as a particularly underexamined risk. When AI systems exchange information without human oversight or social norms, gossip can propagate unchecked, accumulating exaggerations and distortions as it spreads across systems. Because bots have no concern for reputation, no empathy, and no exposure to corrective social pressure, the authors argue that this form of dissemination may be broader in scope and more persistent than human rumor networks.

The researchers also warn of emerging user-to-bot-to-user pathways, in which individuals intentionally seed AI systems with gossip, anticipating that it will be amplified and redistributed. In this role, chatbots function as intermediaries that accelerate and normalize rumor transmission rather than merely reflecting user intent.

The paper concludes that as AI systems become more socially embedded, governance frameworks must account not only for factual accuracy but also for reputational risk, evaluative harm, and the social dynamics of automated speech. Without intervention, the authors argue, AI-driven gossip is likely to become more frequent, pervasive, and damaging as conversational systems scale.