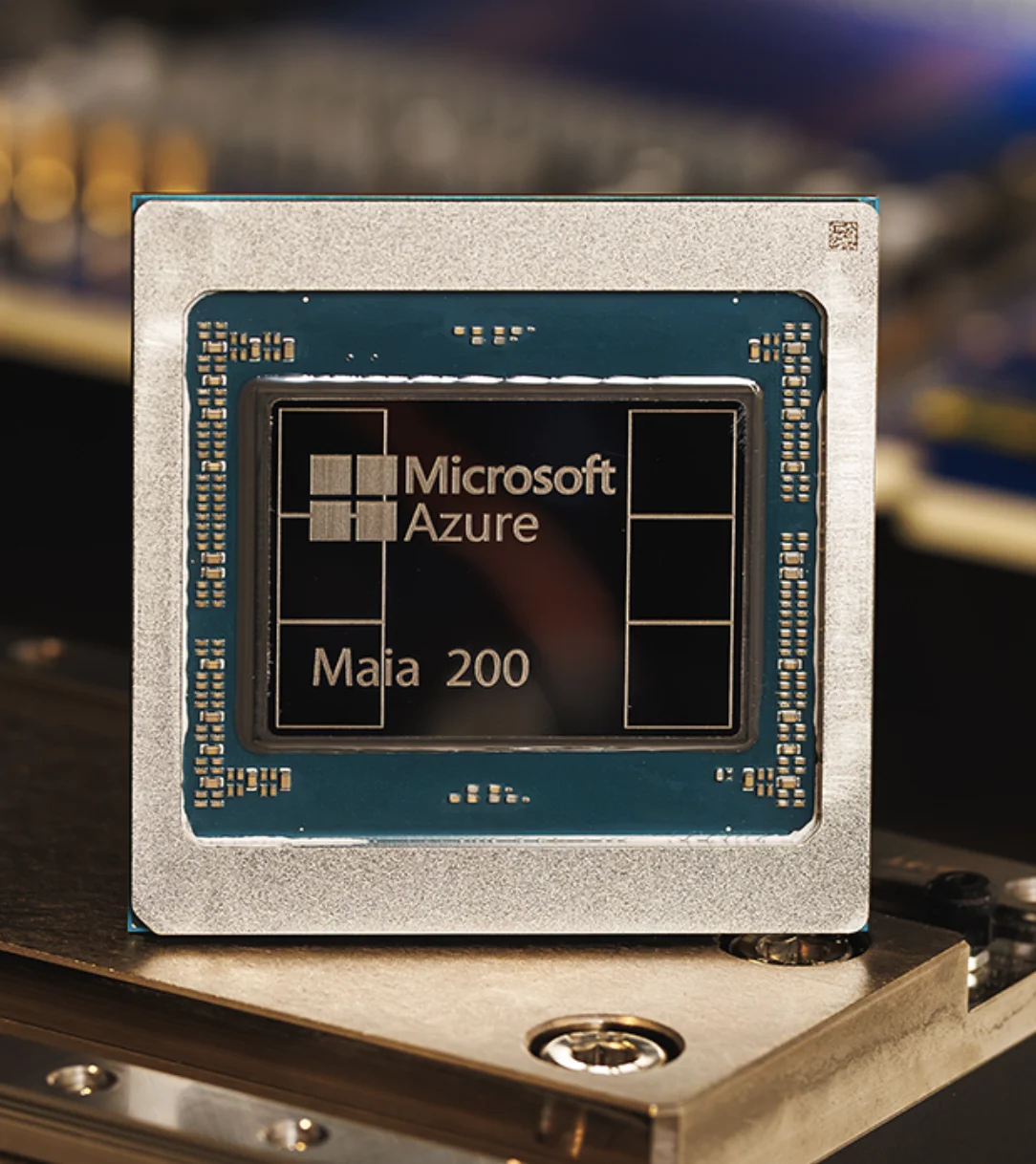

Microsoft has launched the Maia 200, a custom-designed AI chip built to scale inference with higher performance and improved energy efficiency. The new chip succeeds the Maia 100, introduced in 2023. It features more than 100 billion transistors and delivers over 10 petaflops at 4-bit precision and roughly 5 petaflops at 8-bit precision.

As inference accounts for a growing share of AI operating expenses, Microsoft is positioning Maia 200 as a workhorse capable of running today’s largest models while leaving headroom for future growth. The chip is already powering workloads from Microsoft’s Superintelligence team and Copilot, and the company has opened access to the Maia 200 software development kit for developers, researchers, and frontier AI labs. The launch underscores Microsoft’s broader strategy to optimize its AI infrastructure while reducing reliance on third-party GPUs.

Featured image credit: Microsoft