Insider Brief:

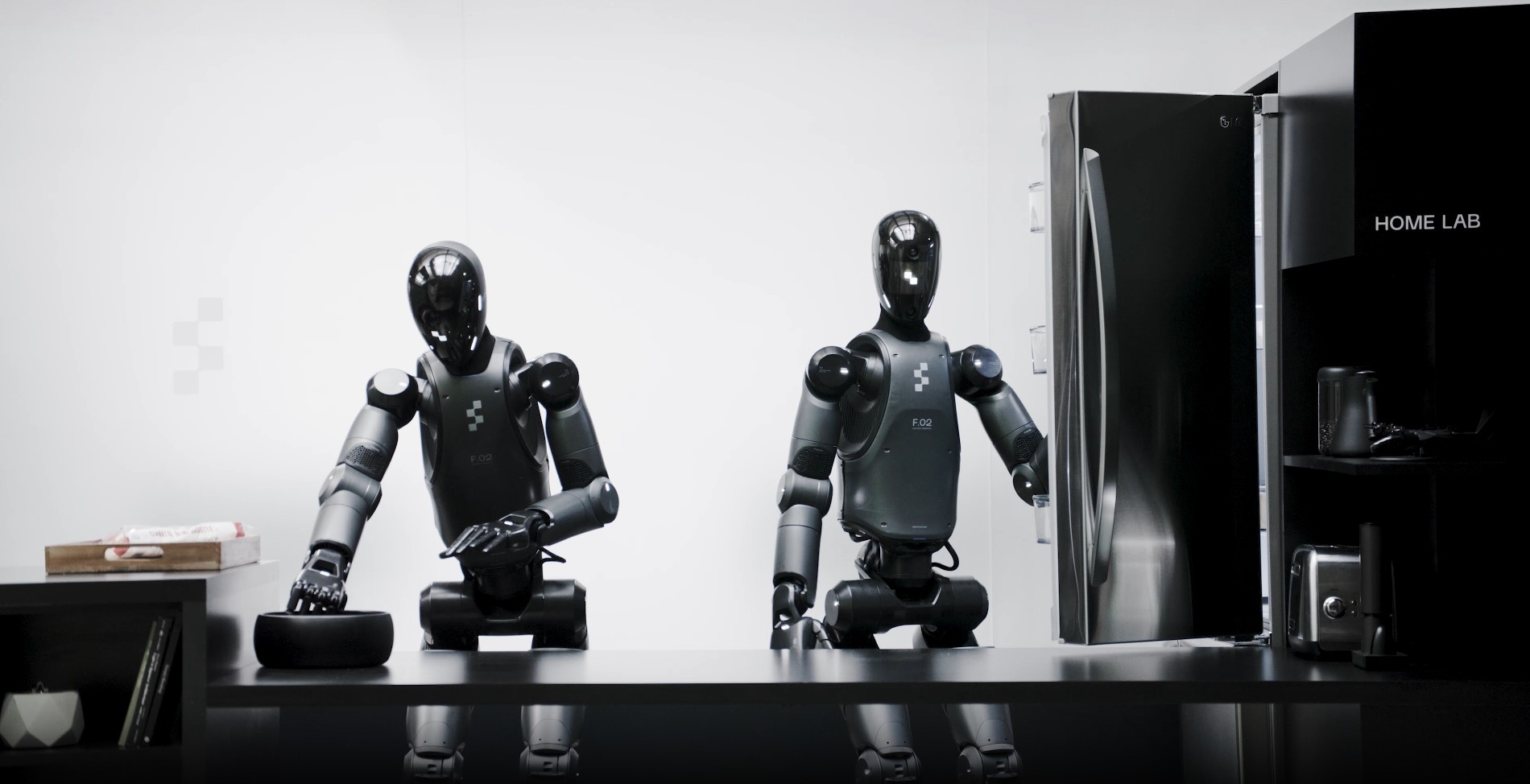

- Figure introduced Helix, a Vision-Language-Action (VLA) model that enables humanoid robots to perceive their surroundings, understand language, and perform dexterous motor tasks without task-specific training.

- Helix allows robots to manipulate objects, collaborate with other robots, and generalize tasks using a single neural network, eliminating the need for task-specific fine-tuning.

- The model operates on a dual-system architecture, combining a Vision-Language Model (S2) for scene understanding with a high-speed motor control system (S1) for real-time execution.

- Helix runs efficiently on embedded GPUs, making it commercially viable for deployment in homes, warehouses, and other real-world environments.

PRESS RELEASE: In a recent post, robotics startup Figure unveiled Helix, a Vision-Language-Action (VLA) model designed to enhance humanoid robot capabilities by integrating perception, language understanding, and motor control. Unlike traditional approaches that require extensive manual programming or thousands of demonstrations, Helix enables robots to pick up and manipulate unfamiliar objects, collaborate on complex tasks, and respond to natural language commands in real time.

Key Features of Helix

- Full Upper-Body Control – Helix allows robots to control their wrists, fingers, torso, head, and arms with precision, enabling dexterous object manipulation.

- Multi-Robot Collaboration – Robots using Helix can work together on tasks, such as sorting groceries, without predefined role assignments.

- Generalized Object Handling – Equipped robots can pick up and interact with thousands of household objects they have never encountered before.

- Single Neural Network – Unlike previous models requiring specialized training for each task, Helix learns all behaviors with a single model and no task-specific fine-tuning.

- Commercial Deployment-Ready – Helix runs entirely on embedded GPUs, making it efficient for real-world use.

A New Precedent for Home Robotics

Robots have traditionally struggled in unstructured environments such as homes, where objects vary in shape, texture, and placement. Teaching a robot a new task has required either manual programming by experts or large amounts of task-specific training data, both of which are costly and hard to scale. Helix addresses these limitations by generalizing on the fly: instead of being reprogrammed or retrained, a robot can take on a new task simply from a natural-language instruction.

Helix is based on a dual-system architecture inspired by cognitive models of decision-making:

System 2 (S2): Language and Scene Understanding

- Uses a Vision-Language Model (VLM) to interpret visual input and natural language commands.

- Processes scenes at 7–9 Hz (seven to nine updates per second), enabling broad generalization across objects and tasks.

System 1 (S1): Fast Motor Control

- Translates S2’s high-level understanding into precise, continuous robot actions at 200 Hz, allowing real-time adjustments.

- Coordinates hand, wrist, and torso movements, allowing robots to pick up objects and interact with them smoothly.
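The two reported rates give a rough sense of how the systems divide the work. Assuming, as an idealization, that both run steadily at their stated frequencies, S1 issues one command every 5 ms while S2 refreshes its understanding every 111 to 143 ms, so S1 produces roughly 22 to 29 motor commands per S2 update:

$$
T_{S1} = \frac{1}{200\,\text{Hz}} = 5\,\text{ms}, \qquad
T_{S2} = \frac{1}{9\,\text{Hz}} \approx 111\,\text{ms} \;\;\text{to}\;\; \frac{1}{7\,\text{Hz}} \approx 143\,\text{ms}
$$

$$
\frac{f_{S1}}{f_{S2}} = \frac{200\,\text{Hz}}{9\,\text{Hz}} \approx 22.2 \;\;\text{to}\;\; \frac{200\,\text{Hz}}{7\,\text{Hz}} \approx 28.6
$$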

This structure lets Helix reason at a high level while making fast, fine-grained motor adjustments, which makes it well suited to collaborative and dexterous tasks.
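The slow-planner, fast-controller split can be illustrated with a toy dual-rate loop. This is a minimal sketch under stated assumptions, not Figure's implementation: `slow_s2`, `fast_s1`, and `run_dual_rate` are hypothetical names, the "latent" is a placeholder dictionary rather than a real VLM output, S2 is assumed to run at 8 Hz (a point inside the reported 7–9 Hz range), and time is simulated with integer ticks instead of running on real hardware clocks.

```python
from collections import Counter


def slow_s2(version: int) -> dict:
    """Hypothetical stand-in for S2 (the VLM).

    A real system would run a vision-language model on camera images and the
    spoken command; here we only tag the latent with a version number.
    """
    return {"version": version, "goal": "pick up the object"}


def fast_s1(latent: dict, tick: int) -> dict:
    """Hypothetical stand-in for S1 (the fast motor policy).

    A real policy would also read fresh sensor data every tick; here we only
    record which S2 latent this action was conditioned on.
    """
    return {"tick": tick, "latent_version": latent["version"]}


def run_dual_rate(duration_s: float, s1_hz: int = 200, s2_hz: int = 8) -> list:
    """Simulate S1 acting at s1_hz while S2 refreshes its latent at s2_hz.

    Time advances in integer S1 ticks (no sleeping), so results are deterministic.
    """
    total_ticks = int(duration_s * s1_hz)
    latent = None
    s2_calls = 0
    actions = []
    for tick in range(total_ticks):
        # S2 fires when the slow clock reaches its next period (including tick 0).
        if s2_calls <= (tick * s2_hz) // s1_hz:
            latent = slow_s2(s2_calls)
            s2_calls += 1
        # S1 acts on every tick, always using the most recent latent from S2.
        actions.append(fast_s1(latent, tick))
    return actions


if __name__ == "__main__":
    actions = run_dual_rate(1.0)
    per_latent = Counter(a["latent_version"] for a in actions)
    print(len(actions), len(per_latent), per_latent[0])  # prints: 200 8 25
```

Over one simulated second, the sketch yields 200 S1 actions spread across 8 S2 updates, with 25 fast actions conditioned on each slow latent. The design choice it highlights is that S1 never waits for S2: it keeps acting on the latest available latent, which is what allows fine motor control to stay responsive while the slower model deliberates.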

Generalization Without Fine-Tuning

Unlike existing models that require dedicated fine-tuning for each new task, Helix learns all behaviors in a single training stage from 500 hours of high-quality supervised data. The training data covers a variety of tasks, such as:

- Opening and closing drawers and refrigerators

- Picking and placing objects in containers

- Handing items between robots

Zero-Shot Object Handling and Multi-Robot Coordination

Helix-powered robots recognize and manipulate objects they have never seen before, simply by being told to do so. In systematic tests, robots successfully grasped thousands of objects without additional training. Helix also lets multiple robots coordinate with one another using only natural-language prompts.

Optimized for Real-World Deployment

Helix runs efficiently on low-power embedded GPUs, making it viable for commercial applications. Its real-time control pipeline ensures robots can adapt quickly in dynamic environments, maintaining smooth interactions while responding to unexpected changes.

Helix marks a significant step toward scaling intelligent, dexterous robots for real-world use. By unifying language understanding with fine motor control, it sets a precedent for general-purpose humanoid robots that can assist in homes, warehouses, and beyond. Figure plans to continue scaling Helix, expanding what robots can do with real-time, language-driven intelligence.