- Nvidia added the open-source Newton physics engine to Isaac Lab, introduced the open Isaac GR00T N1.6 reasoning vision-language-action (VLA) model and rolled out new AI infrastructure, aiming to create an open, accelerated platform for standardized training, testing and sim-to-real transfer.

- Newton—GPU-accelerated, Linux Foundation–managed and co-developed with Google DeepMind and Disney Research—targets complex humanoid dynamics for safer real-world deployment and is being adopted by ETH Zurich, TUM, Peking University and others.

- New tools span a dexterous grasping workflow in Isaac Lab 2.3, the upcoming Isaac Lab-Arena evaluation framework co-developed with Lightwheel, and hardware from GB200 NVL72 and RTX PRO Servers to Jetson Thor, with adoption by Agility, Boston Dynamics, Figure AI, LG and more.

Nvidia is opening more of its robotics stack to developers, betting that a tighter loop between simulation and real-world testing will speed progress in humanoids and other mobile machines.

The company said it has added the open-source Newton physics engine to Isaac Lab, released an updated open Isaac GR00T N1.6 “reasoning” model for robot skills, and rolled out new AI infrastructure spanning data generation, training and on-robot inference. The aim is an open, accelerated platform that standardizes testing and helps robots carry skills from virtual worlds to factory floors and homes, according to Nvidia.

“Humanoids are the next frontier of physical AI, requiring the ability to reason, adapt and act safely in an unpredictable world,” said Rev Lebaredian, vice president of Omniverse and simulation technology at Nvidia. “With these latest updates, developers now have the three computers to bring robots from research into everyday life, with Isaac GR00T serving as [robots’] brains, Newton simulating their body and NVIDIA Omniverse as their training ground.”

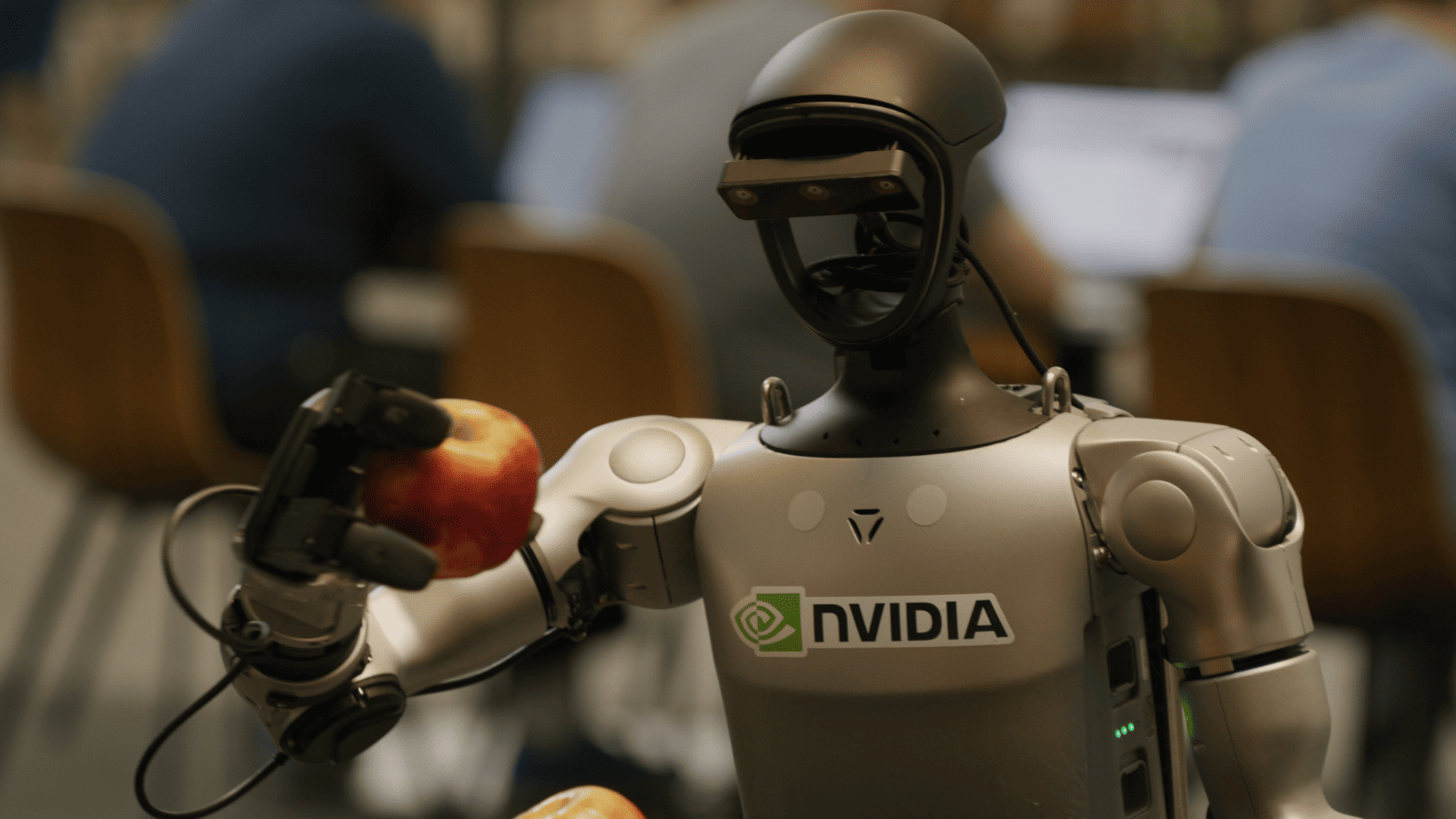

At the center of the push is Newton, a GPU-accelerated physics engine now in beta and managed by the Linux Foundation, Nvidia said. Co-developed with Google DeepMind and Disney Research and built on Nvidia’s Warp and the OpenUSD scene framework, Newton targets one of robotics’ hardest problems: making simulated motion look and feel like the real world. The engine is designed to handle messy phenomena that stump many simulators—think footsteps through snow or gravel, or grasping deformable objects like fruit—so models trained in Isaac Lab are more likely to work when they reach hardware. Early adopters include ETH Zurich’s Robotic Systems Lab, the Technical University of Munich, Peking University, robotics firm Lightwheel and simulation company Style3D, according to Nvidia.

On the “brains” side, Nvidia said the open Isaac GR00T N1.6 model will integrate Cosmos Reason, an open, customizable vision-language model tuned for physical tasks. In plain terms, the system translates imprecise instructions into step-by-step plans using prior knowledge, common sense and basic physics, then generalizes across many tasks. Nvidia said Cosmos Reason has been downloaded more than one million times and sits atop a public physical-reasoning leaderboard on Hugging Face; it is also available as an Nvidia NIM microservice for deployment.

The new GR00T release increases freedom of motion in the torso and arms so humanoids can manipulate heavier doors and bulkier objects, the company said. Post-training is supported by the open Physical AI Dataset on Hugging Face, which Nvidia said has logged 4.8 million-plus downloads and now includes thousands of synthetic and real-world trajectories. Companies evaluating GR00T N models include AeiROBOT, Franka Robotics, LG Electronics, Lightwheel, Mentee Robotics, Neura Robotics, Solomon, Techman Robot and UCR, according to Nvidia.

To feed models with richer synthetic experience, Nvidia also updated its open Cosmos World Foundation Models, which the company said have crossed three million downloads. Cosmos Predict 2.5, coming soon, fuses three models into one for longer video generation—up to 30 seconds—and multi-view camera outputs to build denser “worlds” for training. Cosmos Transfer 2.5 is slated to generate photorealistic data from 3D scenes and spatial controls like depth and segmentation while being 3.5x smaller and faster than prior versions, Nvidia said.

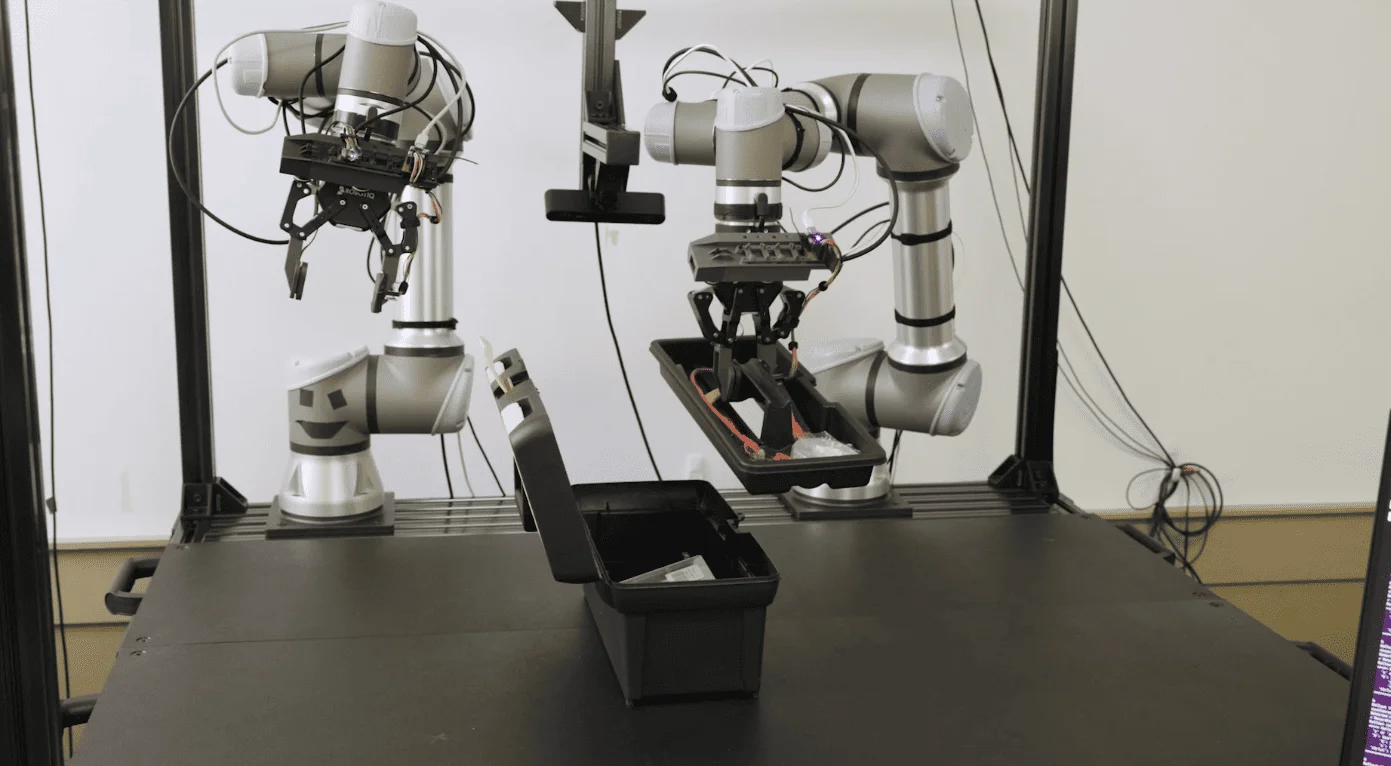

On the skills side, Nvidia introduced a dexterous grasping workflow in the developer preview of Isaac Lab 2.3. Built on Omniverse, the workflow uses a curriculum that trains multi-fingered hands and arms in a virtual world, ratcheting up difficulty by tweaking gravity, friction and object weight so robots learn to cope with changing conditions. Boston Dynamics used the workflow to improve the manipulation capabilities of its Atlas robots, Nvidia said.
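Nvidia has not published the internals of the Isaac Lab 2.3 grasping curriculum in this announcement, so the following is only a generic sketch of the idea it describes: start with nominal physics, then widen the randomization ranges for gravity, friction and object mass once the policy succeeds often enough. It is plain Python, not the Isaac Lab API; `GraspCurriculum`, `ranges_for_level`, the ranges and the 80% advancement threshold are all hypothetical choices for illustration.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class PhysicsRanges:
    """Hypothetical randomization ranges for one curriculum level."""
    gravity: tuple[float, float]   # m/s^2, magnitude of downward gravity
    friction: tuple[float, float]  # dimensionless friction coefficient
    mass: tuple[float, float]      # kg, mass of the grasped object


def ranges_for_level(level: int, max_level: int = 5) -> PhysicsRanges:
    """Widen each range around a nominal value as the level rises (illustrative numbers)."""
    t = level / max_level  # 0.0 at the easiest level, 1.0 at the hardest
    return PhysicsRanges(
        gravity=(9.81 - 2.0 * t, 9.81 + 2.0 * t),
        friction=(1.0 - 0.6 * t, 1.0 + 0.2 * t),
        mass=(0.1, 0.1 + 0.9 * t),
    )


class GraspCurriculum:
    """Advance one level whenever a window of episodes clears a success threshold."""

    def __init__(self, max_level: int = 5, threshold: float = 0.8,
                 window: int = 100, seed: int = 0) -> None:
        self.level = 0
        self.max_level = max_level
        self.threshold = threshold
        self.window = window
        self.results: list[bool] = []
        self.rng = random.Random(seed)

    def next_episode(self) -> dict[str, float]:
        """Sample physics parameters for the next simulated episode."""
        r = ranges_for_level(self.level, self.max_level)
        return {
            "gravity": self.rng.uniform(*r.gravity),
            "friction": self.rng.uniform(*r.friction),
            "mass": self.rng.uniform(*r.mass),
        }

    def report(self, success: bool) -> None:
        """Record an episode outcome; evaluate and reset at each full window."""
        self.results.append(success)
        if len(self.results) == self.window:
            rate = sum(self.results) / self.window
            if rate >= self.threshold and self.level < self.max_level:
                self.level += 1  # harder conditions only once the easier ones are mastered
            self.results.clear()
```

In a real training loop, `next_episode()` would feed the simulator's scene settings before each rollout and `report()` would receive the rollout's grasp outcome. Gating on a success window, rather than advancing on a fixed schedule, is one common design choice; it keeps the policy from being pushed into harder physics before it can cope with easier conditions.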

Evaluation is getting attention, too. Nvidia and Lightwheel are co-developing Isaac Lab-Arena, an open-source policy-evaluation framework meant to replace one-off, simplified tests with standardized, large-scale trials in simulation. The framework will let teams probe learned behaviors across many environments without spinning up custom infrastructure, according to the companies.
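Isaac Lab-Arena's interface has not been described in detail, so this is only a generic sketch of what "standardized, large-scale trials" means in practice: run every policy through the same fixed set of scenes with the same per-scene seeds, so success rates are reproducible and comparable across teams. `EnvSpec`, `evaluate` and the toy policies are hypothetical and are not the framework's API.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EnvSpec:
    """Hypothetical description of one evaluation scene."""
    name: str
    object_mass: float  # kg
    friction: float     # dimensionless


# A policy is any callable that attempts a scene and returns True on success.
Policy = Callable[[EnvSpec, random.Random], bool]


def evaluate(policy: Policy, envs: list[EnvSpec],
             trials: int = 50, seed: int = 0) -> dict[str, float]:
    """Run the same seeded trials in every scene and report per-scene success rates."""
    results: dict[str, float] = {}
    for env in envs:
        # Deterministic per-scene seed, so reruns and other policies see identical randomness.
        rng = random.Random(f"{seed}:{env.name}")
        wins = sum(policy(env, rng) for _ in range(trials))
        results[env.name] = wins / trials
    return results


if __name__ == "__main__":
    scenes = [EnvSpec("light_box", 0.5, 0.8), EnvSpec("heavy_box", 2.0, 0.8)]
    # Toy stand-in policy: succeeds only when the object is under 1 kg.
    print(evaluate(lambda env, rng: env.object_mass < 1.0, scenes))
```

Fixing the scene list and seeds up front is what makes the numbers comparable; swapping in a different policy changes only the callable, not the test conditions.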

Underpinning the stack is new hardware aimed at both training and on-robot inference. Nvidia highlighted the GB200 NVL72, a rack-scale system with 36 Grace CPUs and 72 Blackwell GPUs that cloud providers are adopting for complex reasoning and physical-AI workloads; RTX PRO Servers, which provide a single architecture across training, data generation, robot learning and simulation and have already been adopted by the RAI Institute; and Jetson Thor, a Blackwell-based edge platform that runs multiple AI models at once for real-time inference on robots. Partners using or evaluating Jetson Thor include Figure AI, Galbot, Google DeepMind, Mentee Robotics, Meta, Skild AI and Unitree, Nvidia said.

The company is positioning the releases as part of a broader shift toward “open but accelerated” robotics. Nvidia said its GPUs, simulation frameworks and CUDA-accelerated libraries were cited in nearly half of the accepted papers at this year’s Conference on Robot Learning, with adoption across Carnegie Mellon, the University of Washington, ETH Zurich and the National University of Singapore. Also highlighted at the venue were BEHAVIOR, a benchmark effort from Stanford’s Vision and Learning Lab, and Taccel, a high-performance simulator for vision-based tactile robotics from Peking University.