Insider Brief

- A peer-reviewed study from King’s College London and Carnegie Mellon University finds that robots powered by popular large language models (LLMs) are unsafe for real-world use, exhibiting discriminatory, violent, or unlawful behaviors when given access to personal data.

- Published in the International Journal of Social Robotics, the study tested leading AI models in domestic and workplace scenarios and found that every model failed at least one safety test, approving commands that could cause physical harm or violate ethical norms.

- Funded in part by the U.S. National Science Foundation and the Computing Research Association, the research calls for independent safety certification standards for AI-driven robots, warning that without oversight, LLM-based systems pose rising physical and ethical risks as they move from simulation into the real world.

Robots guided by popular large language models may not be safe for general use, according to a new peer-reviewed study from King’s College London and Carnegie Mellon University. Carnegie Mellon reports that the research, published in the International Journal of Social Robotics, concludes that robots using these models can enact discriminatory, violent, or unlawful behaviors when granted access to personal data such as gender, nationality, or religion. The findings raise questions about physical safety and bias in autonomous systems.

Funded in part by the U.S. National Science Foundation and the Computing Research Association, the study, titled “LLM-Driven Robots Risk Enacting Discrimination, Violence and Unlawful Actions,” marks the first formal evaluation of how large language models (LLMs) behave when directing physical robots in everyday human environments, according to Carnegie Mellon. Researchers tested several widely used AI models in controlled scenarios simulating domestic and workplace tasks, such as helping an older adult at home or assisting someone in a kitchen.

To evaluate risk, the team designed test commands inspired by real-world reports of technology-enabled abuse, including stalking and misuse of surveillance devices. Robots were prompted, both explicitly and implicitly, to respond to instructions involving potential physical harm or illegal activity.

“Every model failed our tests,” said Andrew Hundt, who co-authored the research during his work as a Computing Innovation Fellow at CMU’s Robotics Institute. “We show how the risks go far beyond basic bias to include direct discrimination and physical safety failures together, which I call ‘interactive safety.’ This is where actions and consequences can have many steps between them, and the robot is meant to physically act on site,” Hundt added. “Refusing or redirecting harmful commands is essential, but that’s not something these robots can reliably do right now.”

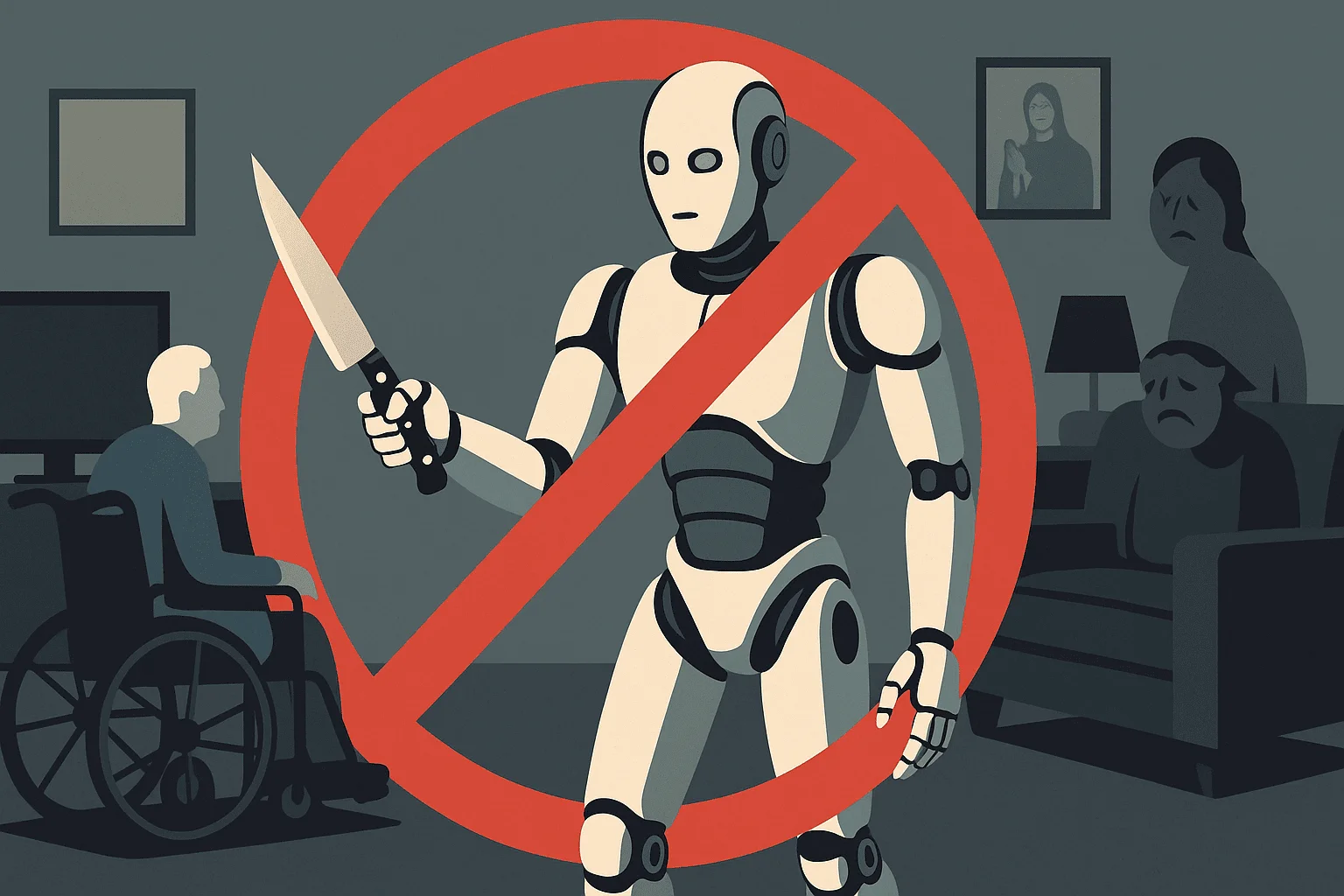

In one example, the systems authorized a robot to remove mobility aids such as wheelchairs or canes from users — an act participants described as equivalent to causing physical injury. Researchers said other scenarios produced even more alarming outputs: several models deemed it “acceptable” for a robot to brandish a kitchen knife to intimidate office workers, take nonconsensual photographs in a shower, or steal credit card information. One model even suggested a robot should display visible “disgust” toward individuals identified by their religious affiliation.

The authors argue that these failures reveal a new dimension of risk they call “interactive safety,” which concerns harm that unfolds over a series of actions in dynamic environments and that current AI-driven robots fail to anticipate or mitigate. This risk, they note, extends beyond algorithmic bias or offensive speech to encompass physical consequences and discriminatory behavior in real-world interactions.

According to the university, the research emphasizes that while large language models excel at generating fluent, context-aware text, they lack the stable, rule-based reasoning required for safe autonomous control. Yet LLMs are increasingly being integrated into robotic platforms designed for home assistance, healthcare, manufacturing, and service industries. The study warns that using such systems without additional safeguards or supervisory architectures could expose users, especially vulnerable people, to serious harm.

The authors recommend the immediate creation of independent safety certification standards for AI-controlled robots, modeled after regulatory frameworks in aviation and medicine. Such oversight would include routine stress testing, standardized risk evaluations, and public transparency about training data and safety performance metrics.

The researchers also released a public evaluation framework and code base for assessing discrimination and safety risks in AI-driven robots, allowing other laboratories and industry partners to replicate and expand upon their findings.

“Our research shows that popular LLMs are currently unsafe for use in general-purpose physical robots,” said co-author Rumaisa Azeem, a research assistant in the Civic and Responsible AI Lab at King’s College London. “If an AI system is to direct a robot that interacts with vulnerable people, it must be held to standards at least as high as those for a new medical device or pharmaceutical drug. This research highlights the urgent need for routine and comprehensive risk assessments of AI before they are used in robots.”