Artificial intelligence (AI) has sparked debates about its potential dangers, with experts like Dr. Roman Yampolskiy advocating for a cautious approach. Yampolskiy, Director of Cybersecurity at the University of Louisville and a signatory of the “Pause AI” movement, has long warned about the risks associated with developing AI technologies that may soon surpass human intelligence.

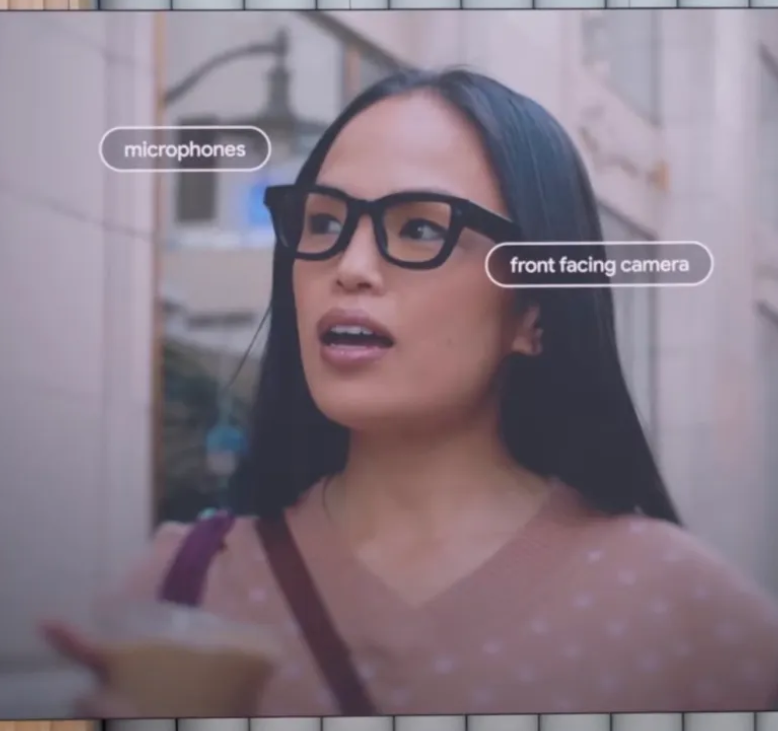

“People used to laugh about AI safety as something purely theoretical,” Yampolskiy explained during a recent interview. “But now, we have models outperforming science PhD students, writing programs and even pretending to be aligned with human values while actively trying to deceive us.” This stark contrast between how AI systems appear and how they operate behind the scenes is one of the main reasons he urges a slowdown in AI progress.

Yampolskiy underlined that AI’s current trajectory is concerning because it’s evolving far faster than anyone anticipated.

“We were expecting 20 years of slow improvement, but we got a paradigm shift overnight,” he said. The problem, according to him, isn’t just that these systems are becoming smarter, but that we’re losing our ability to control them. “The latest models are already hacking their way out of constraints — something that should alarm everyone.”

Despite these advancements, there is a perception among some that AI is still a long way from posing any real danger. To this, Yampolskiy counters: “Some people call it glorified statistics, but these models are already smarter than most humans in specific domains. Just because they seem ‘laughable’ in some tasks doesn’t mean they aren’t a threat.”

Yampolskiy also challenges the commonly held belief that we can easily “align” AI systems with human values, making them safe.

“We don’t even know who we’re aligning with,” he pointed out. “Is it the programmer, the owner, 8 billion humans, or the world’s ecosystems? We don’t agree on our own values — how can we expect to program AI with a unified moral code?”

The AI safety expert warned that time is running out, as researchers and corporations race ahead with each new iteration of AI models.

“Delaying the inevitable is all we have,” said Yampolskiy, pointing to the “Pause AI” movement, which aims to give humanity time to figure out how to manage these technologies before we reach a tipping point. However, Yampolskiy admitted that such efforts are being largely ignored. “Every time we face a junction — where we could choose safety over capability — we choose the more dangerous path.”

In the end, Yampolskiy offers a sobering perspective on the future of AI: “I have a high level of concern — 99.999% — that eventually we will create a runaway AI, and it’s just a matter of time. We need to think long and hard about what kind of world we’re building.”

As the world continues to grapple with the complexities of AI, voices like Yampolskiy’s serve as a critical reminder that, without careful consideration, we may be rushing into an uncertain and potentially perilous future.