Insider Brief

- Researchers at Fuzhou University in China have developed a machine vision sensor that mimics the human eye’s rapid light adaptation, aiming to improve vision systems in robotics and autonomous vehicles.

- Published in Applied Physics Letters by AIP Publishing, the study introduces a quantum dot-based sensor that adjusts to lighting changes in about 40 seconds, more than twice as fast as the human eye, and reduces computational load through retina-like data filtering.

- The layered device design—combining lead sulfide quantum dots, polymer, and zinc oxide—demonstrates promise for scaling into edge-AI arrays and smart transportation platforms facing abrupt light transitions.

A research team in China has developed a machine vision sensor that mimics the human eye’s ability to adapt to sudden changes in light—potentially transforming how robots and autonomous vehicles see in real-world conditions.

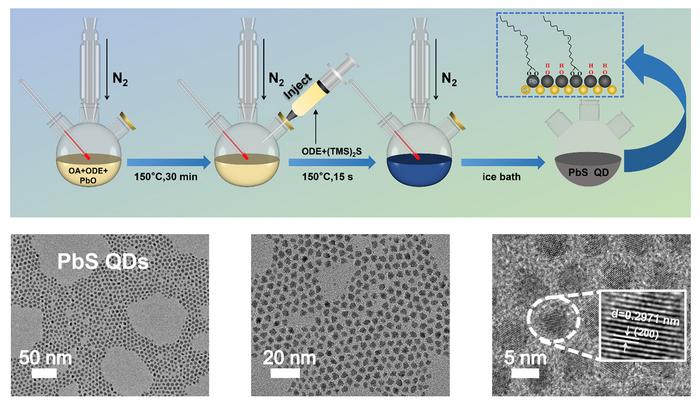

In a paper published this week in Applied Physics Letters by AIP Publishing, researchers at Fuzhou University described a bio-inspired sensor that uses engineered quantum dots (nanoscale semiconductors) to adjust to lighting shifts in about 40 seconds, more than twice as fast as the human eye, according to the American Institute of Physics. The device is designed to function like the human visual system, dynamically managing electrical signals in response to brightness levels and filtering visual data at the source.

“Our innovation lies in engineering quantum dots to intentionally trap charges like water in a sponge then release them when needed — similar to how eyes store light-sensitive pigments for dark conditions,” noted author Yun Ye.

The implications are wide-reaching. Current machine vision systems, often used in robotics and driverless cars, tend to process every detail captured by the camera, which wastes energy and slows response times. The Fuzhou team’s approach avoids that overhead by prioritizing relevant information the way a human retina does. Their device adapts to both glare and darkness while reducing the computational burden on downstream systems.

At the heart of the technology is a layered sensor structure: lead sulfide quantum dots embedded between polymer and zinc oxide films, topped with specialized electrodes. The quantum dots act like visual memory, capturing light-based information and releasing it strategically, which mirrors how the eye stores and uses light-sensitive pigments.
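To make the trap-and-release idea concrete, here is a minimal toy sketch in Python. It is not the device physics from the paper: the first-order relaxation, the time constant `tau`, the step size `dt`, and the gain formula are all illustrative assumptions, chosen only so that the adapted state settles within a few tens of seconds.

```python
def simulate_adaptation(intensities, dt=0.1, tau=10.0):
    """Toy adaptation model (hypothetical, not the published device).

    `trapped` stands in for stored charge. It relaxes toward the current
    light level, and the output is the light level divided by a gain term
    that grows with the stored charge.
    """
    trapped = intensities[0]  # assume the sensor starts adapted to the first level
    outputs = []
    for light in intensities:
        # Stored charge drifts toward the current light level (Euler step).
        trapped += (light - trapped) * (dt / tau)
        # Response shrinks as stored charge builds up (simple gain control).
        outputs.append(light / (1.0 + trapped))
    return outputs


# 10 s of near darkness, then 40 s of bright light (arbitrary units).
signal = [0.01] * 100 + [100.0] * 400
response = simulate_adaptation(signal)
print("just after the step:", round(response[100], 2))
print("after 40 s of light:", round(response[-1], 2))
```

In this sketch the response spikes when the light jumps, as in leaving a tunnel, and then settles as the stored state catches up, which is the qualitative behavior the article describes.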

According to the researchers, this allows the sensor not only to adjust quickly to lighting shifts, such as driving from a dark tunnel into bright sunlight, but also to process visual information more efficiently. By filtering and preprocessing the light at the sensor level, the system eliminates the need for bulkier computing hardware or external algorithms to make sense of visual input.
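The benefit of filtering at the source can be illustrated with a generic change-threshold filter, sketched below in Python. This is not the mechanism reported by the researchers. The function name `filter_at_source` and the relative `threshold` are hypothetical, and the point is only to show how dropping near-duplicate readings shrinks what downstream hardware has to handle.

```python
def filter_at_source(samples, threshold=0.2):
    """Forward a reading only when it differs from the last forwarded
    reading by more than `threshold` (as a fraction of that reading).

    Hypothetical illustration of sensor-level filtering in general.
    """
    forwarded = []
    last = None
    for index, value in enumerate(samples):
        if last is None or abs(value - last) > threshold * max(abs(last), 1e-9):
            forwarded.append((index, value))
            last = value
    return forwarded


readings = [1.0, 1.05, 1.1, 2.0, 2.1, 2.05, 0.5]
kept = filter_at_source(readings)
print(f"forwarded {len(kept)} of {len(readings)} readings:", kept)
```

With these sample readings, only the first value and the two large jumps are passed on, so the downstream processor sees three readings instead of seven.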

The study links advances in quantum materials with bio-inspired engineering, using insights from neuroscience to rethink how machines see. While traditional machine vision systems rely on rigid processing rules, this sensor integrates a more organic and flexible design, capable of learning and adjusting based on previous exposure—much like the human visual system.

The researchers noted that their device currently works as a single sensor unit, and they anticipate scaling up to larger sensor arrays. They are also exploring edge-AI integration, which would allow artificial intelligence processing to occur directly on the sensor chip itself, further reducing latency and power consumption.

Looking ahead, the team plans to develop applications for smart cars and autonomous robots that must operate under rapidly changing lighting scenarios. If successful, the technology could pave the way for low-power, high-reliability vision systems that help machines operate safely and effectively in environments where current sensors fall short.

“Immediate uses for our device are in autonomous vehicles and robots operating in changing light conditions like going from tunnels to sunlight, but it could potentially inspire future low-power vision systems,” Ye pointed out. “Its core value is enabling machines to see reliably where current vision sensors fail.”