- Researchers at Tohoku University and collaborators have created TactileAloha, a robotic system that integrates vision and touch, achieving higher task success rates than vision-only approaches.

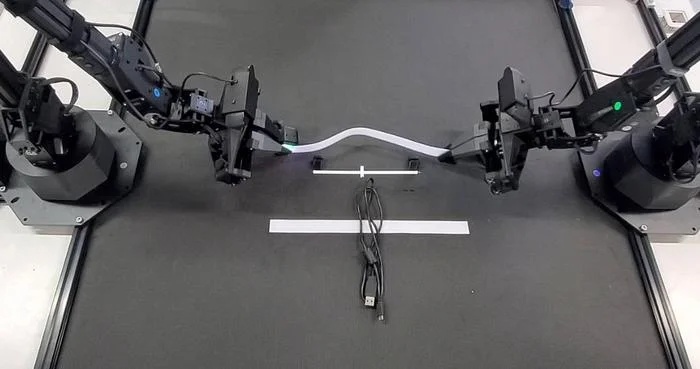

- Built on Stanford’s open-source ALOHA dual-arm platform, the system uses vision-tactile transformer technology to make decisions based on both appearance and texture, improving performance in tasks like handling Velcro and zip ties.

- Published in IEEE Robotics and Automation Letters, the work advances multimodal physical AI, with potential applications in household assistance, industrial automation, and adaptive human-robot interaction.

Researchers at Tohoku University and international collaborators have developed a robotic system that combines vision and touch to perform everyday tasks with greater success than conventional vision-only approaches. The study, published in IEEE Robotics and Automation Letters, demonstrates how integrating multiple sensory inputs enables more adaptive and accurate robotic control, according to the university.

As the researchers noted, humans seamlessly merge sight and touch when performing simple actions, such as picking up a coffee cup. Most robotic systems, however, rely heavily on vision, which limits their ability to distinguish object properties such as texture or orientation. The researchers give Velcro as an example: telling the front from the back is far easier by touch than by sight. To address this, the team built a system called TactileAloha, extending Stanford University’s open-source ALOHA dual-arm robot platform.

“To overcome these limitations, we developed a system that also enables operational decisions based on the texture of target objects – which are difficult to judge from visual information alone,” noted Mitsuhiro Hayashibe, a professor at Tohoku University’s Graduate School of Engineering. “This achievement represents an important step toward realizing a multimodal physical AI that integrates and processes multiple senses such as vision, hearing, and touch – just like we do.”

TactileAloha employs vision-tactile transformer technology, allowing robots to make operational decisions based on both how objects look and how they feel. In tests involving materials like Velcro and zip ties—where texture and front-back differences matter—the system enabled robots to carry out bimanual operations with higher accuracy and flexibility. Compared to vision-only methods, the tactile-augmented approach achieved higher task success rates.

The work represents a step toward developing multimodal physical AI, where machines integrate and process multiple senses—vision, hearing, and touch—similar to humans. Potential applications range from household assistance to industrial automation, where adaptive manipulation is critical.

The research team included members from Tohoku University’s Graduate School of Engineering, the Centre for Transformative Garment Production at Hong Kong Science Park, and the University of Hong Kong.