Insider Brief

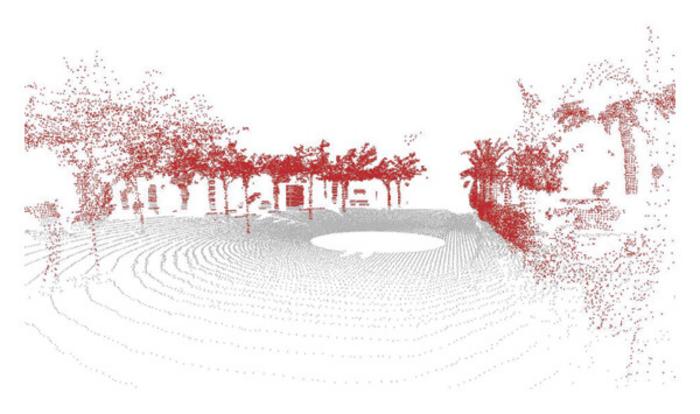

- Researchers at Miguel Hernández University of Elche developed MCL-DLF, a hierarchical 3D LiDAR localization system designed to help mobile robots recover and maintain accurate positioning in large, changing indoor and outdoor environments.

- The framework addresses the “kidnapped robot” problem by combining coarse global feature recognition with fine local feature analysis, integrating deep learning and probabilistic Monte Carlo Localization to update multiple pose hypotheses in real time.

- In multi-month campus tests, the system achieved higher positional accuracy and lower variability than conventional approaches, supporting applications in service robotics, warehouse automation, infrastructure inspection and environmental monitoring.

Robots navigating indoors or around buildings cannot rely solely on satellite signals, which often degrade or disappear. A new study from Miguel Hernández University of Elche (UMH) proposes a hierarchical 3D LiDAR localization framework designed to help mobile robots recover and maintain accurate positioning over long periods in dynamic environments.

Published in the International Journal of Intelligent Systems, the research introduces MCL-DLF (Monte Carlo Localization – Deep Local Feature), a coarse-to-fine localization system validated over several months across the UMH Elche campus in both indoor and outdoor settings.

The study was funded by Spain’s Ministry of Science, Innovation and Universities and the State Research Agency, with additional support from the European Regional Development Fund and the regional government of Valencia through its PROMETEO research program.

How Does the Study Address the “Kidnapped Robot” Problem?

The system addresses the “kidnapped robot” problem — a scenario in which a robot loses knowledge of its pose after being powered off, displaced, or physically moved. In such cases, the robot must re-estimate its location without relying on prior initialization.

The researchers, Míriam Máximo, Antonio Santo, Arturo Gil, Mónica Ballesta and David Valiente at the Engineering Research Institute of Elche at Miguel Hernández University in Spain, said they have developed a two-stage hierarchical approach inspired by human orientation strategies:

- Coarse localization: The robot first identifies its approximate region using global structural features extracted from 3D LiDAR point clouds, such as buildings or vegetation.

- Fine localization: Once narrowed to a region, the system analyzes detailed local features to determine precise position and orientation.
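The two stages above can be sketched as a nearest-descriptor lookup. This is only an illustration, not the UMH implementation: the `regions` map layout, the descriptor vectors and the function names are hypothetical, and plain Euclidean distance stands in for whatever similarity measure the learned features actually use.

```python
import math

def distance(a, b):
    """Euclidean distance between two descriptor vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def coarse_to_fine(query_global, query_local, regions):
    """Pick a region by global descriptor, then a pose inside it by local descriptor.

    `regions` is a hypothetical map:
        name -> {"global": descriptor, "places": [(pose, local_descriptor), ...]}
    """
    # Coarse stage: the region whose global descriptor is closest to the query.
    region = min(regions, key=lambda name: distance(query_global, regions[name]["global"]))
    # Fine stage: the stored pose in that region whose local descriptor is closest.
    pose, _ = min(regions[region]["places"], key=lambda place: distance(query_local, place[1]))
    return region, pose

# Toy map with two regions and two stored poses (x, y, heading) in each.
TOY_MAP = {
    "courtyard": {"global": [1.0, 0.0],
                  "places": [((0.0, 0.0, 0.0), [0.9, 0.1]), ((5.0, 0.0, 1.57), [0.1, 0.9])]},
    "corridor": {"global": [0.0, 1.0],
                 "places": [((20.0, 3.0, 0.0), [0.5, 0.5]), ((25.0, 3.0, 0.0), [0.2, 0.8])]},
}
```

Calling `coarse_to_fine([0.1, 0.9], [0.25, 0.75], TOY_MAP)` first selects the corridor region and then the stored pose whose local descriptor best matches the query. Restricting the fine search to one region keeps the detailed comparison small even when the full map is large.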

Instead of relying on predefined geometric rules, the framework integrates deep learning to extract discriminative local features from 3D point clouds. These learned features are fused with probabilistic Monte Carlo Localization, which maintains and updates multiple pose hypotheses as new sensor data are received, according to UMH.
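To make the idea of maintaining and updating multiple pose hypotheses concrete, here is a minimal, generic Monte Carlo Localization loop. It is a sketch under stated assumptions rather than the MCL-DLF code: the observation is assumed to be a noisy position fix (standing in for the output of feature matching), motion is assumed to be given directly in world coordinates, and all function names, noise levels and the 20 m by 20 m area are hypothetical.

```python
import math
import random

def predict(particles, dx, dy, dtheta, noise=0.1, rng=random):
    """Shift every pose hypothesis by the motion increment plus Gaussian noise."""
    return [(x + dx + rng.gauss(0, noise),
             y + dy + rng.gauss(0, noise),
             (th + dtheta + rng.gauss(0, noise)) % (2 * math.pi))
            for x, y, th in particles]

def update(particles, observed_xy, sigma=0.5):
    """Return normalized weights from a Gaussian likelihood of the observed position."""
    ox, oy = observed_xy
    weights = [math.exp(-((x - ox) ** 2 + (y - oy) ** 2) / (2 * sigma ** 2))
               for x, y, _ in particles]
    total = sum(weights)
    if total == 0.0:
        # No hypothesis explains the observation: fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]

def resample(particles, weights, rng=random):
    """Draw a new particle set, favoring hypotheses with higher weight."""
    return rng.choices(particles, weights=weights, k=len(particles))

def estimate(particles):
    """Mean (x, y) over all hypotheses."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)

def simulate(steps=30, n=2000, seed=0):
    """Kidnapped-robot toy run: start with no prior, return final position error in meters."""
    rng = random.Random(seed)
    truth = [12.0, 7.0]
    # No prior knowledge of the pose: spread hypotheses uniformly over the area.
    particles = [(rng.uniform(0, 20), rng.uniform(0, 20), rng.uniform(0, 2 * math.pi))
                 for _ in range(n)]
    for _ in range(steps):
        truth[0] += 0.1                                   # robot moves 0.1 m along x
        particles = predict(particles, 0.1, 0.0, 0.0, rng=rng)
        observed = (truth[0] + rng.gauss(0, 0.2), truth[1] + rng.gauss(0, 0.2))
        weights = update(particles, observed)
        particles = resample(particles, weights, rng)
    ex, ey = estimate(particles)
    return math.hypot(ex - truth[0], ey - truth[1])

if __name__ == "__main__":
    print(f"final position error: {simulate():.2f} m")
```

Because the particles start out spread across the whole area, the filter needs no initial pose, which is the property that matters for the kidnapped-robot case. In a system like the one described, the likelihood in `update` would come from comparing learned LiDAR features with the map rather than from a direct position fix.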

How Does It Perform?

Long-term deployment tests showed that MCL-DLF achieved higher positional accuracy than conventional localization methods, with comparable or improved orientation estimates along certain trajectories, the researchers noted. The system also showed lower variability over time, indicating robustness to seasonal changes, vegetation growth and structural variation.

This stability is critical for long-term autonomous operation in real-world environments, particularly outdoors, where appearance shifts over weeks or months.

What Are the Applications and Implications?

Reliable localization underpins service robotics, warehouse automation, infrastructure inspection, environmental monitoring and autonomous vehicles, UMH noted. Systems capable of recovering from pose loss without external infrastructure move robots closer to sustained operation in large, dynamic spaces.