Insider Brief

- IBM’s Spyre accelerator chip, with 32 cores and 25.6 billion transistors, is designed to scale enterprise AI workloads efficiently on IBM Z systems.

- Spyre’s architecture reduces latency and energy use by optimizing data flow directly between compute engines and supports lower precision numeric formats like int4 and int8.

- IBM aims to expand Spyre’s capabilities beyond AI inferencing, exploring model fine-tuning and training on mainframes while maintaining data security and regulatory compliance.

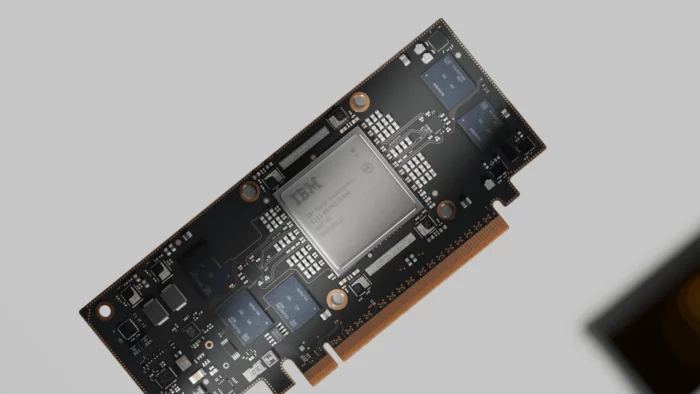

At Hot Chips 2024 in Palo Alto, California, IBM introduced its latest addition to the AI hardware landscape, the Spyre accelerator, according to a post on the IBM Research blog. Designed for IBM Z systems, Spyre aims to enhance enterprise AI capabilities by accelerating AI inferencing and expanding the computational power available for enterprise-level AI workloads.

Technical Specifications and Design

The Spyre accelerator is a product of collaboration between IBM Z and IBM Research and represents a significant leap from its predecessors, according to the blog. The team writes that the chip features 32 individual accelerator cores and contains 25.6 billion transistors, connected by 14 miles of wire. Manufactured on a 5-nanometer process node, Spyre is intended to deliver the efficiency and scalability that the next generation of enterprise AI workloads will require.

Mounted on a PCIe card, the Spyre accelerator allows for modular scalability. A single IBM Z system can cluster up to eight Spyre cards, providing an additional 256 accelerator cores (8 cards × 32 cores). This modularity means that as enterprise AI demands grow, organizations can incrementally expand their computational resources without overhauling existing infrastructure.
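The scaling arithmetic can be sketched as a quick back-of-the-envelope calculation. The Python below assumes only the figures quoted in the blog post (32 cores and 25.6 billion transistors per card, up to eight cards per system); `spyre_capacity` is a hypothetical helper for illustration, not part of any IBM API.

```python
CORES_PER_CARD = 32            # per the IBM Research blog post
TRANSISTORS_PER_CARD = 25.6e9  # per the IBM Research blog post
MAX_CARDS_PER_SYSTEM = 8       # maximum cluster size on one IBM Z system


def spyre_capacity(cards: int) -> tuple[int, float]:
    """Hypothetical helper: total accelerator cores and transistors for a card count."""
    if not 1 <= cards <= MAX_CARDS_PER_SYSTEM:
        raise ValueError(f"cards must be between 1 and {MAX_CARDS_PER_SYSTEM}")
    return cards * CORES_PER_CARD, cards * TRANSISTORS_PER_CARD


if __name__ == "__main__":
    # Show how capacity grows as cards are added incrementally.
    for n in (1, 2, 4, 8):
        cores, transistors = spyre_capacity(n)
        print(f"{n} card(s): {cores} cores, {transistors / 1e9:.1f}B transistors")
```

The last line printed is `8 card(s): 256 cores, 204.8B transistors`, matching the 256-core figure for a fully populated system.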

Performance and Energy Efficiency

The architecture of the Spyre chip is optimized specifically for AI tasks, distinguishing it from conventional CPUs. Traditional processors shuttle instructions and data back and forth between the processing unit and memory, a process that can create bottlenecks in data-intensive operations like AI. In contrast, Spyre’s design enables data to flow directly from one compute engine to the next, reducing latency and energy consumption.
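The difference between the two styles can be illustrated with a toy model that counts memory accesses. This is a conceptual sketch under stated assumptions, not a description of Spyre's actual microarchitecture: `CountingMemory`, `run_load_store` and `run_dataflow` are hypothetical names, and each "stage" stands in for one compute engine. In the load/store style every intermediate result is written to memory and read back by the next stage; in the dataflow style intermediates pass directly between stages, so only the initial input and final output touch memory.

```python
from typing import Callable, Dict, List

Stage = Callable[[float], float]


class CountingMemory:
    """Toy stand-in for memory that counts every read and write."""

    def __init__(self, initial: Dict[str, float]) -> None:
        self._data = dict(initial)  # preloaded contents are not counted
        self.accesses = 0

    def read(self, key: str) -> float:
        self.accesses += 1
        return self._data[key]

    def write(self, key: str, value: float) -> None:
        self.accesses += 1
        self._data[key] = value


def run_load_store(stages: List[Stage], mem: CountingMemory) -> float:
    """Each stage reads its input from memory and writes its output back."""
    if not stages:
        raise ValueError("need at least one stage")
    key = "input"
    result = 0.0
    for i, stage in enumerate(stages):
        result = stage(mem.read(key))  # fetch the previous result from memory
        key = f"stage{i}"
        mem.write(key, result)         # spill the intermediate back to memory
    return result


def run_dataflow(stages: List[Stage], mem: CountingMemory) -> float:
    """Intermediates flow directly between stages; memory is touched only at the ends."""
    x = mem.read("input")
    for stage in stages:
        x = stage(x)  # handed straight to the next stage, no memory round trip
    mem.write("output", x)
    return x


if __name__ == "__main__":
    pipeline = [lambda v: v * 2, lambda v: v + 1, lambda v: v ** 2]
    mem_a = CountingMemory({"input": 3.0})
    mem_b = CountingMemory({"input": 3.0})
    print("load/store:", run_load_store(pipeline, mem_a), "accesses:", mem_a.accesses)
    print("dataflow:  ", run_dataflow(pipeline, mem_b), "accesses:", mem_b.accesses)
```

With three stages both versions compute the same result, but the load/store version makes six memory accesses (one read and one write per stage) while the dataflow version makes two; the gap grows linearly with pipeline depth, which is the intuition behind the latency and energy claims above.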

The post indicates that the Spyre accelerator supports a variety of lower-precision numeric formats, such as int4 and int8, which are particularly suited to AI inferencing. These formats not only conserve memory but also improve the energy efficiency of AI model execution. This focus on efficient computation positions Spyre as a key tool for organizations looking to balance performance with sustainability.
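To make the memory argument concrete, here is a minimal sketch of symmetric per-tensor quantization, a common generic technique for mapping floating-point weights to int8 or int4. It is not IBM's implementation: the function names are hypothetical, and the sketch assumes a single scale factor for the whole tensor and round-to-nearest.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for int8, 7 for int4 (symmetric range)
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the quantized integers."""
    return [x * scale for x in q]


def storage_bytes(num_values: int, bits: int) -> int:
    """Bytes needed to pack num_values integers of the given bit width."""
    return (num_values * bits + 7) // 8


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.8]
    for bits in (8, 4):
        q, scale = quantize_symmetric(weights, bits)
        err = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
        print(f"int{bits}: {q}, max error {err:.4f}")
    # Storage for one million weights at different precisions.
    for bits in (32, 8, 4):
        print(f"{bits}-bit: {storage_bytes(1_000_000, bits):,} bytes")
```

The int4 encoding needs half the storage of int8 and one eighth of 32-bit floats, at the cost of a coarser grid: the worst-case rounding error is half the scale, so fewer bits means a larger error per weight.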

Enterprise Application and Integration

IBM Z mainframes currently process around 70% of global transactions by value, underscoring the importance of integrating AI capabilities into these systems. The Spyre accelerator is designed to facilitate the deployment of sophisticated AI models on IBM Z, enabling enterprises to perform tasks like fraud detection with greater accuracy and speed.

The implications of this advance might extend beyond simple transactional analysis, the team suggests. With the Spyre accelerator, IBM Z systems can handle more complex AI models, capable of identifying intricate patterns that may elude less powerful systems. This capability is particularly valuable in sectors where precision and reliability are paramount, such as finance, healthcare and supply chain management.

Future Directions

IBM’s roadmap for the Spyre accelerator and IBM Z systems is ambitious. The current focus is on expanding the capabilities of AI inferencing within enterprise environments, but IBM Research is already looking ahead. Teams are working on methods to bring model fine-tuning and potentially even AI training onto the mainframe itself. This development could revolutionize how organizations handle sensitive data, allowing them to train and deploy AI models entirely on-premises, thereby maintaining strict data security and compliance with regulatory standards.

The Spyre accelerator also integrates with IBM's watsonx AI and data platform, supporting tools like watsonx Code Assistant. This pairing is meant to make modernizing legacy code bases on mainframes more efficient, using generative AI to automate and optimize the process. As IBM continues to refine this technology, the range of AI-driven enterprise applications is likely to widen, with each new iteration of IBM Z systems poised to push the envelope further.

Industry Impact and Future Prospects

The introduction of the Spyre accelerator signals IBM’s continued commitment to leadership in the enterprise computing space. By equipping IBM Z systems with advanced AI capabilities, IBM is not only enhancing the performance of existing applications but also paving the way for new, more complex use cases that require robust AI infrastructure.

Looking forward, the AI Insider team speculates that IBM's focus will likely shift towards enabling more comprehensive AI functions on the mainframe, including training and fine-tuning models in highly secure environments. This direction aligns with the growing demand for AI solutions that can operate within stringent regulatory frameworks, particularly in industries like finance and healthcare.