Insider Brief

- A new study posted to arXiv argues that small language models (SLMs) are better suited than large models for most AI agent tasks.

- Researchers say SLMs are cheaper, faster, and flexible enough to replace up to 70% of large-model calls in some systems.

- The paper outlines methods to shift from large to small models and predicts hybrid systems will dominate the industry.

Small language models may hold the key to the future of AI agents, according to a new study posted on arXiv by researchers at NVIDIA and the Georgia Institute of Technology. The paper argues that compact models are not only powerful enough for many everyday tasks but are also cheaper, faster, and more sustainable than today’s massive language systems.

The study, Small Language Models are the Future of Agentic AI, contends that large language models (LLMs) are overused in agentic AI, meaning systems in which software agents complete tasks autonomously by invoking tools, reasoning through steps, and interacting with users. While LLMs dominate the infrastructure powering these systems, the researchers say most agentic tasks are repetitive and narrow in scope and don’t require the broad conversational skills of large models.

Instead, the paper concludes that small language models (SLMs), typically with fewer than 10 billion parameters, are “sufficiently powerful” for the bulk of these jobs. Modern examples, including Microsoft’s Phi-3 small, NVIDIA’s Nemotron-H, Hugging Face’s SmolLM2, and DeepSeek-R1 distillations, already show performance comparable to much larger models while running at a fraction of the cost. Benchmarks cited in the study suggest SLMs can handle commonsense reasoning, code generation, and tool calling with accuracy rivaling or surpassing earlier generations of LLMs.

Less Expensive, More Flexible

The implications could be significant, the researchers say. They point out that running a 7-billion-parameter model is 10 to 30 times cheaper in energy and latency than operating a frontier-scale system with hundreds of billions of parameters. With agentic AI projected to grow from a $5.2 billion market in 2024 to nearly $200 billion by 2034, the authors argue that a shift toward SLMs could save billions in infrastructure costs and reduce the environmental burden of large-scale AI computing.
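That market projection implies very steep growth. A quick back-of-the-envelope check, which is not in the paper and treats "nearly $200 billion" as exactly $200 billion, puts the implied compound annual growth rate at roughly 44% per year:

```python
# Implied compound annual growth rate (CAGR) of the agentic AI market.
# Assumption: "nearly $200 billion" is rounded to exactly 200 for this estimate.
start_value = 5.2    # market size in 2024, billions of USD
end_value = 200.0    # approximate market size in 2034, billions of USD
years = 2034 - 2024  # ten years of growth

# CAGR solves start_value * (1 + r) ** years == end_value for r.
cagr = (end_value / start_value) ** (1 / years) - 1
print(f"Implied CAGR: {cagr:.1%}")
```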

Beyond economics, the researchers highlight flexibility. Small models can be fine-tuned cheaply and quickly, often overnight, to specialize in tasks such as formatting outputs, parsing commands, or generating boilerplate code. That agility lets companies adapt their systems more rapidly to user demands or regulatory requirements. The study also frames SLM adoption as a democratizing force: it lowers the barrier for smaller organizations to build capable agents of their own instead of relying on centralized cloud services and costly LLM APIs.

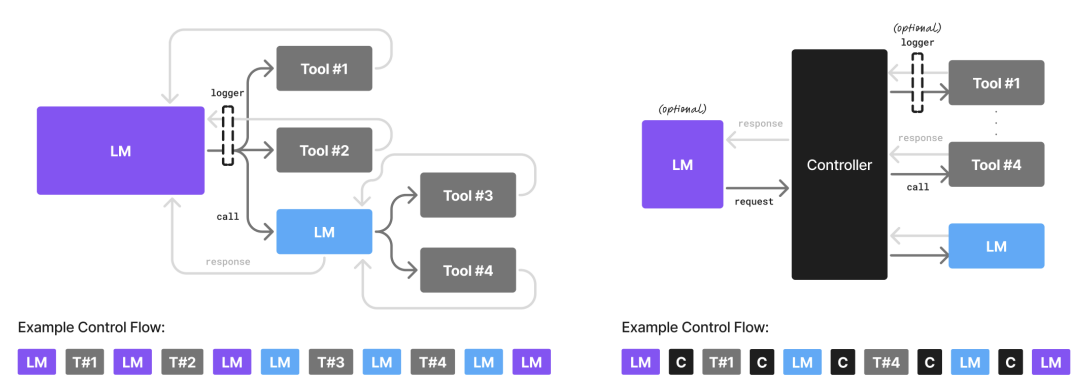

In cases where broader conversational ability is needed, the paper suggests hybrid systems: agents that rely on SLMs for most work but selectively invoke large models for open-ended reasoning or complex dialogue. This modular approach, the researchers argue, offers the best balance of efficiency and capability.
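The paper describes this hybrid pattern at the level of architecture rather than code. A minimal sketch of the idea might look like the following, assuming each request already carries a task label and using hypothetical stand-ins (`call_slm`, `call_llm`, and the `SLM_TASKS` set) in place of real model endpoints:

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical task categories a fine-tuned SLM is assumed to handle well.
SLM_TASKS = {"format_output", "parse_command", "boilerplate_code", "tool_call"}


@dataclass
class Request:
    task_type: str  # label assumed to be assigned upstream (e.g. by a classifier)
    prompt: str


def call_slm(prompt: str) -> str:
    # Stand-in for a locally served small model.
    return f"[slm] {prompt}"


def call_llm(prompt: str) -> str:
    # Stand-in for a large model behind a cloud API.
    return f"[llm] {prompt}"


def route(request: Request,
          slm: Callable[[str], str] = call_slm,
          llm: Callable[[str], str] = call_llm) -> str:
    """Send narrow, repetitive tasks to the SLM; escalate everything else to the LLM."""
    if request.task_type in SLM_TASKS:
        return slm(request.prompt)
    return llm(request.prompt)


if __name__ == "__main__":
    print(route(Request("parse_command", "open the settings file")))
    print(route(Request("open_ended_dialogue", "help me plan a product launch")))
```

A fixed set of labels keeps the sketch self-contained; a real deployment might instead route on a learned classifier or a confidence threshold, with the large model kept as the fallback for anything the small model is not trusted to handle.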

How The Researchers Conducted The Study

The researchers conducted detailed comparisons between model families, focusing on metrics such as reasoning accuracy, instruction-following performance, and cost of inference. The paper also outlines a conversion algorithm to help developers move existing LLM-powered agents to SLM-first architectures. That process involves collecting agent interaction data, clustering tasks into categories, fine-tuning small models for those categories, and refining them iteratively as more usage data becomes available.
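Those steps can be pictured as a single pass of a loop. This is an illustrative sketch, not the paper's implementation: every function name is hypothetical, the logged calls are toy data, fine-tuning is stubbed out, and the clustering step is swapped for a much simpler grouping by the tool each call invoked (a production pipeline would more plausibly cluster prompt embeddings).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LoggedCall:
    prompt: str
    tool: str  # tool the agent invoked; "none" marks open-ended calls (assumed logging scheme)
    response: str


def collect_calls() -> list[LoggedCall]:
    # Step 1 (toy stand-in): real data would come from the agent's interaction logs.
    return [
        LoggedCall("list files in /tmp", "shell", "ls /tmp"),
        LoggedCall("show disk usage", "shell", "df -h"),
        LoggedCall("make this JSON", "formatter", '{"a": 1}'),
        LoggedCall("summarize the quarterly strategy memo", "none", "..."),
    ]


def cluster_by_tool(calls: list[LoggedCall]) -> dict[str, list[LoggedCall]]:
    # Step 2 (simplified): group calls by tool instead of clustering embeddings.
    clusters = defaultdict(list)
    for call in calls:
        clusters[call.tool].append(call)
    return dict(clusters)


def fine_tune_slm(task: str, examples: list[LoggedCall]) -> str:
    # Step 3 (hypothetical stub): would launch a fine-tuning job and return a model ID.
    return f"slm-{task}-v1"


def convert(min_examples: int = 2) -> dict[str, str]:
    """One pass of the conversion loop; rerun it as new usage data arrives (step 4)."""
    models = {}
    for task, examples in cluster_by_tool(collect_calls()).items():
        # Open-ended calls stay with the LLM; tiny clusters wait for more data.
        if task != "none" and len(examples) >= min_examples:
            models[task] = fine_tune_slm(task, examples)
    return models


if __name__ == "__main__":
    print(convert())
```

With the default threshold only the `shell` cluster has enough examples to be handed to a small model; the `min_examples` cutoff stands in for the iterative part of the process, since clusters that are too small today can qualify on a later pass.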

The researchers also present case studies of three open-source agents (MetaGPT, Open Operator, and Cradle) to estimate the share of large-model queries that could be replaced. Across these examples, they estimate that between 40% and 70% of LLM calls could be handled reliably by specialized SLMs, with the remaining queries requiring the broader abilities of larger models.
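To see what those shares could mean for cost, the two ranges reported in the article can be combined in a rough model. The sketch below is illustrative only and not a calculation from the paper: it assumes every call would otherwise cost the same on the LLM and treats the 10-to-30-times figure, which the article states in terms of energy and latency, as a simple per-call cost ratio.

```python
def blended_cost(replaced_share: float, cost_ratio: float) -> float:
    """Cost of a mixed SLM/LLM workload relative to an all-LLM baseline of 1.0.

    replaced_share: fraction of calls moved to an SLM (0.4 to 0.7 in the case studies)
    cost_ratio: how many times cheaper an SLM call is (10 to 30 per the article)
    Assumes every call would otherwise cost the same amount on the LLM.
    """
    if not 0.0 <= replaced_share <= 1.0:
        raise ValueError("replaced_share must be between 0 and 1")
    if cost_ratio <= 0:
        raise ValueError("cost_ratio must be positive")
    # Calls that stay on the LLM cost 1 each; replaced calls cost 1 / cost_ratio each.
    return (1.0 - replaced_share) + replaced_share / cost_ratio


if __name__ == "__main__":
    for share in (0.4, 0.7):
        for ratio in (10, 30):
            saving = 1.0 - blended_cost(share, ratio)
            print(f"replace {share:.0%} of calls, SLM {ratio}x cheaper: {saving:.0%} saving")
```

Under these assumptions the savings range from about 36% to about 68%, and they can never exceed the share of calls replaced, however cheap the small model is. That is why the 40% to 70% replacement estimate matters as much as the per-call price gap.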

Barriers to Adoption

The researchers acknowledge that there are still barriers to adoption. Billions of dollars have already been invested in centralized LLM infrastructure, creating inertia that favors continued reliance on large models. Training and evaluation benchmarks also tend to emphasize general language performance, which favors large systems, rather than narrow agentic tasks where SLMs excel. Finally, smaller models have received less public attention and marketing, leaving many enterprises unaware of their potential.

The authors also address counterarguments. Some researchers argue that scaling laws guarantee LLMs will always outperform smaller models in general language tasks. Others suggest centralized inference of large models may be cheaper at scale. The paper concedes that these views carry weight but maintains that specialization and modularity make SLMs the more pragmatic choice for most agentic AI scenarios.

Looking ahead, the study calls for wider experimentation with SLM deployment and for industry benchmarks that better reflect the demands of agentic systems. It predicts that heterogeneous architectures, where small and large models work side by side, will become the norm. The authors stress that cost savings and efficiency gains from even partial replacement of LLMs could reshape the economics of the AI industry.

They also highlight sustainability. Smaller models consume less energy and require fewer data center resources. As demand for AI infrastructure grows, the researchers suggest that environmental concerns will become a decisive factor in favor of compact models.

Call For More Discussion

The researchers say the implications of agentic AI for the economy and for society itself make further investigation and more conversations with experts necessary.

They write: “The agentic AI industry is showing the signs of a promise to have a transformative effect on white collar work and beyond. It is the view of the authors that any expense savings or improvements on the sustainability of AI infrastructure would act as a catalyst for this transformation, and that it is thus eminently desirable to explore all options for doing so. We therefore call for both contributions to and critique of our position, to be directed to agents@nvidia.com, and commit to publishing all such correspondence at research.nvidia.com/labs/lpr/slm-agents.”