Insider Brief

- MIT researchers have developed an AI-based system that enables robots to generate fast, accurate 3D maps from uncalibrated onboard cameras, addressing scalability limitations in SLAM for real-time navigation.

- The method incrementally creates and aligns submaps using mathematical transformations, avoiding the need for global optimization or special sensors, and achieved sub-5 cm reconstruction errors in tests using standard cellphone video.

- The system, to be presented at NeurIPS 2025, was developed by Dominic Maggio, Hyungtae Lim, and Luca Carlone with support from the U.S. NSF, ONR, and the National Research Foundation of Korea.

MIT researchers have developed an AI-driven system that enables robots to quickly generate accurate 3D maps of complex environments using uncalibrated onboard cameras, addressing a longstanding challenge in real-time navigation for search-and-rescue and other field robotics.

The work, to be presented at NeurIPS 2025, was supported by the U.S. National Science Foundation, Office of Naval Research, and National Research Foundation of Korea.

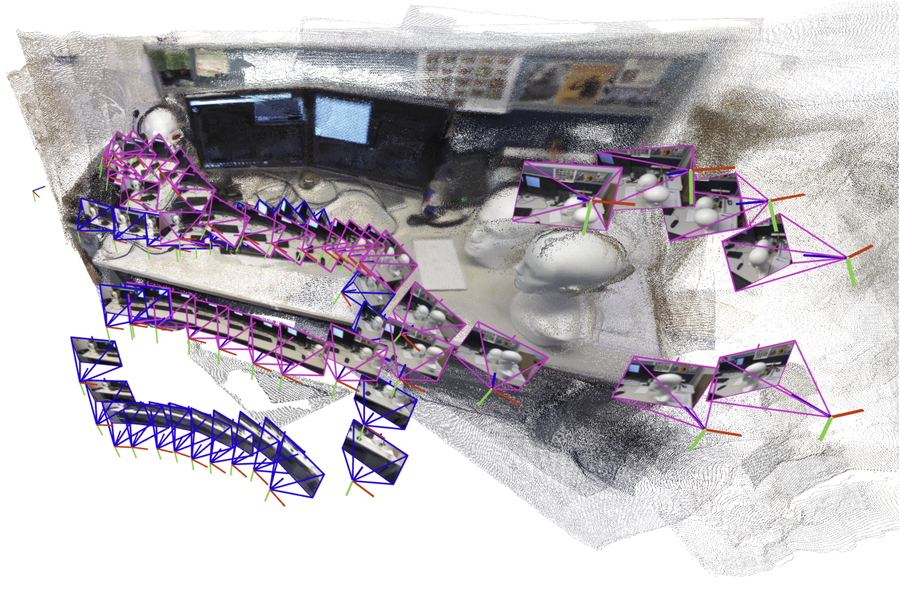

According to MIT, the new method tackles a key limitation of current machine learning models for simultaneous localization and mapping (SLAM): they can typically process only a few dozen images at a time, so they fail to scale to time-sensitive, large-scale scenarios. Instead of relying on global optimization or camera calibration, the MIT approach incrementally generates smaller submaps from image data and uses mathematical transformations to align them consistently into a full 3D reconstruction.
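To make the submap idea concrete, here is a minimal Python sketch of how pairwise alignments can be chained so that every submap ends up in the first submap's coordinate frame without a global optimization pass. It is an illustration under stated assumptions, not the researchers' implementation: the article does not say which kind of transformation the method uses, so the sketch treats each alignment as a generic, already-estimated 4x4 homogeneous matrix, and the function names (`translation`, `chain_submaps`, `apply_transform`) are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def identity4() -> Matrix:
    """Return the 4x4 identity matrix."""
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def translation(tx: float, ty: float, tz: float) -> Matrix:
    """Hypothetical helper: a pure-translation alignment, used only for the demo."""
    t = identity4()
    t[0][3], t[1][3], t[2][3] = float(tx), float(ty), float(tz)
    return t


def matmul4(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def chain_submaps(relative: List[Matrix]) -> List[Matrix]:
    """Compose pairwise alignments into transforms to submap 0's frame.

    Assumption: relative[i] maps coordinates of submap i+1 into submap i.
    The result has one entry per submap; entry 0 is the identity.
    """
    to_first = [identity4()]
    for rel in relative:
        # T(0 <- i+1) = T(0 <- i) * T(i <- i+1)
        to_first.append(matmul4(to_first[-1], rel))
    return to_first


def apply_transform(t: Matrix, point: Sequence[float]) -> Tuple[float, float, float]:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    h = [float(point[0]), float(point[1]), float(point[2]), 1.0]
    out = [sum(t[i][k] * h[k] for k in range(4)) for i in range(4)]
    w = out[3]  # equals 1 for rigid or similarity transforms
    return (out[0] / w, out[1] / w, out[2] / w)
```

For example, with two relative alignments that shift by 1 unit along x and then 2 units along y, `chain_submaps` places the origin of the third submap at (1, 2, 0) in the first submap's frame. Each new submap costs one matrix product, which is why an incremental scheme of this kind scales with the number of submaps rather than the number of images.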

“For robots to accomplish increasingly complex tasks, they need much more complex map representations of the world around them. But at the same time, we don’t want to make it harder to implement these maps in practice. We’ve shown that it is possible to generate an accurate 3D reconstruction in a matter of seconds with a tool that works out of the box,” said Dominic Maggio, an MIT graduate student and lead author of a paper on the method.

The method draws on geometric insights from classical computer vision to resolve local distortions and ambiguities introduced by learning-based models, enabling reliable submap stitching without complex tuning, according to MIT.

Tested on scenes such as office corridors and the MIT Chapel using only cellphone video, the system achieved real-time performance with average 3D reconstruction errors under 5 centimeters. It also estimated the robot’s position in parallel, supporting closed-loop navigation. In comparative tests, the model outperformed other recent approaches in both speed and accuracy, and required no special sensors or hardware.
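The article does not say how the reconstruction error was measured. As a rough illustration of what an "average 3D reconstruction error" can mean, the sketch below computes the mean distance from each reconstructed point to its nearest point in a reference scan. The metric choice, the function name `mean_nearest_distance`, and the assumption that both point sets are already expressed in the same frame and in meters are ours, not the researchers'.

```python
import math
from typing import Sequence

Point = Sequence[float]


def mean_nearest_distance(reconstructed: Sequence[Point],
                          reference: Sequence[Point]) -> float:
    """Mean distance from each reconstructed point to its nearest reference point.

    Brute-force O(n * m) search, which is fine for a small demo; a spatial
    index such as a k-d tree would be the usual choice for large point clouds.
    """
    if not reconstructed or not reference:
        raise ValueError("both point sets must be non-empty")
    total = 0.0
    for p in reconstructed:
        total += min(math.dist(p, q) for q in reference)
    return total / len(reconstructed)
```

Under these assumptions, two reconstructed points that sit 3 cm and 4 cm from their nearest reference points give a mean error of 3.5 cm, which would fall under the 5-centimeter figure quoted above.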

The research, which will be presented at the Conference on Neural Information Processing Systems, was conducted by Maggio, postdoc Hyungtae Lim, and senior author Luca Carlone, an associate professor in MIT’s Department of Aeronautics and Astronautics and director of the SPARK Lab.

The researchers plan to extend the mapping system to handle more complex environments and aim to deploy it on real-world robots operating in challenging conditions.
