Insider Brief

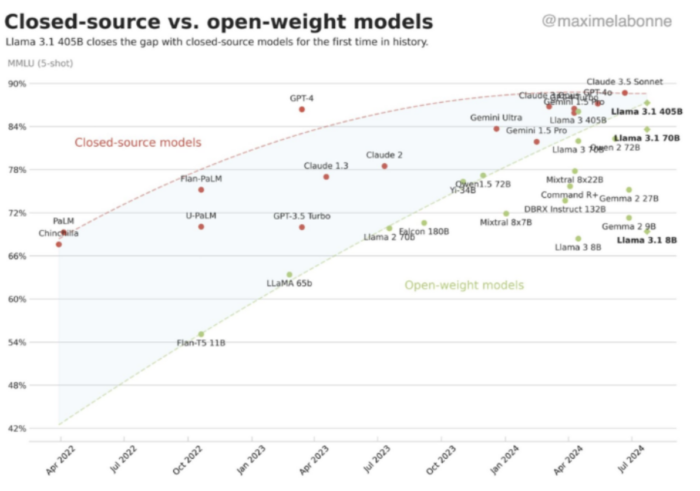

- Galileo’s benchmark reveals open-source language models are quickly closing the performance gap with proprietary models, reshaping the AI landscape.

- Anthropic’s Claude 3.5 Sonnet tops the index, outperforming OpenAI’s models and highlighting a shift in the AI arms race.

- Alibaba’s Qwen2-72B-Instruct leads among open-source models, indicating global competition and democratization of AI technology.

Artificial intelligence startup Galileo has released a comprehensive benchmark that appears to show a significant shift in the AI landscape. According to its findings, open-source language models are rapidly closing the performance gap with their proprietary counterparts, potentially democratizing advanced AI capabilities and accelerating innovation across industries. As reported by VentureBeat, this shift could lower barriers to entry for startups and researchers while pressuring established players to innovate faster or risk losing their edge.

The second annual Hallucination Index from Galileo evaluated 22 leading large language models on their tendency to generate inaccurate information. While closed-source models still lead overall, the margin has narrowed significantly in just eight months.

“The huge improvements in open-source models was absolutely incredible to see,” said Vikram Chatterji, co-founder and CEO of Galileo, in an interview with VentureBeat. “Back then [in October 2023] the first five or six were all closed source API models, mostly OpenAI models. Versus now, open source has been closing the gap.”

Changing of the Guard: Anthropic’s Claude 3.5 Sonnet Tops Index

Anthropic’s Claude 3.5 Sonnet emerged as the best-performing model across all tasks, dethroning the OpenAI offerings that dominated last year’s rankings, according to Chatterji. This shift indicates a changing of the guard in the AI arms race, with newer entrants challenging established leaders.

“We were extremely impressed by Anthropic’s latest set of models,” Chatterji said in the interview. “Not only was Sonnet able to perform excellently across short, medium, and long context windows, scoring an average of 0.97, 1, and 1 respectively across tasks, but the model’s support of up to a 200k context window suggests it could support even larger datasets than we tested.”

The index also highlighted the importance of considering cost-effectiveness alongside raw performance. Google’s Gemini 1.5 Flash emerged as the most efficient option, delivering strong results at a fraction of the price of top models. “The dollar per million prompt tokens cost for Flash was $0.35, but it was $3 for Sonnet,” Chatterji told VentureBeat. “When you look at the output, dollars per million response token cost, it’s about $1 for Flash, but it’s $15 for Sonnet. So now anyone who’s using Sonnet immediately has to have money in the bank, which is like, at least like 15 to 20x more, whereas literally Flash is not that much worse at all.”
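To make the price gap concrete, the figures Chatterji quotes can be turned into a small cost comparison. The sketch below is illustrative only: the per-million-token prices come from the quote above (with Flash's response price taken as the "about $1" he mentions), while the function names and the example workload of one million prompt tokens and one million response tokens are assumptions chosen for this illustration, not part of Galileo's index.

```python
# Prices in USD per million tokens, as quoted in the article.
# The Flash response price is "about $1" per the quote, so treat it as approximate.
PRICES = {
    "Gemini 1.5 Flash": {"prompt": 0.35, "response": 1.00},
    "Claude 3.5 Sonnet": {"prompt": 3.00, "response": 15.00},
}


def workload_cost(model: str, prompt_tokens: int, response_tokens: int) -> float:
    """Return the USD cost of a workload for the given model (hypothetical helper)."""
    price = PRICES[model]
    total = prompt_tokens * price["prompt"] + response_tokens * price["response"]
    return total / 1_000_000


def price_ratio(kind: str) -> float:
    """Return how many times more Sonnet costs than Flash for 'prompt' or 'response' tokens."""
    return PRICES["Claude 3.5 Sonnet"][kind] / PRICES["Gemini 1.5 Flash"][kind]


if __name__ == "__main__":
    # Assumed workload: 1M prompt tokens and 1M response tokens.
    for model in PRICES:
        cost = workload_cost(model, 1_000_000, 1_000_000)
        print(f"{model}: ${cost:.2f}")
    print(f"Prompt price ratio: {price_ratio('prompt'):.1f}x")
    print(f"Response price ratio: {price_ratio('response'):.1f}x")
```

On the quoted prices alone, Sonnet costs roughly 8.6 times as much as Flash per prompt token and 15 times as much per response token, so the overall multiple for a given application depends on its mix of prompt and response tokens.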

Global Competition: Alibaba’s Open-Source Model Makes Waves

Alibaba’s Qwen2-72B-Instruct performed best among open-source models, scoring highly on short and medium-length inputs. This success signals a broader trend of non-U.S. companies making significant strides in AI development, challenging the notion of American dominance in the field. Chatterji sees this as part of a larger democratization of AI technology.

“What I see this unlocking—using Llama 3, using Qwen—teams across the world, across different economic strata, can just start building really incredible products,” he told VentureBeat.

Chatterji added that these models are likely to become optimized for edge and mobile devices, leading to “incredible mobile apps and web apps and apps on the edge being built out with these open source models.”

The index introduced a new focus on how models handle different context lengths, from short snippets to long documents, reflecting the growing use of AI for tasks like summarizing lengthy reports or answering questions about extensive datasets.

“We focused on breaking that down based on context length — small, medium, and large,” Chatterji told VentureBeat. “That and the other big piece here was cost versus performance. Because that’s very top of mind for people.”

The index also revealed that bigger isn’t always better when it comes to AI models. In some cases, smaller models outperformed their larger counterparts, suggesting that efficient design can sometimes trump sheer scale.

“The Gemini 1.5 Flash model was an absolute revelation for us because it outperformed larger models,” Chatterji said. “This suggests that if you have great model design efficiency, that can outweigh the scale.”

Future of Language Models: Cost-Effective AI Integration

Galileo’s findings could reverberate across the industry and influence enterprise AI adoption. As open-source models improve and become more cost-effective, companies may deploy powerful AI capabilities without relying on expensive proprietary services. This could lead to more widespread AI integration across industries, potentially boosting productivity and innovation.

Galileo, which provides tools for monitoring and improving AI systems, is positioning itself as a key player in helping enterprises navigate the rapidly evolving landscape of language models. By offering regular, practical benchmarks, Galileo aims to become an essential resource for technical decision-makers.

“We want this to be something that our enterprise customers and our AI team users can just use as a powerful, ever-evolving resource for what’s the most efficient way to build out AI applications instead of just, you know, feeling through the dark and trying to figure it out,” Chatterji told the technology news site.

As the AI arms race intensifies and new models are released almost weekly, Galileo plans to update the benchmark quarterly, providing ongoing insight into the shifting balance between open-source and proprietary AI technologies.

Looking ahead, Chatterji anticipates further developments in the field. “We’re starting to see large models that are like operating systems for this very powerful reasoning,” he said. “And it’s going to become more and more generalizable over the course of the next maybe one to two years, as well as see the context lengths that they can support, especially on the open source side, will start increasing a lot more. Cost is going to go down quite a lot, just the laws of physics are going to kick in.”