Insider Brief

- Google DeepMind unveiled Gemini Robotics‑ER 1.5 (embodied‑reasoning VLM) and Gemini Robotics 1.5 (vision‑language‑action) to push “physical AI” so robots can perceive, plan, use tools and act on multi‑step tasks; Robotics‑ER 1.5 is available via the Gemini API and Robotics 1.5 to select partners.

- The models work in an agentic framework where Robotics‑ER 1.5 creates stepwise plans and can call tools (e.g., Search), while Robotics 1.5 performs pre‑action reasoning to convert visual context and natural‑language instructions into motor commands and transfer skills across different robot embodiments.

- Google DeepMind reports state‑of‑the‑art results on embodied spatial benchmarks for Robotics‑ER 1.5 and says safety is layered via upgraded ASIMOV evaluations and governance, with developer access and documentation in Google AI Studio to support general‑purpose robotics.

Google DeepMind introduced two models aimed at making robots more capable in the physical world, positioning its Gemini platform to handle perception, planning, tool use and action across multi‑step tasks, according to the company. The embodied‑reasoning model Gemini Robotics‑ER 1.5 is available to developers now via the Gemini API in Google AI Studio, while the vision‑language‑action model Gemini Robotics 1.5 is available to select partners, Google DeepMind Senior Director and Head of Robotics Carolina Parada announced in a blog post.

Most daily tasks demand context and multiple steps, which still trip up robots. Parada used recycling as an example, pointing out that to sort objects into compost, recycling, and trash “based on my location,” a robot must look up local rules online, inspect the items in front of it, decide how each maps to those rules, and then carry out the full sequence to put everything away. To make these complex, multi‑step jobs achievable, Google DeepMind designed two models that work together in an agentic framework: one to plan and reason about the task in context, and another to translate visual understanding and natural‑language instructions into precise motor commands. The aim is for the system to think before acting and execute the steps end to end.

What’s New

Gemini Robotics 1.5 is a vision‑language‑action (VLA) system that turns visual context and natural‑language instructions into motor commands. It is designed to “think before acting,” generating a stepwise rationale and exposing that process in plain language to make decisions more transparent, according to Google DeepMind. The model also learns across embodiments so skills trained on one robot can transfer to others, accelerating deployment.

Gemini Robotics‑ER 1.5 is a vision‑language model (VLM) optimized for embodied reasoning. Acting as a high‑level controller, it creates detailed, multi‑step plans, reasons about spatial relationships, estimates progress, and natively calls digital tools—such as Google Search or user‑defined functions—to fetch rules or information required to complete a mission.

How the Models Work Together

In Google DeepMind’s agentic framework, Robotics‑ER 1.5 orchestrates a task and issues natural‑language step instructions; Robotics 1.5 executes those steps by coupling vision with action. The pairing is meant to help robots handle longer, more varied jobs in cluttered, changing environments—for example, sorting items by local recycling rules requires web lookups, visual understanding and precise manipulation. Both models build on the core Gemini family but are fine‑tuned on different datasets to specialize for their roles, the company said.
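The division of labor described above can be illustrated with a toy sketch. It is not Google DeepMind's code and does not call the Gemini API: the `Planner`, `Executor`, `lookup_local_rules` and `run_task` names are hypothetical stand-ins, and the recycling rules are made-up sample data. It only shows the control flow, in which a planner fetches rules through a tool, turns what it sees into natural-language steps, and hands each step to an executor.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def lookup_local_rules(location: str) -> Dict[str, str]:
    """Hypothetical tool standing in for a web search; returns made-up sample rules."""
    return {"banana peel": "compost", "soda can": "recycling", "chip bag": "trash"}


@dataclass
class Planner:
    """Toy stand-in for the high-level reasoning model (the orchestrator role)."""
    tools: Dict[str, Callable[[str], Dict[str, str]]]

    def plan(self, location: str, visible_items: List[str]) -> List[str]:
        # Call a tool to fetch the rules, then emit one instruction per item.
        rules = self.tools["lookup_local_rules"](location)
        steps = []
        for item in visible_items:
            # Assumption for this sketch: unknown items default to trash.
            bin_name = rules.get(item, "trash")
            steps.append(f"put the {item} in the {bin_name} bin")
        return steps


@dataclass
class Executor:
    """Toy stand-in for the vision-language-action model (the execution role)."""
    log: List[str] = field(default_factory=list)

    def execute(self, instruction: str) -> bool:
        # A real system would produce motor commands; here we only record the step.
        self.log.append(instruction)
        return True


def run_task(planner: Planner, executor: Executor,
             location: str, visible_items: List[str]) -> List[str]:
    """Run each planned step in order and stop at the first failed step."""
    completed = []
    for step in planner.plan(location, visible_items):
        if not executor.execute(step):
            break
        completed.append(step)
    return completed


if __name__ == "__main__":
    planner = Planner(tools={"lookup_local_rules": lookup_local_rules})
    for line in run_task(planner, Executor(), "example city", ["soda can", "banana peel"]):
        print(line)
```

Keeping the planner and executor behind separate interfaces mirrors the article's point that the two models are specialized for different roles and communicate through plain-language step instructions.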

Benchmarks and Capabilities

Google DeepMind attributes Robotics‑ER 1.5’s gains to stronger spatial understanding and long‑horizon planning, with top aggregated scores on academic suites such as ERQA, Point‑Bench, RefSpatial, RoboSpatial‑Pointing, Where2Place, BLINK, CV‑Bench, EmbSpatial, MindCube, RoboSpatial‑VQA, SAT, Cosmos‑Reason1, Min Video Pairs, OpenEQA and VSI‑Bench. Robotics 1.5’s “think‑then‑act” behavior lets it break lengthy goals into manageable segments and generalize to new tasks.

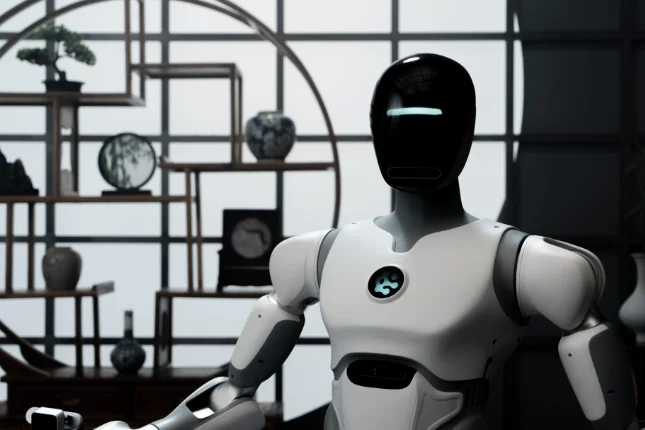

A notable result is cross‑embodiment learning: motions acquired on one platform (for instance, an ALOHA 2 setup) can transfer to others (such as Apptronik’s Apollo humanoid or a bi‑arm Franka system) without bespoke re‑training. Google DeepMind positions this as a way to cut integration time and make physical agents more broadly useful across hardware.

Safety and Governance

Google DeepMind said it is layering safety into the agent stack. A Responsibility & Safety Council and a Responsible Development & Innovation team support the robotics group to align with the company’s AI Principles. Robotics 1.5 applies high‑level semantic safety checks, described as “thinking about safety before acting,” and ties into low‑level onboard safety subsystems like collision avoidance when needed, the company pointed out. To evaluate progress, the company upgraded its ASIMOV benchmark with better tail coverage, richer annotations, new question types and video modalities; Robotics‑ER 1.5 shows state‑of‑the‑art results on the suite, according to Google DeepMind.
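The layering idea, a high-level semantic check followed by a low-level onboard check, can be sketched in a few lines. This is an illustration only and does not reflect how Google DeepMind implements safety: the `Action` type, the keyword filter, the `min_obstacle_distance_m` field and the 0.05 m threshold are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Action:
    description: str                 # natural-language description of the step
    min_obstacle_distance_m: float   # hypothetical reading from an onboard sensor


def semantic_check(action: Action) -> bool:
    """High-level layer: a toy keyword filter standing in for model-level safety reasoning."""
    banned_phrases = ("toward a person", "over a person")
    return not any(phrase in action.description for phrase in banned_phrases)


def collision_check(action: Action, threshold_m: float = 0.05) -> bool:
    """Low-level layer: reject motions that come closer than an arbitrary example threshold."""
    return action.min_obstacle_distance_m >= threshold_m


def is_allowed(action: Action, checks: Sequence[Callable[[Action], bool]]) -> bool:
    """An action proceeds only if every layer approves it."""
    return all(check(action) for check in checks)


if __name__ == "__main__":
    layers = (semantic_check, collision_check)
    print(is_allowed(Action("place the cup on the table", 0.20), layers))
    print(is_allowed(Action("swing the arm toward a person", 0.20), layers))
```

Because each layer is an independent function, either one can veto an action on its own, which is the property the layered description implies.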

Developer Access and What Comes Next

Developers can try Gemini Robotics‑ER 1.5 today through the Gemini API in Google AI Studio; Gemini Robotics 1.5 is limited to select partners during the initial rollout. Google DeepMind said documentation, examples and a technical report on safety accompany the release, and it frames the two‑model approach as a milestone toward general‑purpose robots that plan, reason and use tools to operate safely around people.

The full tech report can be found here.