Insider Brief

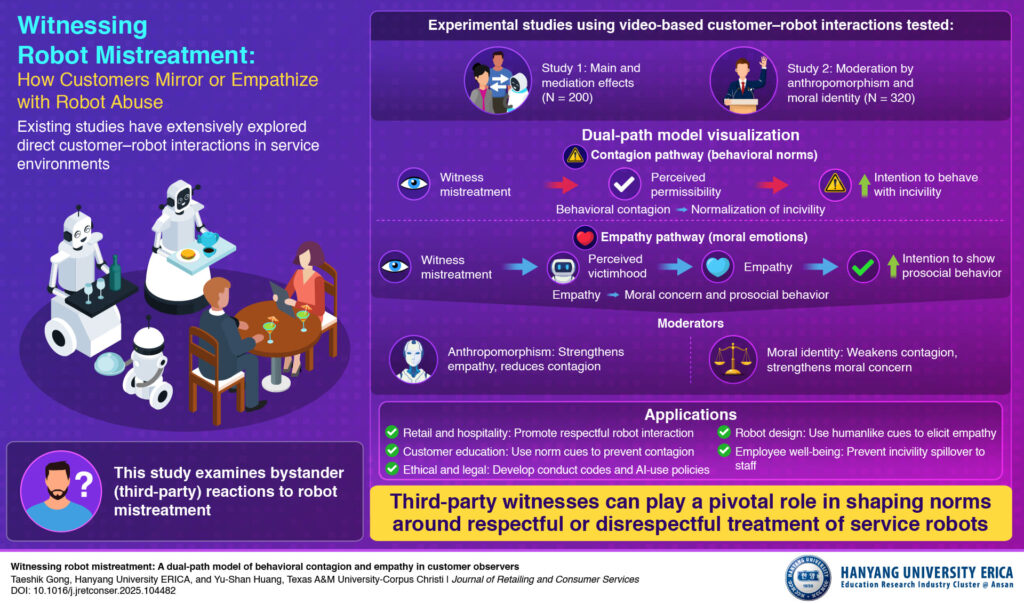

- Research from Hanyang University ERICA shows that witnessing mistreatment of service robots triggers either empathy or behavioral contagion, shaping customer behavior toward robots.

- The study identifies two pathways: empathy, which fosters prosocial behavior, and behavioral contagion, which normalizes incivility. Which pathway prevails depends on the robot’s humanlike traits and the observer’s moral identity.

- Findings suggest design and policy interventions, such as humanlike cues, prosocial messaging, and ethical conduct guidelines, can promote respectful human–robot interactions in service industries.

Image: Photo by TyliJura

PRESS RELEASE — Research from Hanyang University ERICA examines how customers respond when witnessing another customer mistreat a service robot. The study identifies two psychological pathways—behavioral contagion, which normalizes incivility, and empathy, which fosters prosocial behavior. These responses are influenced by the robot’s anthropomorphism and the observer’s moral identity, offering insights to guide ethical robot design and service management in hospitality, retail, and healthcare.

While many studies have explored direct human–robot interactions in sectors such as hospitality, retail, and healthcare, little is known about how customers react when they witness another person mistreating a service robot—a growing concern in technology-enabled service environments.

In a recent study, a research team led by Professor Taeshik Gong of Hanyang University ERICA, Republic of Korea, addressed this gap through experimental studies using video-based customer–robot interactions. The findings were made available online on 20 August 2025 and appear in Volume 88 of the Journal of Retailing and Consumer Services.

The researchers identify two distinct psychological pathways—behavioral contagion, which leads to the normalization of incivility, and empathy, which elicits moral concern and prosocial behavior. The findings show that whether observers mimic the incivility or respond with empathy depends on factors such as the robot’s humanlike design and the observer’s moral identity. This is the first empirical evidence that third-party observers can play a pivotal role in shaping norms around respectful or disrespectful treatment of service robots, with important implications for the design, deployment, and ethical use of robots in hospitality, retail, and other service settings.

The findings have practical applications across service settings. “An application of our research is in hotels, restaurants, airports, and retail stores, where robots increasingly serve customers. Managers can design training protocols for employees and visible norm cues such as signage, scripted responses, and pre-recorded reminders to discourage customer mistreatment of robots. This helps prevent a contagion of incivility among bystanders and promotes a respectful service climate,” explains Prof. Gong.

Notably, this study shows that anthropomorphism influences empathy. Designers can use humanlike cues, including expressive eyes, emotive voices, and subtle gestures, in frontline robots to increase bystanders’ empathic responses when mistreatment occurs. This not only improves likability but also acts as a moral signal, encouraging customers to treat robots with more dignity and reducing the spread of abusive behavior.

Moreover, companies can integrate prosocial messaging into customer touchpoints. Such messaging raises awareness that mistreating robots has social and ethical implications, even though the robots themselves are nonhuman. This research also provides evidence to support emerging policy discussions about whether and how robots should be protected in public spaces. If observers perceive robots as legitimate victims under certain conditions, organizations or regulators may consider codes of conduct or ethical guidelines for human–robot interaction in customer-facing settings.

Prof. Gong says, “Even though the study focuses on customers, the findings matter for employees too. If the mistreatment of robots is normalized, this may spill over into how customers treat human staff. Conversely, reinforcing prosocial norms toward robots can strengthen the overall moral climate of the service environment, benefiting employees’ dignity and customer satisfaction simultaneously. Within a decade, we may see relevant codes of conduct, workplace guidelines, or even legal provisions.”

Overall, this work can help normalize respectful human–robot interaction, inform robot design standards and ethical and policy frameworks, carry over into how people treat one another, and prepare us for human–AI futures. The findings also call for ethical robot-use guidelines to ensure respectful and prosocial human–AI interactions.